DP-203: Data Engineering on Microsoft Azure

You need to trigger an Azure Data Factory pipeline when a file arrives in an Azure Data Lake Storage Gen2 container.

Which resource provider should you enable?

Microsoft.Sql

Microsoft.Automation

Microsoft.EventGrid

Microsoft.EventHub

Answer is Microsoft.EventGrid

Event-driven architecture (EDA) is a common data integration pattern that involves production, detection, consumption, and reaction to events. Data integration scenarios often require Data Factory customers to trigger pipelines based on events happening in a storage account, such as the arrival or deletion of a file in an Azure Blob Storage account. Data Factory natively integrates with Azure Event Grid, which lets you trigger pipelines on such events.
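As a rough illustration of what such a trigger looks like, the sketch below builds a storage event trigger definition as a Python dictionary and prints it as JSON. The trigger name, container name, pipeline name, and storage account resource ID are hypothetical placeholders, and the property layout follows the storage event trigger schema as best understood here; treat it as a starting point rather than a verified deployment template.

```python
import json


def build_storage_event_trigger(storage_account_id: str, container: str, pipeline_name: str) -> dict:
    """Return a trigger definition that fires when a blob is created in the container.

    All names passed in are illustrative; nothing here is taken from the question.
    """
    return {
        "name": "FileArrivalTrigger",  # hypothetical trigger name
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                # Only blobs under this container raise the event.
                "blobPathBeginsWith": f"/{container}/",
                "ignoreEmptyBlobs": True,
                # Resource ID of the storage account that emits the events.
                "scope": storage_account_id,
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


if __name__ == "__main__":
    trigger = build_storage_event_trigger(
        "/subscriptions/<subscription-id>/resourceGroups/<rg>/providers/"
        "Microsoft.Storage/storageAccounts/<account>",
        "landing",
        "IngestPipeline",
    )
    print(json.dumps(trigger, indent=2))
```

The event delivery itself is handled by Event Grid, which is why the Microsoft.EventGrid resource provider must be registered in the subscription before a trigger like this can work.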

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-event-trigger

https://docs.microsoft.com/en-us/azure/data-factory/concepts-pipeline-execution-triggers

You have an Azure Data Factory instance that contains two pipelines named Pipeline1 and Pipeline2.

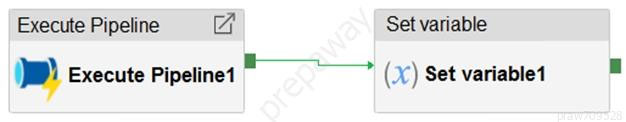

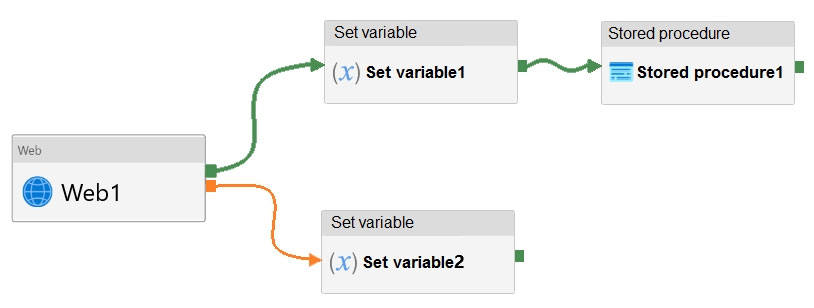

Pipeline1 has the activities shown in the following exhibit.

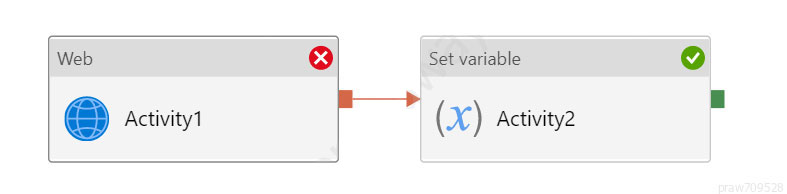

Pipeline2 has the activities shown in the following exhibit.

You execute Pipeline2, and Stored procedure1 in Pipeline1 fails.

What is the status of the pipeline runs?

Pipeline1 and Pipeline2 succeeded.

Pipeline1 and Pipeline2 failed.

Pipeline1 succeeded and Pipeline2 failed.

Pipeline1 failed and Pipeline2 succeeded.

Answer is Pipeline1 and Pipeline2 succeeded.

Activities are linked together via dependencies. A dependency has a condition of one of the following: Succeeded, Failed, Skipped, or Completed.

Consider Pipeline1:

If we have a pipeline with two activities where Activity2 has a failure dependency on Activity1, the pipeline will not fail just because Activity1 failed. If Activity1 fails and Activity2 succeeds, the pipeline will succeed. This scenario is treated as a try-catch block by Data Factory.

The failure dependency means this pipeline reports success.

Note:

If we have a pipeline containing Activity1 and Activity2, and Activity2 has a success dependency on Activity1, it will only execute if Activity1 is successful. In this scenario, if Activity1 fails, the pipeline will fail.

The trick is that Pipeline1 has only a Failure dependency between the activities, so the pipeline is marked Succeeded even though the stored procedure failed. If the Success output were also linked to a follow-up activity and the stored procedure failed, the pipeline would be marked Failed.
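The dependency rules described above can be sketched as a small simulator. This is a simplified model written for illustration, not Data Factory code: it assumes that an activity runs only when all of its dependency conditions are met, that the pipeline outcome is decided by its leaf activities, and that a skipped leaf is judged by its upstream activities instead. The function and activity names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# activity name -> list of (upstream activity, required condition)
Dependencies = Dict[str, List[Tuple[str, str]]]


def condition_met(condition: str, upstream_status: str) -> bool:
    """Check one dependency condition against the upstream activity's status."""
    if condition == "Completed":
        return upstream_status in ("Succeeded", "Failed")
    return condition == upstream_status


def run_pipeline(order: List[str], deps: Dependencies, failing: Set[str]) -> Dict[str, str]:
    """Simulate a run. `order` must list upstream activities before downstream ones."""
    statuses: Dict[str, str] = {}
    for name in order:
        if all(condition_met(cond, statuses[up]) for up, cond in deps.get(name, [])):
            statuses[name] = "Failed" if name in failing else "Succeeded"
        else:
            statuses[name] = "Skipped"
    return statuses


def pipeline_outcome(deps: Dependencies, statuses: Dict[str, str]) -> str:
    """Assumed rule: every leaf must succeed; a skipped leaf defers to its upstream."""
    upstream = {up for conditions in deps.values() for up, _ in conditions}
    leaves = [name for name in statuses if name not in upstream]

    def evaluate(name: str) -> bool:
        if statuses[name] != "Skipped":
            return statuses[name] == "Succeeded"
        return all(evaluate(up) for up, _ in deps.get(name, []))

    return "Succeeded" if all(evaluate(leaf) for leaf in leaves) else "Failed"


if __name__ == "__main__":
    order = ["Activity1", "Activity2"]
    for condition in ("Failed", "Succeeded"):
        deps = {"Activity2": [("Activity1", condition)]}
        statuses = run_pipeline(order, deps, failing={"Activity1"})
        print(condition, statuses, pipeline_outcome(deps, statuses))
```

With a Failed dependency, Activity2 runs and is the only leaf, so the pipeline reports success. With a Succeeded dependency, Activity2 is skipped and the failed Activity1 decides the outcome.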

Reference:

https://datasavvy.me/category/azure-data-factory/

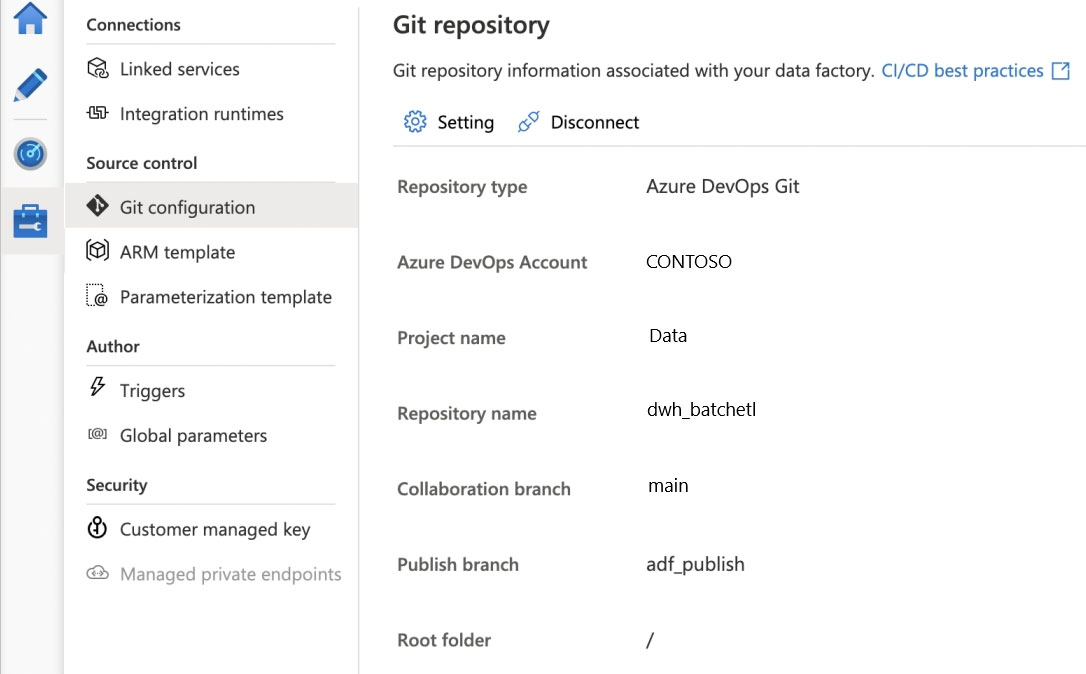

You configure version control for an Azure Data Factory instance as shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

Box 1: adf_publish

The Publish branch is the branch in your repository where publishing related ARM templates are stored and updated. By default, it's adf_publish.

Box 2: / dwh_batchetl/adf_publish/contososales

Note: RepositoryName (here dwh_batchetl): Your Azure Repos code repository name. Azure Repos projects contain Git repositories to manage your source code as your project grows. You can create a new repository or use an existing repository that's already in your project.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/source-control

You have an Azure Data Factory instance named ADF1 and two Azure Synapse Analytics workspaces named WS1 and WS2.

ADF1 contains the following pipelines:

● P1: Uses a copy activity to copy data from a nonpartitioned table in a dedicated SQL pool of WS1 to an Azure Data Lake Storage Gen2 account

● P2: Uses a copy activity to copy data from text-delimited files in an Azure Data Lake Storage Gen2 account to a nonpartitioned table in a dedicated SQL pool of WS2

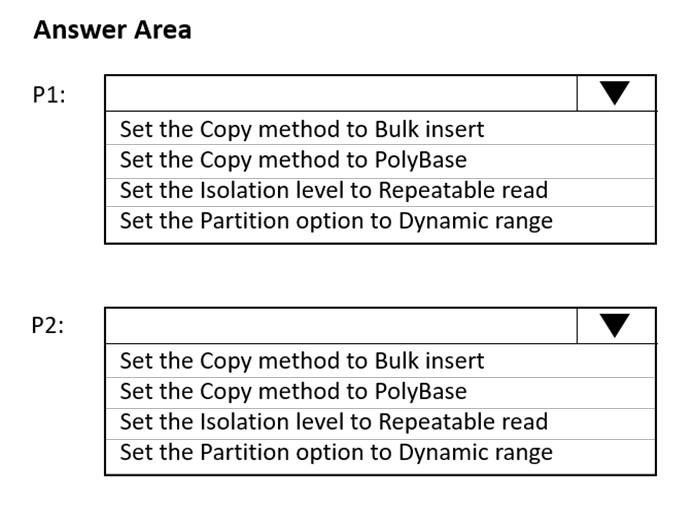

You need to configure P1 and P2 to maximize parallelism and performance.

Which dataset settings should you configure for the copy activity of each pipeline? To answer, select the appropriate options in the answer area.

Both answers are PolyBase.

PolyBase supports both export to and import from ADLS as documented here: https://docs.microsoft.com/en-us/sql/relational-databases/polybase/polybase-versioned-feature-summary

PolyBase does support delimited text files, which contradicts the question's official answer. "Currently PolyBase can load data from UTF-8 and UTF-16 encoded delimited text files as well as the popular Hadoop file formats RC File, ORC, and Parquet (non-nested format)."

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/load-data-overview

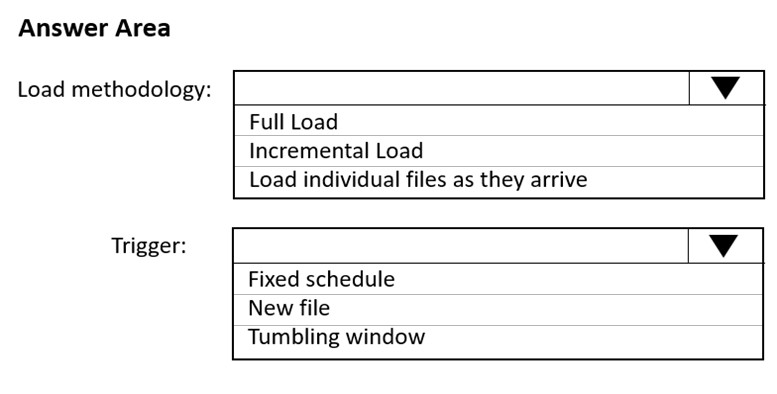

You have an Azure Storage account that generates 200,000 new files daily. The file names have a format of {YYYY}/{MM}/{DD}/{HH}/{CustomerID}.csv.

You need to design an Azure Data Factory solution that will load new data from the storage account to an Azure Data Lake once hourly. The solution must minimize load times and costs.

How should you configure the solution? To answer, select the appropriate options in the answer area.

Box 1: Incremental load

Box 2: Tumbling window

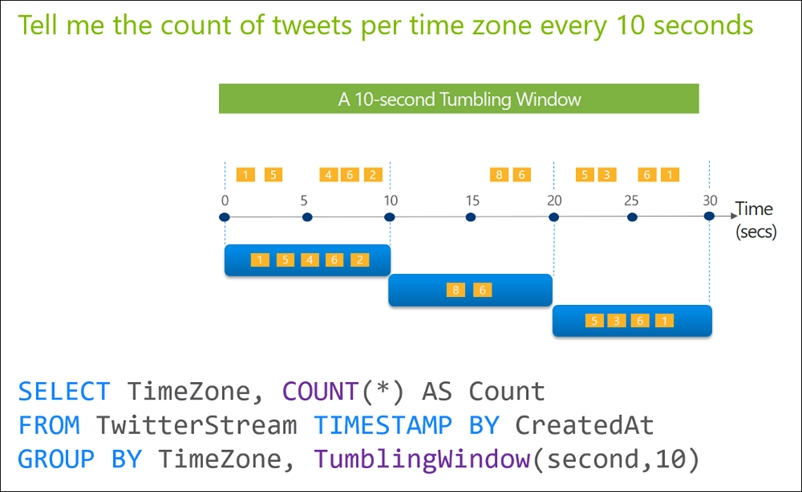

Tumbling windows are a series of fixed-sized, non-overlapping and contiguous time intervals. The following diagram illustrates a stream with a series of events and how they are mapped into 10-second tumbling windows.
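To make the window definition concrete for the hourly load in this question, the sketch below generates one-hour tumbling windows and maps each window start to the {YYYY}/{MM}/{DD}/{HH} folder prefix used by the file names. It is plain Python for illustration only; the function names are hypothetical and it does not call any Data Factory API.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterator, Tuple


def tumbling_windows(
    start: datetime, end: datetime, size: timedelta
) -> Iterator[Tuple[datetime, datetime]]:
    """Yield fixed-size, contiguous, non-overlapping (window_start, window_end) pairs."""
    cursor = start
    while cursor + size <= end:
        yield cursor, cursor + size
        cursor += size


def folder_prefix(window_start: datetime) -> str:
    """Map a window start to the {YYYY}/{MM}/{DD}/{HH}/ folder that holds its files."""
    return window_start.strftime("%Y/%m/%d/%H/")


if __name__ == "__main__":
    first = datetime(2024, 1, 1, 0, tzinfo=timezone.utc)
    for window_start, window_end in tumbling_windows(
        first, first + timedelta(hours=3), timedelta(hours=1)
    ):
        print(window_start.isoformat(), window_end.isoformat(), folder_prefix(window_start))
```

Because each window covers exactly one hour and the folder name encodes that hour, each run only needs to read one folder, which is what keeps the incremental load small.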

Reference:

https://docs.microsoft.com/en-us/stream-analytics-query/tumbling-window-azure-stream-analytics

You are designing a solution that will copy Parquet files stored in an Azure Blob storage account to an Azure Data Lake Storage Gen2 account.

The data will be loaded daily to the data lake and will use a folder structure of {Year}/{Month}/{Day}/.

You need to design a daily Azure Data Factory data load to minimize the data transfer between the two accounts.

Which two configurations should you include in the design? Each correct answer presents part of the solution.

Specify a file naming pattern for the destination.

Delete the files in the destination before loading the data.

Filter by the last modified date of the source files.

Delete the source files after they are copied.

Answer is "Filter by the last modified date of the source files" and "Delete the source files after they are copied".

Copy only the daily files by using filtering.
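The filtering idea can be sketched in a few lines of Python. This is an illustration of the selection logic only, under the assumption that the filter is a half-open window (start inclusive, end exclusive), which is how the copy activity's modifiedDatetimeStart and modifiedDatetimeEnd settings are understood to behave. The SourceFile type and the file paths are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import List, NamedTuple


class SourceFile(NamedTuple):
    path: str
    last_modified: datetime


def select_modified_between(
    files: List[SourceFile], start: datetime, end: datetime
) -> List[SourceFile]:
    """Keep files whose last-modified time falls in the half-open window [start, end)."""
    return [f for f in files if start <= f.last_modified < end]


if __name__ == "__main__":
    day = datetime(2024, 1, 2, tzinfo=timezone.utc)
    files = [
        SourceFile("old.parquet", day - timedelta(hours=5)),
        SourceFile("today.parquet", day + timedelta(hours=3)),
        SourceFile("tomorrow.parquet", day + timedelta(days=1)),
    ]
    for f in select_modified_between(files, day, day + timedelta(days=1)):
        print(f.path)
```

Only files changed during the day being loaded are selected, so files from earlier days are never transferred again.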

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

You have an Azure Data Factory version 2 (V2) resource named Df1. Df1 contains a linked service.

You have an Azure Key vault named vault1 that contains an encryption key named key1.

You need to encrypt Df1 by using key1.

What should you do first?

Add a private endpoint connection to vault1.

Enable Azure role-based access control on vault1.

Remove the linked service from Df1.

Create a self-hosted integration runtime.

Answer is Remove the linked service from Df1.

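A customer-managed key can be configured only on an empty data factory. The factory can't contain any resources such as linked services, pipelines, or data flows, so the existing linked service must be removed first. Linked services are much like connection strings, which define the connection information needed for Data Factory to connect to external resources.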

Incorrect Answers:

D: A self-hosted integration runtime copies data between an on-premises store and cloud storage.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/enable-customer-managed-key

https://docs.microsoft.com/en-us/azure/data-factory/concepts-linked-services

https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime

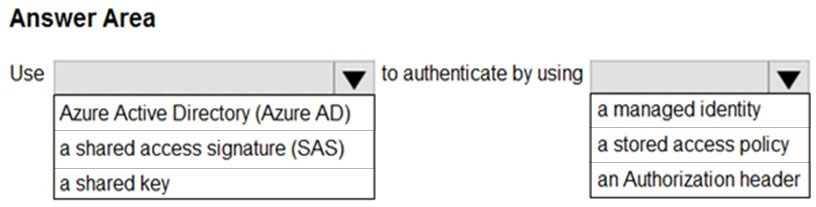

You have an Azure subscription that contains an Azure Data Lake Storage account. The storage account contains a data lake named DataLake1.

You plan to use an Azure data factory to ingest data from a folder in DataLake1, transform the data, and land the data in another folder.

You need to ensure that the data factory can read and write data from any folder in the DataLake1 file system. The solution must meet the following requirements:

● Minimize the risk of unauthorized user access.

● Use the principle of least privilege.

● Minimize maintenance effort.

How should you configure access to the storage account for the data factory?

Box 1: Azure Active Directory (Azure AD)

On Azure, managed identities eliminate the need for developers to manage credentials by providing an identity for the Azure resource in Azure AD and using it to obtain Azure Active Directory (Azure AD) tokens.

Box 2: a managed identity

A data factory can be associated with a managed identity for Azure resources, which represents this specific data factory. You can directly use this managed identity for Data Lake Storage Gen2 authentication, similar to using your own service principal. It allows this designated factory to access and copy data to or from your Data Lake Storage Gen2.

Note: The Azure Data Lake Storage Gen2 connector supports the following authentication types.

● Account key authentication

● Service principal authentication

● Managed identities for Azure resources authentication
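For comparison with the other authentication types, the sketch below builds a Data Lake Storage Gen2 linked service definition that relies on the factory's managed identity. It assumes the system-assigned identity is used, in which case the definition carries only the account URL and no key or secret. The linked service name and account name are hypothetical, and the identity would still need a suitable data role on the storage account (for example Storage Blob Data Contributor for read and write access).

```python
import json


def build_adls_gen2_linked_service(account_name: str) -> dict:
    """Return a linked service definition with no stored credentials.

    Assumption: with the system-assigned managed identity, only the endpoint URL
    is required in typeProperties.
    """
    return {
        "name": "DataLake1LinkedService",  # hypothetical linked service name
        "properties": {
            "type": "AzureBlobFS",
            "typeProperties": {
                "url": f"https://{account_name}.dfs.core.windows.net",
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_adls_gen2_linked_service("<accountname>"), indent=2))
```

The absence of any key or secret in the definition is what reduces both the risk of unauthorized access and the maintenance effort, since there is nothing to rotate.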

Reference:

https://docs.microsoft.com/en-us/azure/active-directory/managed-identities-azure-resources/overview

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

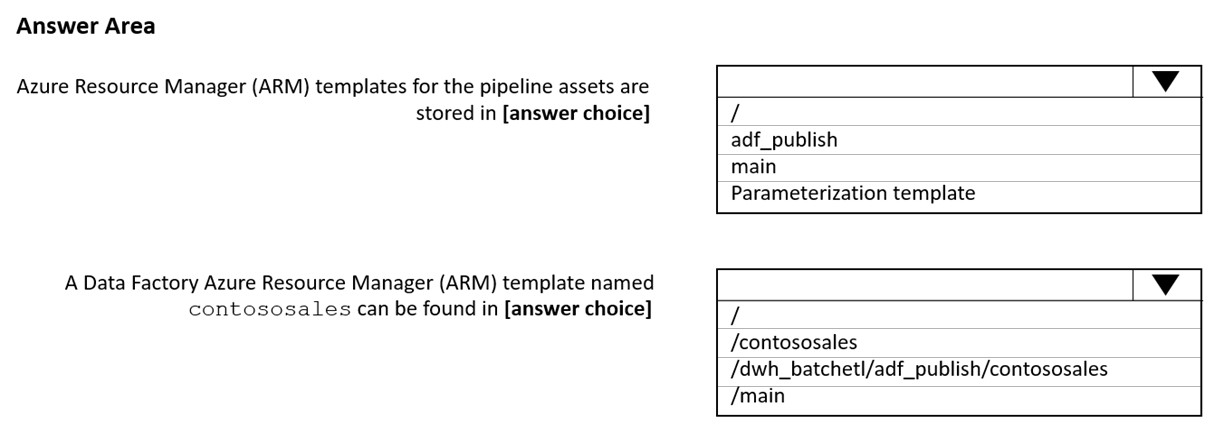

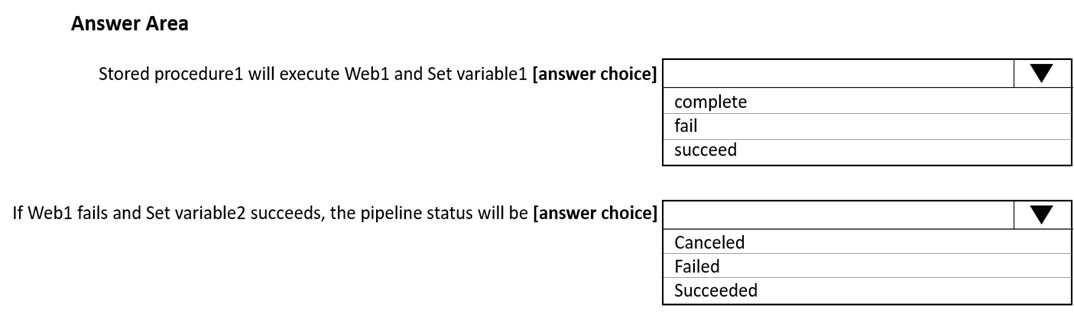

You have an Azure Data Factory pipeline that has the activities shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Box 1: succeed

Box 2: failed

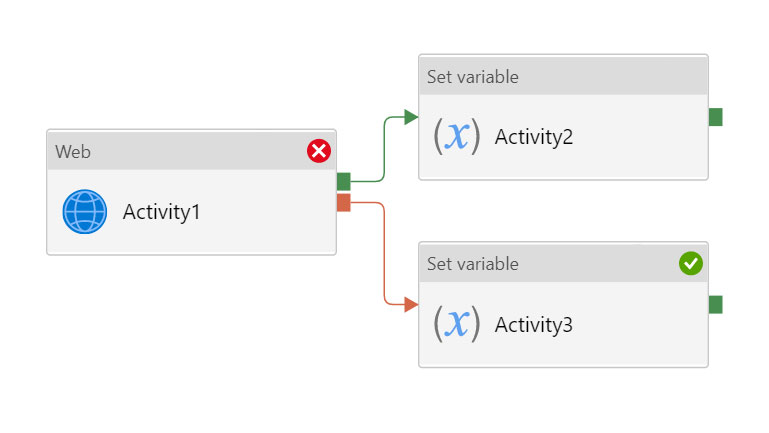

Example:

Now let's say we have a pipeline with 3 activities, where Activity1 has a success path to Activity2 and a failure path to Activity3. If Activity1 fails and Activity3 succeeds, the pipeline will fail. The presence of the success path alongside the failure path changes the outcome reported by the pipeline, even though the activity executions from the pipeline are the same as the previous scenario.

Activity1 fails, Activity2 is skipped, and Activity3 succeeds. The pipeline reports failure.

Reference:

https://datasavvy.me/2021/02/18/azure-data-factory-activity-failures-and-pipeline-outcomes/

You have several Azure Data Factory pipelines that contain a mix of the following types of activities:

● Wrangling data flow

● Notebook

● Copy

● Jar

Which two Azure services should you use to debug the activities? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Azure Synapse Analytics

Azure HDInsight

Azure Machine Learning

Azure Data Factory

Azure Databricks

Answer is Azure Data Factory and Azure Databricks.

1. Data wrangling is supported only by ADF, not by Synapse Analytics.

2. The Notebook and Jar activities require Databricks.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/wrangling-overview

https://docs.microsoft.com/en-us/azure/data-factory/transform-data-databricks-jar