DP-203: Data Engineering on Microsoft Azure

You are planning the deployment of Azure Data Lake Storage Gen2.

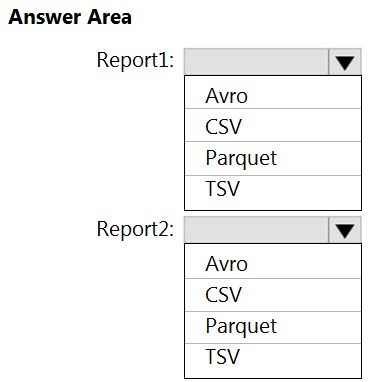

You have the following two reports that will access the data lake:

- Report1: Reads three columns from a file that contains 50 columns.

- Report2: Queries a single record based on a timestamp.

You need to recommend in which format to store the data in the data lake to support the reports. The solution must minimize read times.

What should you recommend for each report?

Report1: Parquet, a column-oriented binary file format, so only the three required columns have to be read.

Report2: Avro, a row-based format that supports a timestamp logical type, so a single record can be read as one unit.

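Not Parquet or TSV for Report2: Parquet stores data by column, so rebuilding one full record means reading from every column, and TSV is plain text with no typed timestamp.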
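The toy Python model below shows why a column layout suits Report1 and a row layout suits Report2. It is a hypothetical sketch, not the Parquet or Avro libraries: it counts how many stored values a three-column projection touches and how many separate storage locations must be visited to rebuild one 50-column record. It ignores compression, encodings, row groups, and file statistics, all of which affect real read times.

```python
# Toy model: row-oriented (Avro-like) vs column-oriented (Parquet-like) storage.
# Hypothetical sketch; ignores compression, encodings, row groups, and file statistics.
from __future__ import annotations

NUM_ROWS = 1_000
NUM_COLS = 50
COLUMNS = [f"c{j}" for j in range(NUM_COLS)]

# Synthetic records; column "c0" stands in for the timestamp.
records = [{c: i * NUM_COLS + j for j, c in enumerate(COLUMNS)} for i in range(NUM_ROWS)]

# Row layout: all fields of a record are stored together.
row_layout = [[rec[c] for c in COLUMNS] for rec in records]

# Column layout: all values of a column are stored together.
column_layout = {c: [rec[c] for rec in records] for c in COLUMNS}


def projection_cost(layout: str, wanted: list[str]) -> int:
    """Values read to return `wanted` columns for every row (Report1)."""
    if layout == "row":
        # Whole records are read, including columns that were not requested.
        return sum(len(stored) for stored in row_layout)
    # Only the requested column chunks are read.
    return sum(len(column_layout[c]) for c in wanted)


def lookup_locations(layout: str, row_index: int) -> int:
    """Separate storage locations visited to rebuild one full record (Report2)."""
    if layout == "row":
        _record = row_layout[row_index]  # one contiguous read
        return 1
    # One value has to be fetched from each of the 50 column chunks.
    record = [column_layout[c][row_index] for c in COLUMNS]
    return len(record)


if __name__ == "__main__":
    wanted = ["c1", "c2", "c3"]
    print("Report1, row layout, values read:", projection_cost("row", wanted))           # 50000
    print("Report1, column layout, values read:", projection_cost("column", wanted))     # 3000
    print("Report2, row layout, locations visited:", lookup_locations("row", 42))        # 1
    print("Report2, column layout, locations visited:", lookup_locations("column", 42))  # 50
```

The projection favors the column layout by a factor of NUM_COLS / 3, while the single-record lookup favors the row layout because the record is stored contiguously.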

Reference:

https://streamsets.com/documentation/datacollector/latest/help/datacollector/UserGuide/Destinations/ADLS-G2-D.html

https://youtu.be/UrWthx8T3UY

An application will use Microsoft Azure Cosmos DB as its data solution. The application will use the Cassandra API to support a column-based database type that uses containers to store items.

You need to provision Azure Cosmos DB.

Which container name and item name should you use?

Each correct answer presents part of the solution.

collection

rows

graph

entities

table

Answer is B (rows) and E (table).

B (rows) because, depending on which API you use, an Azure Cosmos item can represent either a document in a collection, a row in a table, or a node or edge in a graph. The following table shows the mapping of API-specific entities to an Azure Cosmos item:

| Azure Cosmos entity | SQL API | Cassandra API | Azure Cosmos DB API for MongoDB | Gremlin API | Table API |
|---|---|---|---|---|---|
| Azure Cosmos item | Document | Row | Document | Node or edge | Item |

E (table) because an Azure Cosmos container is specialized into API-specific entities, as shown in the following table:

| Azure Cosmos entity | SQL API | Cassandra API | Azure Cosmos DB API for MongoDB | Gremlin API | Table API |
|---|---|---|---|---|---|
| Azure Cosmos container | Container | Table | Collection | Graph | Table |
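The two tables above can be captured as a lookup from each API to its (container, item) names. This is a hypothetical study helper built only from those tables, not part of any Azure SDK.

```python
# API -> (container term, item term), transcribed from the two mapping tables.
# Hypothetical study helper; not an Azure SDK API.
from __future__ import annotations

API_TERMS: dict[str, tuple[str, str]] = {
    "SQL": ("Container", "Document"),
    "Cassandra": ("Table", "Row"),
    "MongoDB": ("Collection", "Document"),
    "Gremlin": ("Graph", "Node or edge"),
    "Table": ("Table", "Item"),
}


def cosmos_terms(api: str) -> tuple[str, str]:
    """Return the names an API uses for an Azure Cosmos container and item."""
    try:
        return API_TERMS[api]
    except KeyError:
        raise ValueError(f"unknown API: {api!r}") from None
```

For the Cassandra API, `cosmos_terms("Cassandra")` returns `("Table", "Row")`, which matches answers E and B.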

References: https://docs.microsoft.com/en-us/azure/cosmos-db/databases-containers-items

You are developing a data engineering solution for a company. The solution will store a large set of key-value pair data by using Microsoft Azure Cosmos DB.

The solution has the following requirements:

- Data must be partitioned into multiple containers.

- Data containers must be configured separately.

- Data must be accessible from applications hosted around the world.

- The solution must minimize latency.

You need to provision Azure Cosmos DB.

Configure account-level throughput.

Provision an Azure Cosmos DB account with the Azure Table API. Enable geo-redundancy.

Configure table-level throughput.

Replicate the data globally by manually adding regions to the Azure Cosmos DB account.

Provision an Azure Cosmos DB account with the Azure Table API. Enable multi-region writes.

Answer is Provision an Azure Cosmos DB account with the Azure Table API. Enable multi-region writes.

Scale read and write throughput globally: you can make every region writable and elastically scale reads and writes around the world. The throughput that your application configures on an Azure Cosmos database or container is guaranteed to be delivered across all regions associated with your Azure Cosmos account, and the provisioned throughput is covered by financially backed SLAs.
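The configuration described above can be pictured with a small Python model. Everything here is hypothetical: the class, the region names, the table names, and the RU/s values are illustrative, and this is not the Azure Cosmos DB SDK. Per-table throughput is modeled as one way to read the "configured separately" requirement. The model only shows two ideas from the paragraph: with multi-region writes every region accepts writes, and each table's provisioned throughput is available in full in every region rather than split among them.

```python
# Hypothetical in-memory model of a Table API account; not the Azure Cosmos DB SDK.
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TableApiAccount:
    regions: list[str]          # illustrative region names
    multi_region_writes: bool
    table_rus: dict[str, int] = field(default_factory=dict)  # RU/s set separately per table

    def writable_regions(self) -> list[str]:
        # Multi-region writes: every region accepts writes.
        # Otherwise this model treats the first listed region as the single write region.
        return list(self.regions) if self.multi_region_writes else self.regions[:1]

    def rus_in_region(self, table: str, region: str) -> int:
        # Provisioned throughput is delivered in full in each associated region,
        # not divided among the regions.
        if region not in self.regions:
            raise ValueError(f"{region!r} is not part of this account")
        return self.table_rus[table]


account = TableApiAccount(
    regions=["westus", "northeurope", "southeastasia"],
    multi_region_writes=True,
    table_rus={"orders": 4000, "sessions": 1000},
)
```

In this model, `account.rus_in_region("orders", "southeastasia")` is 4000, the same as in West US, and all three regions are writable.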

References:

https://docs.microsoft.com/en-us/azure/cosmos-db/distribute-data-globally

You want to ensure that there is 99.999% availability for the reading and writing of all your data. How can this be achieved?

By configuring reads and writes of data in a single region.

By configuring reads and writes of data for multi-region accounts with multi-region writes.

By configuring reads and writes of data for multi-region accounts with single-region writes.

Answer is "By configuring reads and writes of data for multi-region accounts with multi-region writes."

By configuring reads and writes of data for multi-region accounts with multi-region writes, you can achieve 99.999% availability for both reads and writes.
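To put the percentages in perspective, the sketch below converts an availability target into the maximum downtime it allows per year and checks which of the three configurations reaches 99.999% for both reads and writes. The per-configuration figures are an assumption taken from Microsoft's documented SLA for accounts without availability zones (single region: 99.99%; multiple regions with one write region: 99.999% reads, 99.99% writes; multiple write regions: 99.999% for both); verify them against the current SLA.

```python
# Availability targets (percent) per configuration, assuming no availability zones.
from __future__ import annotations

SLA = {
    "single region": {"read": 99.99, "write": 99.99},
    "multi-region, single-region writes": {"read": 99.999, "write": 99.99},
    "multi-region, multi-region writes": {"read": 99.999, "write": 99.999},
}

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years


def max_downtime_minutes(availability_pct: float) -> float:
    """Downtime per year allowed by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


def configs_meeting(target_pct: float) -> list[str]:
    """Configurations whose read AND write targets both reach target_pct."""
    return [name for name, t in SLA.items() if min(t["read"], t["write"]) >= target_pct]


print(round(max_downtime_minutes(99.99), 2))   # about 52.56 minutes per year
print(round(max_downtime_minutes(99.999), 3))  # about 5.256 minutes per year
print(configs_meeting(99.999))                 # only the multi-region-writes configuration
```

Moving from 99.99% to 99.999% cuts the allowed downtime by a factor of ten, and only the configuration with multi-region writes meets 99.999% on the write path.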

What are the three main advantages to using Cosmos DB?

Cosmos DB offers global distribution capabilities out of the box.

Cosmos DB provides a minimum of 99.99% availability.

Cosmos DB read/write response times are typically on the order of 10s of milliseconds.

All of the above.

Answer is All of the above.

All of the above: Cosmos DB offers global distribution capabilities out of the box, provides a minimum of 99.99% availability, and its read/write response times are typically on the order of 10s of milliseconds.

You are a data engineer wanting to make the data that is currently stored in a Table Storage account located in the West US region available globally. Which Cosmos DB model should you migrate to?

Gremlin API

Cassandra API

Table API

MongoDB API

Answer is Table API

The Table API model lets you make the data in your Table Storage account globally available. The Gremlin API is used for graph databases, the Cassandra API for data from Cassandra databases, and the MongoDB API for MongoDB databases.

What type of data model provides a traversal language that enables connections and traversals across connected data?

Gremlin API

Cassandra API

Table API

MongoDB API

Answer is Gremlin API

The Gremlin API is used for graph databases and provides a traversal language that enables connections and traversals across connected data. The Cassandra API is used for data from Cassandra databases, the Table API for key-value pair data, and the MongoDB API for MongoDB databases.
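A traversal follows edges from vertex to vertex. The toy Python sketch below mimics what a Gremlin query such as `g.V('ann').out('knows').out('knows')` (friends of friends) does over a tiny in-memory edge list. The vertex ids, edge labels, and the `out` helper are hypothetical; against a real Gremlin API account the Gremlin query itself would run server-side.

```python
# Toy graph traversal mimicking Gremlin's out(label) step. Data is hypothetical.
from __future__ import annotations

# Edges as (source vertex, edge label, target vertex).
EDGES = [
    ("ann", "knows", "bob"),
    ("ann", "knows", "cara"),
    ("bob", "knows", "dan"),
    ("cara", "knows", "dan"),
    ("cara", "worksAt", "contoso"),
]


def out(vertices: list[str], label: str) -> list[str]:
    """Follow outgoing edges with `label` from each vertex, keeping one result per path."""
    return [dst for v in vertices for (src, lbl, dst) in EDGES if src == v and lbl == label]


# Equivalent of g.V('ann').out('knows').out('knows').
friends_of_friends = out(out(["ann"], "knows"), "knows")
```

`friends_of_friends` is `["dan", "dan"]` because two paths reach dan; Gremlin likewise keeps one traverser per path unless the query adds a `dedup()` step.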

Suppose you are using Visual Studio Code to develop a .NET Core application that accesses Azure Cosmos DB. You need to include the connection string for your database in your application configuration. What is the most convenient way to get this information into your project?

Directly from Visual Studio Code

From the Azure portal

Using the Azure CLI

Answer is Directly from Visual Studio Code

The Azure Cosmos DB extension lets you create and administer Azure Cosmos DB accounts and databases from within Visual Studio Code, including copying an account's connection string. The Azure portal does not help with developing a .NET Core application, and the Azure CLI is better suited to automation.

When working with Azure Cosmos DB's SQL API, which of these can be used to perform CRUD operations?

LINQ

Apache Cassandra client libraries

Azure Table Storage libraries

Answer is LINQ

LINQ and SQL are two of the valid ways to query the SQL API. Apache Cassandra client libraries are used with the Cassandra API, and Azure Table Storage libraries with the Table API, not the SQL API.

When working with the Azure Cosmos DB Client SDK's DocumentClient class, you use a NoSQL model. How would you use this class to change the FirstName field of a Person document from 'Ann' to 'Fran'?

Call UpdateDocumentAsync with FirstName=Fran

Call UpsertDocumentAsync with an updated Person object

Call ReplaceDocumentAsync with an updated Person object

Answer is Call ReplaceDocumentAsync with an updated Person object

ReplaceDocumentAsync replaces the existing document with the new one. In this case, the old and new documents are intended to be identical except for FirstName.

The DocumentClient class doesn't have an UpdateDocumentAsync method. Updating a single field is not consistent with the document-style NoSQL approach.

While calling UpsertDocumentAsync with an updated Person object would work, it isn't the minimum access necessary to meet the requirements. Upsert operations replace a document if its key already exists or add a new document if not. We don't want to add a new one, so using this method risks introducing subtle, hard-to-track bugs.
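The difference between replace and upsert is easiest to see with a typo in the document id. The Python sketch below is a hypothetical in-memory store, not the Cosmos DB SDK, that mimics the two semantics: replace fails when the id does not exist (Cosmos DB answers such a request with 404 Not Found), while upsert silently inserts. It keys documents by `id` only and ignores partition keys.

```python
# Hypothetical in-memory store mimicking replace vs upsert semantics; not the Cosmos DB SDK.
from __future__ import annotations


class NotFoundError(KeyError):
    """Raised when replacing a document whose id does not exist."""


class DocumentStore:
    def __init__(self) -> None:
        self._docs: dict[str, dict] = {}

    def create(self, doc: dict) -> None:
        self._docs[doc["id"]] = dict(doc)

    def replace(self, doc: dict) -> None:
        # Replace only succeeds when the document already exists.
        if doc["id"] not in self._docs:
            raise NotFoundError(doc["id"])
        self._docs[doc["id"]] = dict(doc)

    def upsert(self, doc: dict) -> None:
        # Upsert replaces an existing document or silently inserts a new one.
        self._docs[doc["id"]] = dict(doc)

    def get(self, doc_id: str) -> dict:
        return self._docs[doc_id]

    def count(self) -> int:
        return len(self._docs)


store = DocumentStore()
store.create({"id": "p1", "FirstName": "Ann"})
store.replace({"id": "p1", "FirstName": "Fran"})  # updates the existing Person

# Typo in the id ("pl" instead of "p1"): replace fails loudly...
try:
    store.replace({"id": "pl", "FirstName": "Fran"})
except NotFoundError:
    pass
# ...while upsert quietly adds a second Person document.
store.upsert({"id": "pl", "FirstName": "Fran"})
```

After these calls the store holds two Person documents, which is exactly the kind of subtle bug that choosing replace over upsert avoids.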