DP-203: Data Engineering on Microsoft Azure

You are monitoring an Azure Stream Analytics job.

The Backlogged Input Events count has been 20 for the last hour.

You need to reduce the Backlogged Input Events count.

What should you do?

Add an Azure Storage account to the job

Increase the streaming units for the job

Stop the job

Drop late arriving events from the job

Answer is Increase the streaming units for the job

General symptoms of the job hitting system resource limits include:

- If the backlog event metric keeps increasing, it's an indicator that the system resource is constrained (either because of output sink throttling, or high CPU).

Note: Backlogged Input Events is the number of input events that are backlogged. A non-zero value for this metric implies that your job isn't able to keep up with the number of incoming events. If this value is slowly increasing or consistently non-zero, you should scale out your job by increasing its Streaming Units.
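The scale-out rule in the note (consistently non-zero or slowly increasing backlog) can be sketched as a check over recent metric samples. This is a minimal sketch, not an Azure API: the function name `should_scale_out`, the 5-minute sampling interval and the 12-sample window are assumptions. In practice you would put an Azure Monitor alert on the metric and then raise the job's Streaming Units.

```python
from typing import Sequence


def should_scale_out(backlog_samples: Sequence[int], window: int = 12) -> bool:
    """Return True when the backlog is consistently non-zero or steadily growing.

    backlog_samples: recent Backlogged Input Events values, oldest first
    (hypothetical export, e.g. one sample every 5 minutes).
    window: number of most recent samples to inspect (assumed value).
    """
    recent = list(backlog_samples)[-window:]
    if not recent:
        return False
    # Every recent sample is above zero: the job never catches up.
    consistently_nonzero = all(v > 0 for v in recent)
    # Never decreasing and ending higher than it started: slowly increasing.
    increasing = (
        len(recent) >= 2
        and all(b >= a for a, b in zip(recent, recent[1:]))
        and recent[-1] > recent[0]
    )
    return consistently_nonzero or increasing
```

With 5-minute samples, the scenario in the question (a backlog of 20 for the last hour) is `should_scale_out([20] * 12)`, which returns `True`.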

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-scale-jobs

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-monitoring

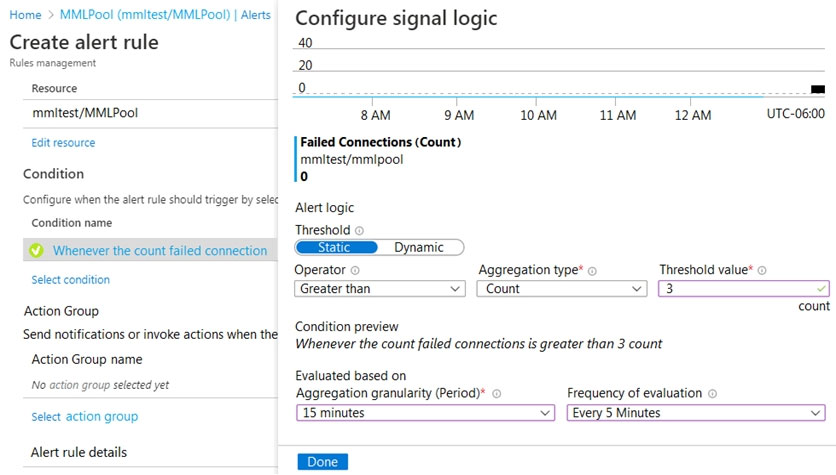

You have an alert on a SQL pool in Azure Synapse that uses the signal logic shown in the exhibit.

On the same day, failures occur at the following times:

- 08:01

- 08:03

- 08:04

- 08:06

- 08:11

- 08:16

- 08:19

The evaluation period starts on the hour.

At which times will alert notifications be sent?

08:15 only

08:10, 08:15, and 08:20

08:05 and 08:10 only

08:10 only

08:05 only

Answer is 08:10, 08:15, and 08:20

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/alerts-insights-configure-portal

https://docs.microsoft.com/en-us/azure/azure-monitor/alerts/alerts-unified-log

You plan to monitor the performance of Azure Blob storage by using Azure Monitor.

You need to be notified when there is a change in the average time it takes for a storage service or API operation type to process requests.

For which two metrics should you set up alerts?

SuccessE2ELatency

SuccessServerLatency

UsedCapacity

Egress

Ingress

Answers are:

SuccessE2ELatency

SuccessServerLatency

Success E2E Latency: The average end-to-end latency of successful requests made to a storage service or the specified API operation. This value includes the required processing time within Azure Storage to read the request, send the response, and receive acknowledgment of the response.

Success Server Latency: The average time used to process a successful request by Azure Storage. This value does not include the network latency specified in SuccessE2ELatency.
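Alerting on both metrics helps because end-to-end latency includes the network path and server latency does not, so their difference roughly approximates time spent outside Azure Storage. The sketch below shows that arithmetic plus a naive "has it changed?" test. The function names and the 50% tolerance are hypothetical choices for illustration; Azure Monitor alert rules use their own static or dynamic thresholds.

```python
from statistics import mean
from typing import Sequence


def network_latency_ms(e2e_ms: float, server_ms: float) -> float:
    """Approximate time spent outside Azure Storage (network plus client).

    Clamped at zero because averaged metrics can occasionally cross.
    """
    return max(e2e_ms - server_ms, 0.0)


def latency_changed(history_ms: Sequence[float], current_ms: float,
                    tolerance: float = 0.5) -> bool:
    """True if current_ms deviates from the historical mean by more than
    `tolerance` (a fraction of the mean). history_ms must be non-empty."""
    baseline = mean(history_ms)
    return abs(current_ms - baseline) > tolerance * baseline
```

For example, an E2E latency of 12 ms with a server latency of 4 ms suggests about 8 ms is spent on the network or client side. If only the E2E metric moves, the change is probably outside the storage service.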

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-scalable-app-verify-metrics

You need to receive an alert when Azure Synapse Analytics consumes the maximum allotted resources.

Which resource type and signal should you use to create the alert in Azure Monitor?

A-A

B-C

C-D

A-C

B-D

C-C

D-B

D-C

Answer is C - C

Resource type: SQL data warehouse

DWU limit belongs to the SQL data warehouse resource type.

Signal: DWU limit

SQL Data Warehouse capacity limits are maximum values allowed for various components of Azure SQL Data Warehouse.

Reference:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-insights-alerts-portal

You are designing a highly available Azure Data Lake Storage solution that will include geo-zone-redundant storage (GZRS).

You need to monitor for replication delays that can affect the recovery point objective (RPO).

What should you include in the monitoring solution?

5xx: Server Error errors

Average Success E2E Latency

availability

Last Sync Time

Answer is Last Sync Time

Because geo-replication is asynchronous, it is possible that data written to the primary region has not yet been written to the secondary region at the time an outage occurs. The Last Sync Time property indicates the last time that data from the primary region was written successfully to the secondary region. All writes made to the primary region before the last sync time are available to be read from the secondary location. Writes made to the primary region after the last sync time property may or may not be available for reads yet.
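The monitoring logic follows from this definition: anything written after the Last Sync Time is at risk if the primary region fails, so the gap between "now" and the Last Sync Time is the current replication lag. A minimal sketch, assuming you have already read the Last Sync Time as a UTC timestamp (the referenced article shows how to retrieve it). The function names and the 15-minute RPO target are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def replication_lag(last_sync_time: datetime,
                    now: Optional[datetime] = None) -> timedelta:
    """Time elapsed since data was last written successfully to the secondary region."""
    now = now or datetime.now(timezone.utc)
    return now - last_sync_time


def rpo_at_risk(last_sync_time: datetime, rpo: timedelta,
                now: Optional[datetime] = None) -> bool:
    """True when writes newer than the RPO target could be lost on failover."""
    return replication_lag(last_sync_time, now) > rpo


# Usage with a hypothetical 15-minute RPO target.
t_sync = datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)
print(rpo_at_risk(t_sync, timedelta(minutes=15),
                  now=t_sync + timedelta(minutes=20)))  # True
```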

Any blob, file, queue, or table operation latency can cause cascading slowdowns in your application. The Success E2E Latency metric measures the total amount of time it takes for requests to be processed by the storage account APIs, sent to the client, and then acknowledged by the client.

Reference:

https://docs.microsoft.com/en-us/azure/storage/common/last-sync-time-get

A company has a SaaS solution that uses Azure SQL Database with elastic pools. The solution contains a dedicated database for each customer organization. Customer organizations have peak usage at different periods during the year.

You need to implement the Azure SQL Database elastic pool to minimize cost.

Which option or options should you configure?

Number of transactions only

eDTUs per database only

Number of databases only

CPU usage only

eDTUs and max data size

Answer is eDTUs and max data size.

The best size for a pool depends on the aggregate resources needed for all databases in the pool. This involves determining the following:

- Maximum resources utilized by all databases in the pool (either maximum DTUs or maximum vCores depending on your choice of resourcing model).

- Maximum storage bytes utilized by all databases in the pool.
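Staggered peaks explain why a pool minimizes cost in this scenario. The pool's eDTUs only need to cover the highest *combined* usage at any one time, which can be far below the sum of each database's individual peak. The sketch below computes both figures plus total storage. The tenant names and usage numbers are hypothetical. Real pools are purchased in fixed eDTU and max data size tiers, so you would round up to the nearest available size.

```python
from typing import Dict, List, Tuple


def pool_requirements(usage: Dict[str, List[float]],
                      max_storage_gb: Dict[str, float]) -> Tuple[float, float, float]:
    """Return (peak aggregate eDTU, sum of per-database peaks, total storage GB).

    usage: per-database eDTU usage, one value per time interval; all lists
    must cover the same intervals in the same order.
    max_storage_gb: maximum storage each database uses.
    """
    # Sum across databases for each interval, then take the busiest interval.
    peak_aggregate = max(sum(interval) for interval in zip(*usage.values()))
    # What you would pay for if every database were sized for its own peak.
    sum_of_peaks = sum(max(series) for series in usage.values())
    total_storage = sum(max_storage_gb.values())
    return peak_aggregate, sum_of_peaks, total_storage


# Hypothetical tenants that peak in different quarters of the year.
usage = {
    "contoso":  [100, 10, 10, 10],
    "fabrikam": [10, 100, 10, 10],
    "tailwind": [10, 10, 100, 10],
}
storage = {"contoso": 50, "fabrikam": 20, "tailwind": 30}
print(pool_requirements(usage, storage))  # (120, 300, 100)
```

Here a pool sized for about 120 eDTUs and 100 GB serves all three tenants, while sizing each database separately for its own peak would need 300 DTUs in total.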

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool

A company manages several on-premises Microsoft SQL Server databases.

You need to migrate the databases to Microsoft Azure by using a backup process of Microsoft SQL Server.

Which data technology should you use?

Azure SQL Database single database

Azure SQL Data Warehouse

Azure Cosmos DB

Azure SQL Database Managed Instance

Answer is Azure SQL Database Managed Instance.

Managed instance is a new deployment option of Azure SQL Database, providing near 100% compatibility with the latest SQL Server on-premises (Enterprise Edition) Database Engine, providing a native virtual network (VNet) implementation that addresses common security concerns, and a business model favorable for on-premises SQL Server customers. The managed instance deployment model allows existing SQL Server customers to lift and shift their on-premises applications to the cloud with minimal application and database changes.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-managed-instance

The data engineering team manages Azure HDInsight clusters. The team spends a large amount of time creating and destroying clusters daily because most of the data pipeline processes run in minutes.

You need to implement a solution that deploys multiple HDInsight clusters with minimal effort.

What should you implement?

Azure Databricks

Azure Traffic Manager

Azure Resource Manager templates

Ambari web user interface

Answer is Azure Resource Manager templates.

A Resource Manager template makes it easy to create the following resources for your application in a single, coordinated operation:

- HDInsight clusters and their dependent resources (such as the default storage account).

- Other resources (such as Azure SQL Database to use Apache Sqoop).

In the template, you define the resources that are needed for the application. You also specify deployment parameters to input values for different environments. The template consists of JSON and expressions that you use to construct values for your deployment.
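To show how one template can deploy *several* clusters, the sketch below builds a skeleton template in Python. It uses the ARM `copy` loop with `copyIndex()` and a `clusterCount` parameter. This is not deployable as-is: the cluster `properties` object is deliberately left empty (a real template must supply settings such as cluster version, OS type, cluster definition, compute profile and storage profile). The parameter names are hypothetical, and the `apiVersion` value is an assumption you should check against the current HDInsight resource provider.

```python
import json


def hdinsight_template(cluster_count_default: int = 3) -> dict:
    """Skeleton ARM template that deploys N HDInsight clusters via a copy loop.

    Hypothetical parameters: clusterNamePrefix, clusterCount.
    'properties' is intentionally empty and must be completed before deployment.
    """
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "clusterNamePrefix": {"type": "string"},
            "clusterCount": {"type": "int", "defaultValue": cluster_count_default},
        },
        "resources": [
            {
                "type": "Microsoft.HDInsight/clusters",
                "apiVersion": "2021-06-01",  # assumed version; verify before use
                # copyIndex() yields 0, 1, 2, ... so each cluster gets a unique name.
                "name": "[concat(parameters('clusterNamePrefix'), copyIndex())]",
                "location": "[resourceGroup().location]",
                "copy": {"name": "clusterLoop", "count": "[parameters('clusterCount')]"},
                "properties": {},  # placeholder: cluster definition goes here
            }
        ],
    }


print(json.dumps(hdinsight_template(), indent=2))
```

Because the cluster count is a deployment parameter, the team can redeploy the same file each day with different parameter values instead of creating clusters by hand.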

References: https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-create-linux-clusters-arm-templates

A company is designing a hybrid solution to synchronize data from an on-premises Microsoft SQL Server database to Azure SQL Database.

You must perform an assessment of databases to determine whether data will move without compatibility issues. You need to perform the assessment.

Which tool should you use?

SQL Server Migration Assistant (SSMA)

Microsoft Assessment and Planning Toolkit

SQL Vulnerability Assessment (VA)

Azure SQL Data Sync

Data Migration Assistant (DMA)

Answer is Data Migration Assistant (DMA)

The Data Migration Assistant (DMA) helps you upgrade to a modern data platform by detecting compatibility issues that can impact database functionality in your new version of SQL Server or Azure SQL Database. DMA recommends performance and reliability improvements for your target environment and allows you to move your schema, data, and uncontained objects from your source server to your target server.

References:

DMA

https://docs.microsoft.com/en-us/sql/dma/dma-overview

Azure SQL Data Sync

https://docs.microsoft.com/en-us/azure/azure-sql/database/sql-data-sync-data-sql-server-sql-database

A company plans to use Azure SQL Database to support a mission-critical application.

The application must be highly available without performance degradation during maintenance windows. You need to implement the solution.

Which three technologies should you implement?

Premium service tier

Virtual Machine Scale Sets

Basic service tier

SQL Data Sync

Always On availability groups

Zone-redundant configuration

Answer is A, E, F (Premium service tier, Always On availability groups, Zone-redundant configuration)

A: The Premium/Business Critical service tier model is based on a cluster of database engine processes. This architectural model relies on the fact that there is always a quorum of available database engine nodes, so it has minimal performance impact on your workload even during maintenance activities.

E: In the Premium model, Azure SQL Database integrates compute and storage on a single node. High availability in this architectural model is achieved by replicating compute (the SQL Server Database Engine process) and storage (locally attached SSD) across a 4-node cluster, using technology similar to SQL Server Always On availability groups.

F: Zone-redundant configuration. By default, the quorum-set replicas for the local storage configurations are created in the same datacenter. With the introduction of Azure Availability Zones, you can place the different replicas in the quorum sets in different availability zones in the same region. To eliminate a single point of failure, the control ring is also duplicated across multiple zones as three gateway rings (GW).
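Why spreading replicas across zones removes the single point of failure can be shown with a toy model. Take the 4 replicas from the Premium model, place them either all in one datacenter (the default) or round-robin across three zones, and count how many survive the loss of any one zone. The round-robin placement and zone names are illustrative assumptions, not Azure's actual placement algorithm.

```python
from collections import Counter
from typing import List


def place_round_robin(replica_count: int, zones: List[str]) -> List[str]:
    """Assign each replica to a zone in turn (illustrative placement only)."""
    return [zones[i % len(zones)] for i in range(replica_count)]


def worst_case_survivors(placement: List[str]) -> int:
    """Fewest replicas still running after any single zone goes down."""
    per_zone = Counter(placement)
    return min(len(placement) - n for n in per_zone.values())


local = ["zone1"] * 4  # default: every replica in the same datacenter
zonal = place_round_robin(4, ["zone1", "zone2", "zone3"])
print(worst_case_survivors(local), worst_case_survivors(zonal))  # 0 2
```

With all replicas in one datacenter, a single outage takes down every copy. With the zone-redundant layout, at least two replicas remain to keep serving the database.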

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-high-availability