DP-203: Data Engineering on Microsoft Azure

Overview

ADatum Corporation is a retailer that sells products through two sales channels: retail stores and a website.

Existing Environment

ADatum has one database server that has Microsoft SQL Server 2016 installed. The server hosts three mission-critical databases named SALESDB, DOCDB, and REPORTINGDB.

SALESDB collects data from the stores and the website.

DOCDB stores documents that connect to the sales data in SALESDB. The documents are stored in two different JSON formats based on the sales channel.

REPORTINGDB stores reporting data and contains several columnstore indexes. A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Requirements

Planned Changes

ADatum plans to move the current data infrastructure to Azure. The new infrastructure has the following requirements:

- Migrate SALESDB and REPORTINGDB to an Azure SQL database.

- Migrate DOCDB to Azure Cosmos DB.

- The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytic process will perform aggregations that must be done continuously, without gaps, and without overlapping.

- As they arrive, all the sales documents in JSON format must be transformed into one consistent format.

- Azure Data Factory will replace the SSIS process of copying the data from SALESDB to REPORTINGDB.
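An aggregation that runs continuously, without gaps and without overlap, describes tumbling-window semantics, which Stream Analytics provides as TumblingWindow. The sketch below uses plain Python instead of the Stream Analytics query language and shows only the bucketing rule: each event goes into exactly one fixed-size window, found by flooring its timestamp. The event tuples and the 10-second window size are hypothetical.

```python
from collections import defaultdict


def tumbling_window_sums(events, window_seconds):
    """Sum event amounts per tumbling window.

    events: iterable of (timestamp_seconds, amount) pairs (hypothetical shape).
    Every event belongs to exactly one window [start, start + window_seconds),
    so the windows are contiguous (no gaps) and disjoint (no overlap).
    Windows that receive no events do not appear in the result.
    """
    sums = defaultdict(float)
    for ts, amount in events:
        window_start = (ts // window_seconds) * window_seconds
        sums[window_start] += amount
    return dict(sorted(sums.items()))


# Hypothetical sales events: (seconds since start, sale amount)
events = [(1, 10.0), (4, 5.0), (10, 7.5), (19, 2.5), (25, 1.0)]
print(tumbling_window_sums(events, 10))  # {0: 15.0, 10: 10.0, 20: 1.0}
```

Sliding or hopping windows would not fit this requirement, because they can place the same event in more than one window.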

The new Azure data infrastructure must meet the following technical requirements:

- Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

- SALESDB must be restorable to any given minute within the past three weeks.

- Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

- Missing indexes must be created automatically for REPORTINGDB.

- Disk IO, CPU, and memory usage must be monitored for SALESDB.

A company uses Azure Data Lake Storage Gen1 to store big data related to consumer behavior.

You need to implement logging.

Solution: Configure Azure Data Lake Storage diagnostics to store logs and metrics in a storage account.

Does the solution meet the goal?

Yes

No

Answer is Yes

Explanation:

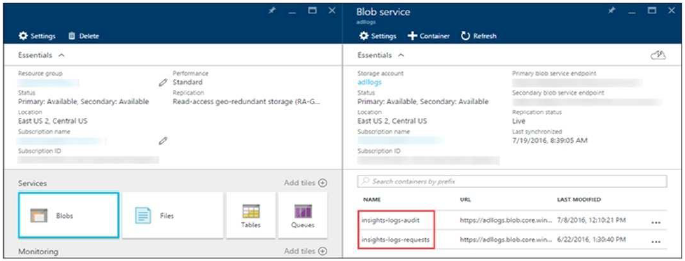

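Diagnostic settings for Data Lake Storage Gen1 can send logs and metrics to an Azure Storage account, so the solution meets the goal. To view the logs, open the blade of the Azure Storage account that is associated with Data Lake Storage Gen1 for logging, and then click Blobs. The Blob service blade lists two containers.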

References:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-diagnostic-logs

Current environment

Contoso relies on an extensive partner network for marketing, sales, and distribution. Contoso uses external companies that manufacture everything from the actual pharmaceutical to the packaging.

The majority of the company’s data resides in Microsoft SQL Server databases. Application databases fall into one of the following tiers:

The company has a reporting infrastructure that ingests data from local databases and partner services. Partner services consist of distributors, wholesalers, and retailers across the world. The company performs daily, weekly, and monthly reporting.

Requirements

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications while keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Tier 7 and Tier 8 partner access must be restricted to the database only.

In addition to default Azure backup behavior, Tier 4 and 5 databases must be on a backup strategy that performs a transaction log backup every hour, a differential backup of databases every day, and a full backup every week.

Backup strategies must be put in place for all other standalone Azure SQL Databases using Azure SQL-provided backup storage and capabilities.

Databases

Contoso requires their data estate to be designed and implemented in the Azure Cloud. Moving to the cloud must not inhibit access to or availability of data.

Databases:

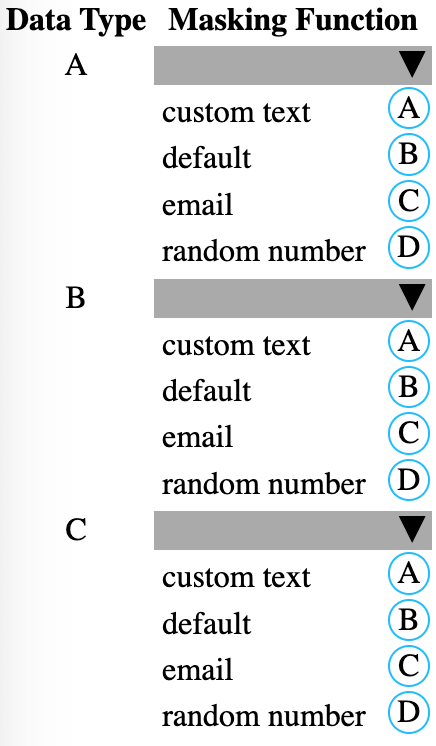

Tier 1 Database must implement data masking using the following masking logic:

Tier 2 databases must sync between branches and cloud databases and, in the event of conflicts, must be set up so that conflicts are won by on-premises databases.

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications while keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Security

A method of managing multiple databases in the cloud at the same time must be implemented to streamline data management and limit management access to only those who require it.

Monitoring

Monitoring must be set up on every database. Contoso and partners must receive performance reports as part of contractual agreements.

Tiers 6 through 8 must have unexpected resource storage usage immediately reported to data engineers.

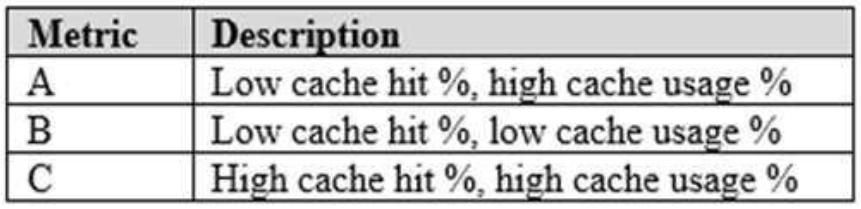

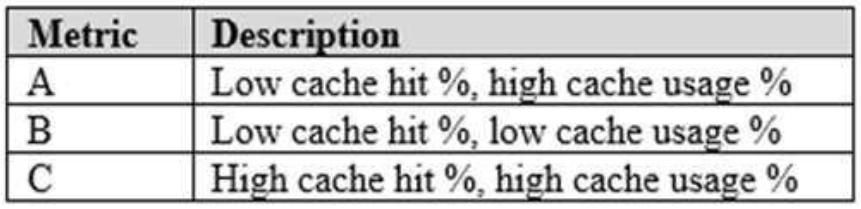

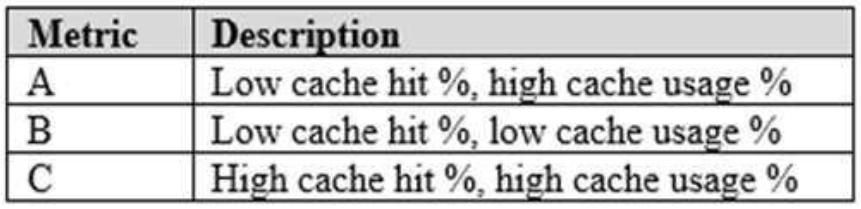

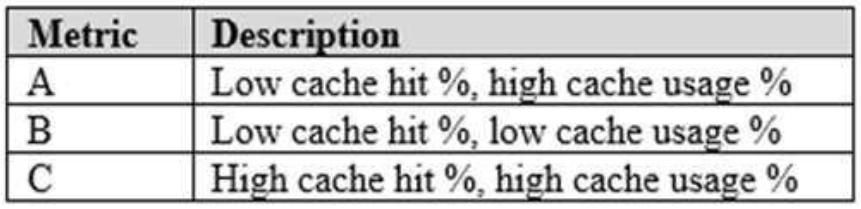

The Azure SQL Data Warehouse cache must be monitored when the database is being used. A dashboard monitoring key performance indicators (KPIs) indicated by traffic lights must be created and displayed based on the following metrics:

Existing Data Protection and Security compliance policies require that all certificates and keys be managed internally in on-premises storage.

You identify the following reporting requirements:

- Azure Data Warehouse must be used to gather and query data from multiple internal and external databases

- Azure Data Warehouse must be optimized to use data from a cache

- Reporting data aggregated for external partners must be stored in Azure Storage and be made available during regular business hours in the connecting regions

- Reporting strategies must be improved to a real-time or near-real-time reporting cadence to improve competitiveness and the general supply chain

- Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company’s main office

- Tier 10 reporting data must be stored in Azure Blobs

Issues

Team members identify the following issues:

- Both internal and external client applications run complex joins, equality searches, and group-by clauses. Because some systems are managed externally, the queries will not be changed or optimized by Contoso

- External partner organization data formats, types and schemas are controlled by the partner companies

- Internal and external database development staff resources are primarily SQL developers familiar with the Transact-SQL language.

- The size and amount of data have led to applications and reporting solutions not performing at required speeds

- Tier 7 and 8 data access is constrained to single endpoints managed by partners

- The company maintains several legacy client applications. Data for these applications remains isolated from other applications. This has led to hundreds of databases being provisioned on a per-application basis.

You need to implement diagnostic logging for Data Warehouse monitoring.

Which log should you use?

RequestSteps

DmsWorkers

SqlRequests

ExecRequests

Answer is SqlRequests

Scenario: The Azure SQL Data Warehouse cache must be monitored when the database is being used.

References:

https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-pdw-sql-requests-transact-sq

Current environment

Contoso relies on an extensive partner network for marketing, sales, and distribution. Contoso uses external companies that manufacture everything from the actual pharmaceutical to the packaging.

The majority of the company’s data resides in Microsoft SQL Server databases. Application databases fall into one of the following tiers:

The company has a reporting infrastructure that ingests data from local databases and partner services. Partner services consist of distributors, wholesalers, and retailers across the world. The company performs daily, weekly, and monthly reporting.

Requirements

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications while keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Tier 7 and Tier 8 partner access must be restricted to the database only.

In addition to default Azure backup behavior, Tier 4 and 5 databases must be on a backup strategy that performs a transaction log backup every hour, a differential backup of databases every day, and a full backup every week.

Backup strategies must be put in place for all other standalone Azure SQL Databases using Azure SQL-provided backup storage and capabilities.

Databases

Contoso requires their data estate to be designed and implemented in the Azure Cloud. Moving to the cloud must not inhibit access to or availability of data.

Databases:

Tier 1 Database must implement data masking using the following masking logic:

Tier 2 databases must sync between branches and cloud databases and, in the event of conflicts, must be set up so that conflicts are won by on-premises databases.

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications while keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Security

A method of managing multiple databases in the cloud at the same time must be implemented to streamline data management and limit management access to only those who require it.

Monitoring

Monitoring must be set up on every database. Contoso and partners must receive performance reports as part of contractual agreements.

Tiers 6 through 8 must have unexpected resource storage usage immediately reported to data engineers.

The Azure SQL Data Warehouse cache must be monitored when the database is being used. A dashboard monitoring key performance indicators (KPIs) indicated by traffic lights must be created and displayed based on the following metrics:

Existing Data Protection and Security compliance policies require that all certificates and keys be managed internally in on-premises storage.

You identify the following reporting requirements:

- Azure Data Warehouse must be used to gather and query data from multiple internal and external databases

- Azure Data Warehouse must be optimized to use data from a cache

- Reporting data aggregated for external partners must be stored in Azure Storage and be made available during regular business hours in the connecting regions

- Reporting strategies must be improved to a real-time or near-real-time reporting cadence to improve competitiveness and the general supply chain

- Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company’s main office

- Tier 10 reporting data must be stored in Azure Blobs

Issues

Team members identify the following issues:

- Both internal and external client applications run complex joins, equality searches, and group-by clauses. Because some systems are managed externally, the queries will not be changed or optimized by Contoso

- External partner organization data formats, types and schemas are controlled by the partner companies

- Internal and external database development staff resources are primarily SQL developers familiar with the Transact-SQL language.

- The size and amount of data have led to applications and reporting solutions not performing at required speeds

- Tier 7 and 8 data access is constrained to single endpoints managed by partners

- The company maintains several legacy client applications. Data for these applications remains isolated from other applications. This has led to hundreds of databases being provisioned on a per-application basis.

You need to set up monitoring for Tiers 6 through 8.

What should you configure?

extended events for average storage percentage that emails data engineers

an alert rule to monitor CPU percentage in databases that emails data engineers

an alert rule to monitor CPU percentage in elastic pools that emails data engineers

an alert rule to monitor storage percentage in databases that emails data engineers

an alert rule to monitor storage percentage in elastic pools that emails data engineers

Answer is an alert rule to monitor storage percentage in elastic pools that emails data engineers

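Scenario: Tiers 6 through 8 must have unexpected resource storage usage immediately reported to data engineers.

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Because these tiers run in elastic pools and the requirement concerns storage, the alert rule should monitor storage percentage at the elastic pool level rather than CPU percentage or individual databases.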
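An Azure Monitor metric alert rule evaluates a metric over a window, aggregates the samples, and fires its actions (such as an email action) when the result crosses a threshold. The Python sketch below models only that decision for an elastic pool storage-percentage metric. The sample readings, the 80 percent threshold, the choice of the maximum as the aggregation, and the `notify` callback standing in for the email action are all hypothetical.

```python
from typing import Callable, List


def evaluate_storage_alert(
    samples: List[float],
    threshold_percent: float,
    notify: Callable[[str], None],
) -> bool:
    """Notify if peak elastic pool storage percentage exceeds the threshold.

    samples: storage-percentage readings for one elastic pool within the
    evaluation window (hypothetical data). Returns True when the alert fires.
    """
    if not samples:
        return False  # no data in the window, so nothing to evaluate
    peak = max(samples)
    if peak > threshold_percent:
        # Stand-in for the alert's email action to data engineers
        notify(f"Elastic pool storage at {peak:.1f}% exceeds {threshold_percent:.1f}%")
        return True
    return False


sent = []
fired = evaluate_storage_alert([62.0, 71.5, 84.2], 80.0, sent.append)
print(fired, sent)
```

Evaluating the pool-level metric lets a single rule cover the storage shared by every database in the pool.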

Overview

ADatum Corporation is a retailer that sells products through two sales channels: retail stores and a website.

Existing Environment

ADatum has one database server that has Microsoft SQL Server 2016 installed. The server hosts three mission-critical databases named SALESDB, DOCDB, and REPORTINGDB.

SALESDB collects data from the stores and the website.

DOCDB stores documents that connect to the sales data in SALESDB. The documents are stored in two different JSON formats based on the sales channel.

REPORTINGDB stores reporting data and contains several columnstore indexes. A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Requirements

Planned Changes

ADatum plans to move the current data infrastructure to Azure. The new infrastructure has the following requirements:

- Migrate SALESDB and REPORTINGDB to an Azure SQL database.

- Migrate DOCDB to Azure Cosmos DB.

- The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytic process will perform aggregations that must be done continuously, without gaps, and without overlapping.

- As they arrive, all the sales documents in JSON format must be transformed into one consistent format.

- Azure Data Factory will replace the SSIS process of copying the data from SALESDB to REPORTINGDB.
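Transforming sales documents from two JSON formats into one consistent format can be sketched as a mapping function that detects the incoming format and emits a common schema. The scenario does not describe either format, so the field names below (`storeId`, `saleId`, `orderTotal`, `order`, `id`, `total`) and the output schema are hypothetical. In the described architecture the transformation would run as the documents arrive, not as standalone Python.

```python
import json


def normalize_sale(raw: str) -> dict:
    """Map either hypothetical channel format to one common schema."""
    doc = json.loads(raw)
    if "storeId" in doc:
        # Hypothetical retail-store format: flat fields
        return {
            "channel": "store",
            "sale_id": doc["saleId"],
            "amount": float(doc["orderTotal"]),
        }
    if "order" in doc:
        # Hypothetical website format: nested order object
        return {
            "channel": "web",
            "sale_id": doc["order"]["id"],
            "amount": float(doc["order"]["total"]),
        }
    raise ValueError("Unrecognized sales document format")


store_doc = '{"storeId": 7, "saleId": "S-100", "orderTotal": "19.99"}'
web_doc = '{"order": {"id": "W-200", "total": 5}}'
print(normalize_sale(store_doc))
print(normalize_sale(web_doc))
```

Raising an error for unrecognized documents, rather than guessing, keeps malformed input from silently entering the consistent format.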

The new Azure data infrastructure must meet the following technical requirements:

- Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

- SALESDB must be restorable to any given minute within the past three weeks.

- Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

- Missing indexes must be created automatically for REPORTINGDB.

- Disk IO, CPU, and memory usage must be monitored for SALESDB.

How should you monitor SALESDB to meet the technical requirements?

Query the sys.resource_stats dynamic management view.

Review the Query Performance Insights for SALESDB.

Query the sys.dm_os_wait_stats dynamic management view.

Review the auditing information of SALESDB.

Answer is Query the sys.resource_stats dynamic management view.

Scenario: Disk IO, CPU, and memory usage must be monitored for SALESDB.

The sys.resource_stats view returns historical data for CPU, IO, and DTU consumption. There is one row every 5 minutes for a database in an Azure logical SQL server if there is a change in the metrics.

Incorrect Answers:

Query Performance Insight helps you to quickly identify what your longest running queries are, how they change over time, and what waits are affecting them.

sys.dm_os_wait_stats: Specific types of wait times during query execution can indicate bottlenecks or stall points within the query. Similarly, high wait times or wait counts server-wide can indicate bottlenecks or hot spots in query interactions within the server instance. For example, lock waits indicate data contention by queries; page IO latch waits indicate slow IO response times; page latch update waits indicate incorrect file layout.

References:

https://dataplatformlabs.com/monitoring-azure-sql-database-with-sys-resource_stats/
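sys.resource_stats is queried with Transact-SQL. To keep the examples in one language, the Python sketch below only post-processes rows shaped like that view's output, computing the average and peak of each metric across the 5-minute intervals, which is the kind of summary used to check whether SALESDB is sized for its workload. It assumes each row carries `start_time`, `avg_cpu_percent`, and `avg_data_io_percent` columns; the sample values are made up.

```python
from datetime import datetime
from statistics import mean

# Hypothetical rows shaped like sys.resource_stats output (5-minute intervals)
rows = [
    {"start_time": datetime(2024, 1, 1, 9, 0), "avg_cpu_percent": 35.0, "avg_data_io_percent": 12.0},
    {"start_time": datetime(2024, 1, 1, 9, 5), "avg_cpu_percent": 55.0, "avg_data_io_percent": 20.0},
    {"start_time": datetime(2024, 1, 1, 9, 10), "avg_cpu_percent": 90.0, "avg_data_io_percent": 43.0},
]


def summarize(rows, metrics=("avg_cpu_percent", "avg_data_io_percent")):
    """Return (average, peak) for each metric across the sampled intervals."""
    return {
        m: (round(mean(r[m] for r in rows), 2), max(r[m] for r in rows))
        for m in metrics
    }


print(summarize(rows))
# {'avg_cpu_percent': (60.0, 90.0), 'avg_data_io_percent': (25.0, 43.0)}
```

Looking at both the average and the peak matters: a moderate average with sharp peaks can still indicate an undersized database.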

Overview

ADatum Corporation is a retailer that sells products through two sales channels: retail stores and a website.

Existing Environment

ADatum has one database server that has Microsoft SQL Server 2016 installed. The server hosts three mission-critical databases named SALESDB, DOCDB, and REPORTINGDB.

SALESDB collects data from the stores and the website.

DOCDB stores documents that connect to the sales data in SALESDB. The documents are stored in two different JSON formats based on the sales channel.

REPORTINGDB stores reporting data and contains several columnstore indexes. A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Requirements

Planned Changes

ADatum plans to move the current data infrastructure to Azure. The new infrastructure has the following requirements:

- Migrate SALESDB and REPORTINGDB to an Azure SQL database.

- Migrate DOCDB to Azure Cosmos DB.

- The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytic process will perform aggregations that must be done continuously, without gaps, and without overlapping.

- As they arrive, all the sales documents in JSON format must be transformed into one consistent format.

- Azure Data Factory will replace the SSIS process of copying the data from SALESDB to REPORTINGDB.

The new Azure data infrastructure must meet the following technical requirements:

- Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

- SALESDB must be restorable to any given minute within the past three weeks.

- Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

- Missing indexes must be created automatically for REPORTINGDB.

- Disk IO, CPU, and memory usage must be monitored for SALESDB.

You need to ensure that the missing indexes for REPORTINGDB are added.

What should you use?

SQL Database Advisor

extended events

Query Performance Insight

automatic tuning

Answer is automatic tuning

Automatic tuning options include create index, which identifies indexes that may improve the performance of your workload, creates those indexes, and automatically verifies that query performance has improved.
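The create-index option follows an apply, measure, and keep-or-revert cycle. In Azure SQL Database the option is enabled on the database itself (for example, with `ALTER DATABASE current SET AUTOMATIC_TUNING (CREATE_INDEX = ON)`), not implemented in client code. The Python sketch below models only the verification decision; the query durations and the 10 percent improvement threshold are illustrative assumptions, not the service's actual validation rules.

```python
from statistics import mean


def verify_index(before_ms, after_ms, min_improvement=0.10):
    """Decide whether to keep an automatically created index.

    before_ms / after_ms: query durations sampled before and after the index
    was created (hypothetical measurements). The index is kept only if the
    average duration improved by at least min_improvement (an assumed value).
    """
    baseline = mean(before_ms)
    improvement = (baseline - mean(after_ms)) / baseline
    return "keep" if improvement >= min_improvement else "revert"


print(verify_index([120, 130, 110], [60, 70, 65]))     # large improvement
print(verify_index([120, 130, 110], [118, 125, 112]))  # negligible change
```

The revert branch is what makes the feature safe to leave on: an index that does not help is removed instead of lingering as write overhead.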

Scenario:

REPORTINGDB stores reporting data and contains several columnstore indexes.

Migrate SALESDB and REPORTINGDB to an Azure SQL database.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-automatic-tuning

Current environment

Contoso relies on an extensive partner network for marketing, sales, and distribution. Contoso uses external companies that manufacture everything from the actual pharmaceutical to the packaging.

The majority of the company’s data resides in Microsoft SQL Server databases. Application databases fall into one of the following tiers:

The company has a reporting infrastructure that ingests data from local databases and partner services. Partner services consist of distributors, wholesalers, and retailers across the world. The company performs daily, weekly, and monthly reporting.

Requirements

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications while keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Tier 7 and Tier 8 partner access must be restricted to the database only.

In addition to default Azure backup behavior, Tier 4 and 5 databases must be on a backup strategy that performs a transaction log backup every hour, a differential backup of databases every day, and a full backup every week.

Backup strategies must be put in place for all other standalone Azure SQL Databases using Azure SQL-provided backup storage and capabilities.

Databases

Contoso requires their data estate to be designed and implemented in the Azure Cloud. Moving to the cloud must not inhibit access to or availability of data.

Databases:

Tier 1 Database must implement data masking using the following masking logic:

Tier 2 databases must sync between branches and cloud databases and, in the event of conflicts, must be set up so that conflicts are won by on-premises databases.

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications while keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Security

A method of managing multiple databases in the cloud at the same time must be implemented to streamline data management and limit management access to only those who require it.

Monitoring

Monitoring must be set up on every database. Contoso and partners must receive performance reports as part of contractual agreements.

Tiers 6 through 8 must have unexpected resource storage usage immediately reported to data engineers.

The Azure SQL Data Warehouse cache must be monitored when the database is being used. A dashboard monitoring key performance indicators (KPIs) indicated by traffic lights must be created and displayed based on the following metrics:

Existing Data Protection and Security compliance policies require that all certificates and keys be managed internally in on-premises storage.

You identify the following reporting requirements:

- Azure Data Warehouse must be used to gather and query data from multiple internal and external databases

- Azure Data Warehouse must be optimized to use data from a cache

- Reporting data aggregated for external partners must be stored in Azure Storage and be made available during regular business hours in the connecting regions

- Reporting strategies must be improved to a real-time or near-real-time reporting cadence to improve competitiveness and the general supply chain

- Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company’s main office

- Tier 10 reporting data must be stored in Azure Blobs

Issues

Team members identify the following issues:

- Both internal and external client applications run complex joins, equality searches, and group-by clauses. Because some systems are managed externally, the queries will not be changed or optimized by Contoso

- External partner organization data formats, types and schemas are controlled by the partner companies

- Internal and external database development staff resources are primarily SQL developers familiar with the Transact-SQL language.

- The size and amount of data have led to applications and reporting solutions not performing at required speeds

- Tier 7 and 8 data access is constrained to single endpoints managed by partners

- The company maintains several legacy client applications. Data for these applications remains isolated from other applications. This has led to hundreds of databases being provisioned on a per-application basis.

You need to mask tier 1 data. Which functions should you use?

A-D-C

A-B-C

B-B-A

B-C-A

C-D-A

C-B-B

D-C-C

D-D-B

Answer is B-C-A

A: Default

Full masking according to the data types of the designated fields.

For string data types, use XXXX or fewer Xs if the size of the field is less than 4 characters (char, nchar, varchar, nvarchar, text, ntext).

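B: Email

Masking method that exposes the first letter of an email address and the constant suffix ".com", in the form aXXX@XXXX.com.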

C: Custom text

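Custom string masking method that exposes the first and last letters and adds a custom padding string in the middle, in the form partial(prefix,[padding],suffix), where prefix and suffix set how many leading and trailing characters are exposed.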
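To show what each masking function returns, the three functions can be modeled in Python. This is a simplified sketch, not SQL Server's implementation: in the database, masks are declared on columns with Transact-SQL (for example, `ADD MASKED WITH (FUNCTION = 'partial(0,"XXX-XXX-",4)')`). The sample values, the use of the value's length in place of the column size in `default_mask`, and the short-value rule in `partial_mask` are assumptions.

```python
def default_mask(value):
    """Default(): full masking according to the data type (simplified).

    Strings become XXXX, or fewer Xs when shorter than 4 characters; this
    sketch uses the value's length as a stand-in for the column size.
    Numeric values become 0.
    """
    if isinstance(value, str):
        return "X" * min(len(value), 4)
    if isinstance(value, (int, float)):
        return 0
    raise TypeError("data type not covered by this sketch")


def email_mask(value: str) -> str:
    """Email(): expose the first letter plus a constant suffix (aXXX@XXXX.com)."""
    return value[:1] + "XXX@XXXX.com"


def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """partial(prefix,[padding],suffix): expose leading and trailing characters."""
    if prefix + suffix >= len(value):
        # Assumed simplification: expose nothing if the value is too short
        return padding
    tail = value[len(value) - suffix:] if suffix else ""
    return value[:prefix] + padding + tail


print(default_mask("Contoso"))                       # XXXX
print(default_mask(1234.5))                          # 0
print(email_mask("john@contoso.com"))                # jXXX@XXXX.com
print(partial_mask("5551234567", 0, "XXX-XXX-", 4))  # XXX-XXX-4567
```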

Scenario: Tier 1 Database must implement data masking using the following masking logic:

References:

https://docs.microsoft.com/en-us/sql/relational-databases/security/dynamic-data-masking

Background

Proseware, Inc. develops and manages a product named Poll Taker. The product is used for delivering public opinion polling and analysis.

Polling data comes from a variety of sources, including online surveys, house-to-house interviews, and booths at public events.

Polling data

Polling data is stored in one of two locations:

- An on-premises Microsoft SQL Server 2019 database named PollingData

- Azure Data Lake Gen 2

Data in Data Lake is queried by using PolyBase.

Poll metadata

Each poll has associated metadata with information about the poll including the date and number of respondents. The data is stored as JSON.

Phone-based polling

Security

- Phone-based poll data must only be uploaded by authorized users from authorized devices

- Contractors must not have access to any polling data other than their own

- Access to polling data must be set on a per-Active Directory user basis

Data migration and loading

- All data migration processes must use Azure Data Factory

- All data migrations must run automatically during non-business hours

- Data migrations must be reliable and retry when needed
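Data Factory activities support a retry policy, set through the `retry` and `retryIntervalInSeconds` activity policy properties, which is how migrations can retry when needed. The Python sketch below models only the retry semantics: it treats the count as the number of retries after the first attempt and waits a fixed interval between attempts. The flaky copy step is a hypothetical stand-in for a migration activity, and the interval is set to zero so the example runs instantly.

```python
import time


def run_with_retry(activity, retry=3, retry_interval_seconds=30):
    """Run activity once, then retry up to `retry` more times on failure.

    Returns (result, attempts). Re-raises the last error once retries are
    exhausted, so a persistent failure is still reported.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return activity(), attempts
        except Exception:
            if attempts > retry:
                raise  # retries exhausted: surface the failure
            time.sleep(retry_interval_seconds)


calls = {"n": 0}


def flaky_copy():
    """Hypothetical copy step that fails twice with a transient error, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient network error")
    return "copied"


result, attempts = run_with_retry(flaky_copy, retry=3, retry_interval_seconds=0)
print(result, attempts)  # copied 3
```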

Performance

After six months, raw polling data should be moved to a storage account. The storage must be available in the event of a regional disaster. The solution must minimize costs.

Deployments

- All deployments must be performed by using Azure DevOps. Deployments must use templates used in multiple environments

- No credentials or secrets should be used during deployments

Reliability

All services and processes must be resilient to a regional Azure outage.

Monitoring

All Azure services must be monitored by using Azure Monitor. On-premises SQL Server performance must be monitored.

You need to ensure phone-based polling data upload reliability requirements are met.

How should you configure monitoring?

| Setting | Value |
|---|---|
| Metric | |
| Aggregation | |

A-A

A-B

B-A

B-B

C-A

C-B

Answer is C-A

Box 1: FileCapacity

FileCapacity is the amount of storage used by the storage account’s File service in bytes.

Box 2: Avg

The aggregation type of the FileCapacity metric is Avg.
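The Avg aggregation averages the metric samples that fall within each time grain. The Python sketch below applies that idea to FileCapacity readings in bytes. The readings and the 1-hour grain are hypothetical, not real monitoring data.

```python
from collections import defaultdict
from datetime import datetime


def hourly_avg(samples):
    """Average (timestamp, bytes) samples per hour, mimicking an Avg aggregation."""
    buckets = defaultdict(list)
    for ts, value in samples:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(value)
    return {hour: sum(v) / len(v) for hour, v in sorted(buckets.items())}


# Hypothetical FileCapacity readings (bytes)
samples = [
    (datetime(2024, 1, 1, 10, 0), 1_000_000),
    (datetime(2024, 1, 1, 10, 30), 3_000_000),
    (datetime(2024, 1, 1, 11, 0), 4_000_000),
]
for hour, avg_bytes in hourly_avg(samples).items():
    print(hour.isoformat(), avg_bytes)
```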

Scenario:

All services and processes must be resilient to a regional Azure outage.

All Azure services must be monitored by using Azure Monitor. On-premises SQL Server performance must be monitored.

References:

https://docs.microsoft.com/en-us/azure/azure-monitor/platform/metrics-supported

General Overview

Litware, Inc. is an international car racing and manufacturing company that has 1,000 employees. Most employees are located in Europe. The company supports racing teams that compete in a worldwide racing series.

Physical Locations

Litware has two main locations: a main office in London, England, and a manufacturing plant in Berlin, Germany. During each race weekend, 100 engineers set up a remote portable office by using a VPN to connect to the datacentre in the London office. The portable office is set up and torn down in approximately 20 different countries each year.

Existing environment

Race Central

During race weekends, Litware uses a primary application named Race Central. Each car has several sensors that send real-time telemetry data to the London datacentre. The data is used for real-time tracking of the cars.

Race Central also sends batch updates to an application named Mechanical Workflow by using Microsoft SQL Server Integration Services (SSIS).

The telemetry data is sent to a MongoDB database. A custom application then moves the data to databases in SQL Server 2017. The telemetry data in MongoDB has more than 500 attributes. The application changes the attribute names when the data is moved to SQL Server 2017.

The database structure contains both OLAP and OLTP databases.

Mechanical Workflow

Mechanical Workflow is used to track changes and improvements made to the cars during their lifetime.

Currently, Mechanical Workflow runs on SQL Server 2017 as an OLAP system.

Mechanical Workflow has a table named Table1 that is 1 TB. Large aggregations are performed on a single column of Table1.

Requirements

Planned Changes

Litware is in the process of rearchitecting its data estate to be hosted in Azure. The company plans to decommission the London datacentre and move all its applications to an Azure datacentre.

Technical Requirements

Litware identifies the following technical requirements:

- Data collection for Race Central must be moved to Azure Cosmos DB and Azure SQL Database. The data must be written to the Azure datacentre closest to each race and must converge in the least amount of time.

- The query performance of Race Central must be stable, and the administrative time it takes to perform optimizations must be minimized.

- The datacentre for Mechanical Workflow must be moved to Azure SQL Data Warehouse.

- Transparent data encryption (TDE) must be enabled on all data stores, whenever possible.

- An Azure Data Factory pipeline must be used to move data from Cosmos DB to SQL Database for Race Central. If the data load takes longer than 20 minutes, configuration changes must be made to Data Factory.

- The telemetry data must migrate toward a solution that is native to Azure.

- The telemetry data must be monitored for performance issues. You must adjust the Cosmos DB Request Units per second (RU/s) to maintain a performance SLA while minimizing the cost of the RU/s.

During race weekends, visitors will be able to enter the remote portable offices. Litware is concerned that some proprietary information might be exposed. The company identifies the following data masking requirements for the Race Central data that will be stored in SQL Database:

- Only show the last four digits of the values in a column named SuspensionSprings.

- Only show a zero value for the values in a column named ShockOilWeight.
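These two rules correspond to SQL Database dynamic data masking functions: `partial(prefix, padding, suffix)` exposes a fixed number of leading and trailing characters, and `default()` returns 0 for numeric columns. The Python below only emulates that behavior to show what a non-privileged user would see; it does not connect to SQL Database. The empty prefix, the `XXXX` padding string, the handling of short values, and the sample row are all assumptions.

```python
from decimal import Decimal
from typing import Union

Number = Union[int, float, Decimal]


def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Emulate partial(prefix, padding, suffix): keep `prefix` leading and
    `suffix` trailing characters and replace the middle with `padding`.

    Assumption: values no longer than prefix + suffix are replaced entirely
    by the padding, so a short value is never shown in full.
    """
    if len(value) <= prefix + suffix:
        return padding
    # Guard: value[-0:] would return the whole string.
    tail = value[-suffix:] if suffix > 0 else ""
    return value[:prefix] + padding + tail


def mask_default_numeric(value: Number) -> int:
    """Emulate default() on a numeric column: the masked value is always 0."""
    return 0


# Hypothetical sample row from the Race Central data.
row = {"SuspensionSprings": "SPR-2019-88431", "ShockOilWeight": 7.5}

masked = {
    # Show only the last four characters of SuspensionSprings.
    "SuspensionSprings": mask_partial(row["SuspensionSprings"], 0, "XXXX", 4),
    # Show a zero value for ShockOilWeight.
    "ShockOilWeight": mask_default_numeric(row["ShockOilWeight"]),
}
print(masked)
```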

You are building the data store solution for Mechanical Workflow.

How should you configure Table1?

A-A

A-B

A-C

B-B

B-C

C-B

C-C

C-D

Answer is A-B

Table Type: Hash distributed.

Hash-distributed tables improve query performance on large fact tables.
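A dedicated SQL pool spreads every table across 60 distributions. In a hash-distributed table, each row goes to the distribution selected by hashing its distribution column, so a large aggregation can run as 60 partial aggregates that are combined at the end. The sketch below simulates that idea; `zlib.crc32` is a stand-in for the engine's internal hash, and the `CarId` distribution column and sample rows are hypothetical.

```python
import zlib
from collections import defaultdict

DISTRIBUTIONS = 60  # number of distributions in a dedicated SQL pool


def distribution_for(key: str) -> int:
    """Map a distribution-column value to a distribution number.

    crc32 is only a stand-in; the engine uses its own deterministic hash.
    """
    return zlib.crc32(key.encode("utf-8")) % DISTRIBUTIONS


def distributed_sum(rows, key_col: str, value_col: str) -> float:
    """Sum value_col as one partial sum per distribution, then combine them."""
    partials = defaultdict(float)
    for row in rows:
        partials[distribution_for(row[key_col])] += row[value_col]
    # In the engine, each partial would be computed in parallel on its own distribution.
    return sum(partials.values())


# Hypothetical rows for Table1, distributed on CarId.
rows = [{"CarId": f"car-{i % 25}", "Laps": i % 7} for i in range(1000)]

total = distributed_sum(rows, "CarId", "Laps")
print(total, sum(r["Laps"] for r in rows))
```

Because the same key always lands in the same distribution, a column with many distinct, evenly used values spreads the data (and the aggregation work) evenly.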

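Index type: Clustered columnstore

Clustered columnstore is the default index type for tables in a dedicated SQL pool. It stores data column by column with high compression, so an aggregation over a single column of a 1 TB table reads only that column's data instead of entire rows.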

Scenario:

Mechanical Workflow has a table named Table1 that is 1 TB. Large aggregations are performed on a single column of Table1.
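The chosen configuration corresponds to a `CREATE TABLE` statement with the `DISTRIBUTION = HASH(...)` and `CLUSTERED COLUMNSTORE INDEX` table options. Python is used here only to assemble the T-SQL text. The column names, data types, and the choice of `CarId` as the distribution column are hypothetical, because the scenario does not describe Table1's schema.

```python
from __future__ import annotations


def create_table_ddl(table: str, columns: dict[str, str], hash_column: str) -> str:
    """Build CREATE TABLE T-SQL for a hash-distributed table with a
    clustered columnstore index in a dedicated SQL pool."""
    if hash_column not in columns:
        raise ValueError(f"{hash_column!r} is not a column of {table}")
    column_lines = ",\n".join(
        f"    [{name}] {sql_type}" for name, sql_type in columns.items()
    )
    return (
        f"CREATE TABLE {table}\n"
        f"(\n{column_lines}\n)\n"
        "WITH\n"
        "(\n"
        f"    DISTRIBUTION = HASH([{hash_column}]),\n"
        "    CLUSTERED COLUMNSTORE INDEX\n"
        ");"
    )


# Hypothetical schema for Table1.
ddl = create_table_ddl(
    "dbo.Table1",
    {
        "CarId": "INT NOT NULL",
        "PartId": "INT NOT NULL",
        "ChangeDate": "DATE NOT NULL",
        "Cost": "DECIMAL(18, 2) NOT NULL",
    },
    hash_column="CarId",
)
print(ddl)
```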

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-tables-distribute

General Overview

Litware, Inc., is an international car racing and manufacturing company that has 1,000 employees. Most employees are located in Europe. The company supports racing teams that compete in a worldwide racing series.

Physical Locations

Litware has two main locations: a main office in London, England, and a manufacturing plant in Berlin, Germany. During each race weekend, 100 engineers set up a remote portable office by using a VPN to connect to the datacentre in the London office. The portable office is set up and torn down in approximately 20 different countries each year.

Existing environment

Race Central

During race weekends, Litware uses a primary application named Race Central. Each car has several sensors that send real-time telemetry data to the London datacentre. The data is used for real-time tracking of the cars.

Race Central also sends batch updates to an application named Mechanical Workflow by using Microsoft SQL Server Integration Services (SSIS).

The telemetry data is sent to a MongoDB database. A custom application then moves the data to databases in SQL Server 2017. The telemetry data in MongoDB has more than 500 attributes. The application changes the attribute names when the data is moved to SQL Server 2017.

The database structure contains both OLAP and OLTP databases.

Mechanical Workflow

Mechanical Workflow is used to track changes and improvements made to the cars during their lifetime.

Currently, Mechanical Workflow runs on SQL Server 2017 as an OLAP system.

Mechanical Workflow has a table named Table1 that is 1 TB. Large aggregations are performed on a single column of Table1.

Requirements

Planned Changes

Litware is in the process of rearchitecting its data estate to be hosted in Azure. The company plans to decommission the London datacentre and move all its applications to an Azure datacentre.

Technical Requirements

Litware identifies the following technical requirements:

- Data collection for Race Central must be moved to Azure Cosmos DB and Azure SQL Database. The data must be written to the Azure datacentre closest to each race and must converge in the least amount of time.

- The query performance of Race Central must be stable, and the administrative time it takes to perform optimizations must be minimized.

- The database for Mechanical Workflow must be moved to Azure SQL Data Warehouse.

- Transparent data encryption (TDE) must be enabled on all data stores, whenever possible.

- An Azure Data Factory pipeline must be used to move data from Cosmos DB to SQL Database for Race Central. If the data load takes longer than 20 minutes, configuration changes must be made to Data Factory.

- The telemetry data must migrate toward a solution that is native to Azure.

- The telemetry data must be monitored for performance issues. You must adjust the Cosmos DB Request Units per second (RU/s) to maintain a performance SLA while minimizing the cost of the RU/s.

During race weekends, visitors will be able to enter the remote portable offices. Litware is concerned that some proprietary information might be exposed. The company identifies the following data masking requirements for the Race Central data that will be stored in SQL Database:

- Only show the last four digits of the values in a column named SuspensionSprings.

- Only show a zero value for the values in a column named ShockOilWeight.

On which data store should you configure TDE to meet the technical requirements?

Azure Cosmos DB

Azure Synapse Analytics

Azure SQL Database

Answer is Azure Synapse Analytics

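Transparent data encryption (TDE) must be enabled on all data stores, whenever possible. The database for Mechanical Workflow must be moved to Azure Synapse Analytics (formerly Azure SQL Data Warehouse), where TDE on a dedicated SQL pool is not enabled by default and must be turned on. Azure SQL Database enables TDE by default on newly created databases, and Azure Cosmos DB encrypts data at rest automatically and has no TDE setting to configure.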
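The elimination can be written down as a small lookup. Azure SQL Database turns TDE on by default for new databases, Azure Cosmos DB encrypts data at rest automatically without a TDE setting, and a dedicated SQL pool in Azure Synapse Analytics needs TDE switched on. This sketch encodes those three facts as data; the database name `MechanicalWorkflow` is hypothetical, and the generated `ALTER DATABASE ... SET ENCRYPTION ON` statement is meant to be run while connected to the `master` database.

```python
from typing import NamedTuple


class AtRestEncryption(NamedTuple):
    supports_tde: bool       # does the service expose a TDE setting at all?
    tde_on_by_default: bool  # is TDE already on for newly created databases?


# Encryption-at-rest behavior of the three candidate stores.
# Cosmos DB encrypts at rest automatically, but not through a TDE setting.
STORES = {
    "Azure Cosmos DB": AtRestEncryption(supports_tde=False, tde_on_by_default=False),
    "Azure SQL Database": AtRestEncryption(supports_tde=True, tde_on_by_default=True),
    "Azure Synapse Analytics": AtRestEncryption(supports_tde=True, tde_on_by_default=False),
}


def stores_requiring_tde_configuration() -> list:
    """Stores where TDE is possible but has to be enabled explicitly."""
    return [
        name
        for name, enc in STORES.items()
        if enc.supports_tde and not enc.tde_on_by_default
    ]


def enable_tde_statement(database: str) -> str:
    """T-SQL that enables TDE on the given database."""
    return f"ALTER DATABASE [{database}] SET ENCRYPTION ON;"


print(stores_requiring_tde_configuration())
print(enable_tde_statement("MechanicalWorkflow"))  # hypothetical database name
```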

Background

Proseware, Inc., develops and manages a product named Poll Taker. The product is used for delivering public opinion polling and analysis.

Polling data comes from a variety of sources, including online surveys, house-to-house interviews, and booths at public events.

Polling data

Polling data is stored in one of the two locations:

- An on-premises Microsoft SQL Server 2019 database named PollingData

- Azure Data Lake Gen 2

Data in Data Lake is queried by using PolyBase

Poll metadata

Each poll has associated metadata with information about the poll including the date and number of respondents. The data is stored as JSON.

Phone-based polling

Security

- Phone-based poll data must only be uploaded by authorized users from authorized devices

- Contractors must not have access to any polling data other than their own

- Access to polling data must be set on a per-Active Directory user basis

Data migration and loading

- All data migration processes must use Azure Data Factory

- All data migrations must run automatically during non-business hours

- Data migrations must be reliable and retry when needed
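Azure Data Factory expresses the last two requirements in its JSON definitions: a schedule trigger's `recurrence` can pin runs to off-hours, and each activity's `policy` carries `retry` and `retryIntervalInSeconds` settings. The Python below assembles those fragments as dictionaries. The 02:00 UTC run time, start date, 12-hour timeout, three retries, 60-second interval, and the trigger and pipeline names are hypothetical values, not requirements from the scenario.

```python
import json


def schedule_trigger(name: str, pipeline: str, hour_utc: int) -> dict:
    """Daily ADF schedule trigger that starts `pipeline` at hour_utc:00 UTC."""
    if not 0 <= hour_utc <= 23:
        raise ValueError("hour_utc must be between 0 and 23")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": "2024-01-01T00:00:00Z",  # hypothetical start date
                    "timeZone": "UTC",
                    "schedule": {"hours": [hour_utc], "minutes": [0]},
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline,
                    }
                }
            ],
        },
    }


def retry_policy(retries: int, interval_seconds: int) -> dict:
    """Activity policy: retry a failed activity up to `retries` times."""
    return {
        "timeout": "0.12:00:00",  # hypothetical 12-hour limit (d.hh:mm:ss)
        "retry": retries,
        "retryIntervalInSeconds": interval_seconds,
    }


trigger = schedule_trigger("NightlyPollingMigration", "CopyPollingData", hour_utc=2)
policy = retry_policy(retries=3, interval_seconds=60)
print(json.dumps(trigger, indent=2))
print(json.dumps(policy, indent=2))
```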

Performance

After six months, raw polling data should be moved to a storage account. The storage must be available in the event of a regional disaster. The solution must minimize costs.

Deployments

- All deployments must be performed by using Azure DevOps. Deployments must use templates that can be reused across multiple environments

- No credentials or secrets should be used during deployments
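A template reused across environments usually exposes only the environment-specific parts as parameters and contains no keys or passwords. The Python below emits a minimal Azure Resource Manager (ARM) template for a StorageV2 account with a hierarchical namespace (Data Lake Storage Gen2) and RA-GRS replication. The `environment` parameter, its allowed values, the `pollingdata` naming convention, and the `apiVersion` value are assumptions for illustration.

```python
import json


def polling_storage_template(api_version: str = "2022-09-01") -> dict:
    """Minimal ARM template for a Data Lake Storage Gen2 account.

    The same template is deployed to every environment; only the value of
    the `environment` parameter changes. No keys or secrets appear in it.
    """
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "environment": {"type": "string", "allowedValues": ["dev", "test", "prod"]},
            "location": {"type": "string", "defaultValue": "[resourceGroup().location]"},
        },
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": api_version,
                # Hypothetical naming convention; storage account names must be
                # globally unique, lowercase letters and digits only.
                "name": "[concat('pollingdata', parameters('environment'))]",
                "location": "[parameters('location')]",
                "kind": "StorageV2",
                "sku": {"name": "Standard_RAGRS"},
                "properties": {"isHnsEnabled": True},
            }
        ],
    }


print(json.dumps(polling_storage_template(), indent=2))
```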

Reliability

All services and processes must be resilient to a regional Azure outage.

Monitoring

All Azure services must be monitored by using Azure Monitor. On-premises SQL Server performance must be monitored.

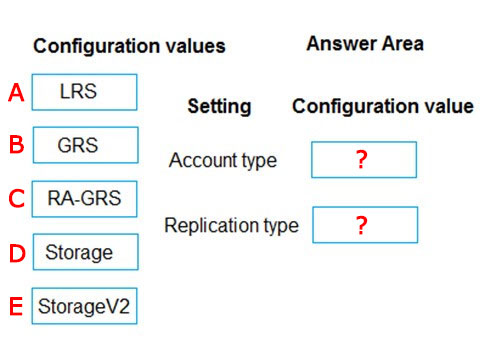

You need to provision the polling data storage account.

How should you configure the storage account?

A - B

B - D

C - A

D - B

E - C

A - D

B - E

C - B

Answer is E - C

Account type: StorageV2

You must create new storage accounts as type StorageV2 (general-purpose V2) to take advantage of Data Lake Storage Gen2 features.

Scenario: Polling data is stored in one of the two locations:

- An on-premises Microsoft SQL Server 2019 database named PollingData

- Azure Data Lake Gen 2

Data in Data Lake is queried by using PolyBase

Replication type: RA-GRS

Scenario: All services and processes must be resilient to a regional Azure outage.

Geo-redundant storage (GRS) is designed to provide at least 99.99999999999999% (16 9's) durability of objects over a given year by replicating your data to a secondary region that is hundreds of miles away from the primary region. If your storage account has GRS enabled, then your data is durable even in the case of a complete regional outage or a disaster in which the primary region isn't recoverable.

If you opt for GRS, you have two related options to choose from:

- GRS replicates your data to another data center in a secondary region, but that data is available to be read only if Microsoft initiates a failover from the primary to secondary region.

- Read-access geo-redundant storage (RA-GRS) is based on GRS. RA-GRS replicates your data to another data center in a secondary region, and also provides you with the option to read from the secondary region. With RA-GRS, you can read from the secondary region regardless of whether Microsoft initiates a failover from the primary to secondary region.
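With RA-GRS, the secondary copy is readable through a separate endpoint whose host name adds a `-secondary` suffix to the account name. The helper below only builds those URLs; `pollingdataprod` is a hypothetical account name, and `dfs` is the endpoint that Data Lake Storage Gen2 clients use.

```python
from __future__ import annotations


def storage_endpoints(account: str, service: str = "dfs") -> tuple[str, str]:
    """Return (primary, secondary) endpoints for an RA-GRS storage account.

    service is "blob" or "dfs"; the secondary endpoint is read-only.
    """
    if service not in ("blob", "dfs"):
        raise ValueError("service must be 'blob' or 'dfs'")
    primary = f"https://{account}.{service}.core.windows.net"
    secondary = f"https://{account}-secondary.{service}.core.windows.net"
    return primary, secondary


primary, secondary = storage_endpoints("pollingdataprod")
print(primary)
print(secondary)
```

A reader can fall back to the secondary URL during a regional outage without waiting for a failover, which is the practical difference between RA-GRS and GRS described above.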

References:

https://docs.microsoft.com/bs-cyrl-ba/azure/storage/blobs/data-lake-storage-quickstart-create-account

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy-grs