DP-203: Data Engineering on Microsoft Azure

You have an Azure data solution that contains an Azure SQL data warehouse named DW1.

Several users execute ad hoc queries against DW1 concurrently.

You regularly perform automated data loads to DW1.

You need to ensure that the automated data loads have enough memory available to complete quickly and successfully when the ad hoc queries run.

What should you do?

Hash distribute the large fact tables in DW1 before performing the automated data loads.

Assign a larger resource class to the automated data load queries.

Create sampled statistics for every column in each table of DW1.

Assign a smaller resource class to the automated data load queries.

Answer is Assign a larger resource class to the automated data load queries.

To ensure the loading user has enough memory to achieve maximum compression rates, use loading users that are a member of a medium or large resource class.
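As a rough illustration, a resource class in a dedicated SQL pool is assigned by adding the loading user to the matching database role. The sketch below only builds the T-SQL text and does not connect to anything; the user name `LoadUser` and the helper name are hypothetical, while `largerc` is one of the built-in dynamic resource classes.

```python
# Built-in dynamic resource classes, from smallest to largest memory grant.
DYNAMIC_RESOURCE_CLASSES = ("smallrc", "mediumrc", "largerc", "xlargerc")


def assign_resource_class_sql(user: str, resource_class: str) -> str:
    """Return the T-SQL that adds a user to a resource class role."""
    if resource_class not in DYNAMIC_RESOURCE_CLASSES:
        raise ValueError(f"unknown resource class: {resource_class}")
    # Resource classes are database roles, so membership is granted
    # with the sp_addrolemember procedure.
    return f"EXEC sp_addrolemember '{resource_class}', '{user}';"


# 'LoadUser' is a hypothetical name for the account that runs the loads.
print(assign_resource_class_sql("LoadUser", "largerc"))
```

A larger class gives each load query more memory but lowers the number of queries that can run concurrently, so it is usually assigned to a dedicated loading account rather than to the ad hoc users.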

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

You have an Azure subscription that contains an Azure Storage account.

You plan to implement changes to a data storage solution to meet regulatory and compliance standards.

Every day, Azure needs to identify and delete blobs that were NOT modified during the last 100 days.

Solution: You apply an Azure policy that tags the storage account.

Does this meet the goal?

Yes

No

Answer is No

Tagging the storage account through Azure Policy does not delete any blobs. Instead, apply an Azure Blob storage lifecycle policy.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-management-concepts?tabs=azure-portal

You have an Azure subscription that contains an Azure Storage account.

You plan to implement changes to a data storage solution to meet regulatory and compliance standards.

Every day, Azure needs to identify and delete blobs that were NOT modified during the last 100 days.

Solution: You apply an expired tag to the blobs in the storage account.

Does this meet the goal?

Yes

No

Answer is No

A tag on a blob does not delete the blob by itself. Instead, apply an Azure Blob storage lifecycle policy.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-management-concepts?tabs=azure-portal

You have an Azure subscription that contains an Azure Storage account.

You plan to implement changes to a data storage solution to meet regulatory and compliance standards.

Every day, Azure needs to identify and delete blobs that were NOT modified during the last 100 days.

Solution: You apply an Azure Blob storage lifecycle policy.

Does this meet the goal?

Yes

No

Answer is Yes

Azure Blob storage lifecycle management offers a rich, rule-based policy for GPv2 and Blob storage accounts. Use the policy to transition your data to the appropriate access tiers or to expire it at the end of its lifecycle.

The lifecycle management policy lets you:

- Transition blobs to a cooler storage tier (hot to cool, hot to archive, or cool to archive) to optimize for performance and cost

- Delete blobs at the end of their lifecycles

- Define rules to be run once per day at the storage account level

- Apply rules to containers or a subset of blobs (using prefixes as filters)
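For the 100-day requirement in this question, a lifecycle rule could look roughly like the policy built below. This is a minimal sketch: the rule name `delete-stale-blobs` and the helper function are hypothetical, and the block blob filter is an assumption about which blobs should be covered.

```python
import json


def delete_after_days_policy(days: int, rule_name: str = "delete-stale-blobs") -> dict:
    """Build a lifecycle management policy that deletes unmodified blobs."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": rule_name,  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "actions": {
                        "baseBlob": {
                            # Delete once the last modification is older
                            # than the given number of days.
                            "delete": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                    # Assumption: only block blobs are in scope.
                    "filters": {"blobTypes": ["blockBlob"]},
                },
            }
        ]
    }


policy = delete_after_days_policy(100)
print(json.dumps(policy, indent=2))
```

The printed JSON is the kind of document that can be supplied as the storage account's lifecycle management policy; the platform then evaluates the rule on its own schedule, with no pipeline or tagging step required.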

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-management-concepts?tabs=azure-portal

You have an Azure subscription that contains an Azure Storage account.

You plan to implement changes to a data storage solution to meet regulatory and compliance standards.

Every day, Azure needs to identify and delete blobs that were NOT modified during the last 100 days.

Solution: You schedule an Azure Data Factory pipeline.

Does this meet the goal?

Yes

No

Answer is No

Instead, apply an Azure Blob storage lifecycle policy.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-management-concepts?tabs=azure-portal

You have a table named SalesFact in an Azure SQL data warehouse. SalesFact contains sales data from the past 36 months and has the following characteristics:

- Is partitioned by month

- Contains one billion rows

- Has clustered columnstore indexes

Which three actions should you perform in sequence in a stored procedure?

| Option | Action |
| --- | --- |
| A | Create an empty table named SalesFact_Work that has the same schema as SalesFact. |
| B | Drop the SalesFact_Work table. |
| C | Copy the data to a new table by using CREATE TABLE AS SELECT (CTAS). |
| D | Truncate the partition containing the stale data. |
| E | Switch the partition containing the stale data from SalesFact to SalesFact_Work. |
| F | Execute a DELETE statement where the value in the Date column is more than 35 months ago. |

A-B-C

B-A-E

C-D-B

D-B-A

E-F-A

F-B-C

A-E-B

B-D-F

Answer is A-E-B

Step 1: Create an empty table named SalesFact_Work that has the same schema as SalesFact.

Step 2: Switch the partition containing the stale data from SalesFact to SalesFact_Work.

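SQL Data Warehouse supports partition splitting, merging, and switching. To switch partitions between two tables, you must ensure that the partitions align on their respective boundaries and that the table definitions match. Loading data into partitions with partition switching is a convenient way to stage new data in a table that is not visible to users and then switch in the new data. The same mechanism works in reverse here: switching the stale partition out to SalesFact_Work is a metadata operation, so it removes the data much faster than a DELETE statement would.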

Step 3: Drop the SalesFact_Work table.
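The three steps can be sketched as the T-SQL that a stored procedure might run. The helper below only assembles the statement text. The distribution column, partition column, and boundary values passed in `with_options` are hypothetical, and creating the empty work table through a CTAS that selects no rows (`WHERE 1 = 2`) is one possible way to get a matching, partition-aligned schema, not necessarily what the exam intends.

```python
def stale_partition_cleanup_sql(
    table: str, work_table: str, partition_number: int, with_options: str
) -> list:
    """Return the three T-SQL statements in execution order."""
    return [
        # Step 1: empty table with the same schema (the predicate matches no rows).
        f"CREATE TABLE {work_table} WITH ({with_options}) "
        f"AS SELECT * FROM {table} WHERE 1 = 2;",
        # Step 2: metadata-only move of the stale partition.
        f"ALTER TABLE {table} SWITCH PARTITION {partition_number} "
        f"TO {work_table} PARTITION {partition_number};",
        # Step 3: dropping the work table discards the stale rows.
        f"DROP TABLE {work_table};",
    ]


# Hypothetical table options; both tables must share partition boundaries.
options = (
    "DISTRIBUTION = HASH(ProductKey), CLUSTERED COLUMNSTORE INDEX, "
    "PARTITION (OrderDateKey RANGE RIGHT FOR VALUES (20200101, 20200201))"
)
for statement in stale_partition_cleanup_sql(
    "dbo.SalesFact", "dbo.SalesFact_Work", 1, options
):
    print(statement)
```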

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-tables-partition

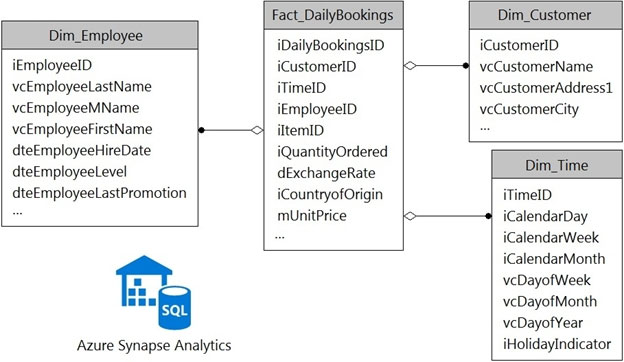

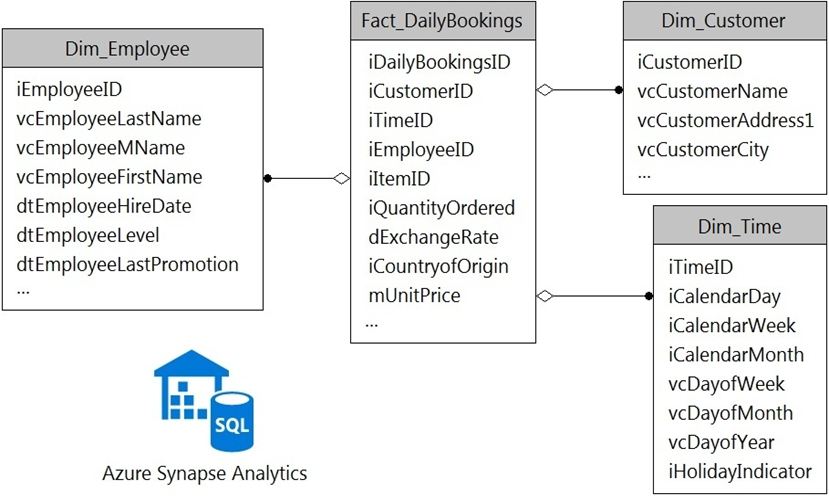

You have a data model that you plan to implement in a data warehouse in Azure Synapse Analytics as shown in the following exhibit.

All the dimension tables will be less than 2 GB after compression, and the fact table will be approximately 6 TB.

Which type of table should you use for each table?

Box 1: Replicated

Replicated tables are ideal for small star-schema dimension tables, because the fact table is often distributed on a column that is not compatible with the connected dimension tables. If this case applies to your schema, consider changing small dimension tables currently implemented as round-robin to replicated.

Box 2: Replicated

Box 3: Replicated

Box 4: Hash-distributed

For fact tables, use hash distribution with a clustered columnstore index. Performance improves when two hash-distributed tables are joined on the same distribution column.

Reference:

https://azure.microsoft.com/en-us/updates/reduce-data-movement-and-make-your-queries-more-efficient-with-the-general-availability-of-replicated-tables/

https://azure.microsoft.com/en-us/blog/replicated-tables-now-generally-available-in-azure-sql-data-warehouse/

You have an Azure Data Lake Storage Gen2 container.

Data is ingested into the container, and then transformed by a data integration application. The data is NOT modified after that. Users can read files in the container but cannot modify the files.

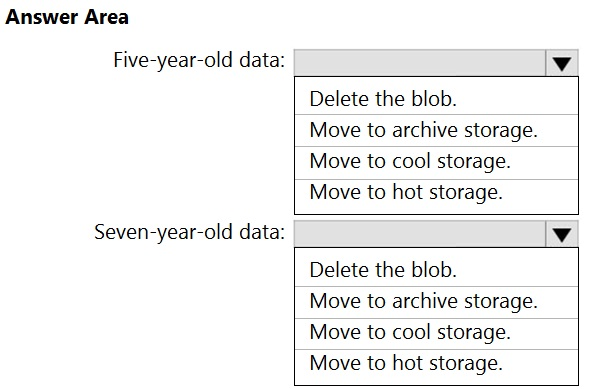

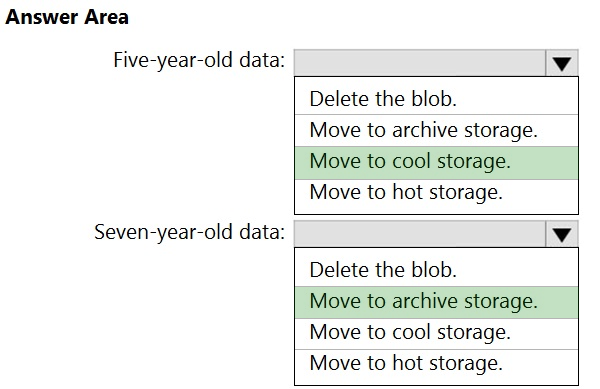

You need to design a data archiving solution that meets the following requirements:

- New data is accessed frequently and must be available as quickly as possible.

- Data that is older than five years is accessed infrequently but must be available within one second when requested.

- Data that is older than seven years is NOT accessed. After seven years, the data must be persisted at the lowest cost possible.

- Costs must be minimized while maintaining the required availability.

How should you manage the data?

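Box 1: Move to cool storage

Cool - Optimized for storing data that is infrequently accessed and stored for at least 30 days. The data stays online, so it can still be read within the one-second requirement.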

Box 2: Move to archive storage

Archive - Optimized for storing data that is rarely accessed and stored for at least 180 days with flexible latency requirements, on the order of hours.

The following table shows a comparison of premium performance block blob storage, and the hot, cool, and archive access tiers.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-storage-tiers
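The tiering decision in this question reduces to a comparison on the age of the data. The function below is only an illustration of that reasoning: the function name is hypothetical, and using the hot tier for new data is an assumption drawn from the "accessed frequently" requirement.

```python
def choose_access_tier(age_years: float) -> str:
    """Pick the cheapest tier that still meets the access requirement."""
    if age_years > 7:
        # Never accessed: lowest storage cost, retrieval can take hours.
        return "archive"
    if age_years > 5:
        # Infrequent access, but still online and readable within a second.
        return "cool"
    # Assumption: new, frequently accessed data stays in the hot tier.
    return "hot"


for age in (1, 6, 8):
    print(age, choose_access_tier(age))
```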

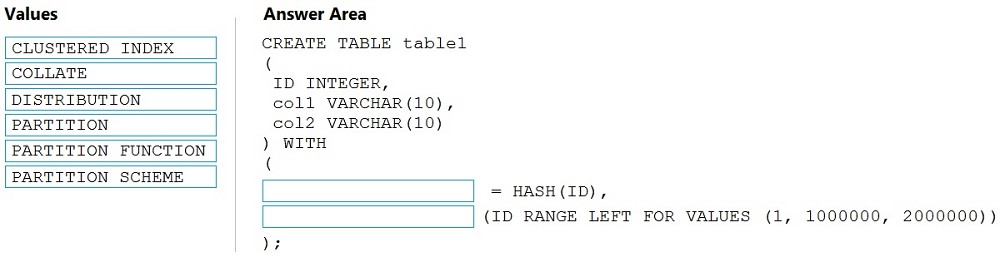

You need to create a partitioned table in an Azure Synapse Analytics dedicated SQL pool.

How should you complete the Transact-SQL statement?

Each value may be used once, more than once, or not at all.

Box 1: DISTRIBUTION

Table distribution options include DISTRIBUTION = HASH ( distribution_column_name ), which assigns each row to one distribution by hashing the value stored in distribution_column_name.

Box 2: PARTITION

Table partition options. Syntax:

PARTITION ( partition_column_name RANGE [ LEFT | RIGHT ] FOR VALUES ( [ boundary_value [,...n] ] ))
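To show how the two options fit together in one statement, the sketch below assembles a CREATE TABLE statement as text. The table name, column definitions, and boundary values are hypothetical and are not taken from the exam exhibit; RANGE RIGHT is chosen here only as an example.

```python
def partitioned_table_sql(
    table: str,
    columns_sql: str,
    distribution_column: str,
    partition_column: str,
    boundaries: list,
) -> str:
    """Build a CREATE TABLE statement with hash distribution and partitions."""
    values = ", ".join(str(b) for b in boundaries)
    return (
        f"CREATE TABLE {table} ({columns_sql})\n"
        "WITH (\n"
        "    CLUSTERED COLUMNSTORE INDEX,\n"
        # Box 1: how rows are spread across distributions.
        f"    DISTRIBUTION = HASH({distribution_column}),\n"
        # Box 2: how rows are grouped into partitions inside each distribution.
        f"    PARTITION ({partition_column} RANGE RIGHT FOR VALUES ({values}))\n"
        ");"
    )


# Hypothetical table and columns, for illustration only.
print(
    partitioned_table_sql(
        "dbo.FactSales",
        "SaleKey int NOT NULL, OrderDateKey int NOT NULL",
        "SaleKey",
        "OrderDateKey",
        [20210101, 20220101],
    )
)
```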

Reference:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-table-azure-sql-data-warehouse

You are the data engineer for your company. An application uses a NoSQL database to store data. The database uses the key-value and wide-column NoSQL database types.

Developers need to access data in the database using an API.

You need to determine which API to use for the database model and type.

Which two APIs should you use?

Table API

MongoDB API

Gremlin API

SQL API

Cassandra API

Answers are Table API and Cassandra API

key-value --> Table API

graph --> Gremlin API

document --> SQL API and MongoDB API

wide-column --> Cassandra API

Reference:

https://cloud.netapp.com/blog/azure-cvo-blg-azure-nosql-types-services-and-a-quick-tutorial#H_H1