DP-203: Data Engineering on Microsoft Azure

A company manufactures automobile parts. The company installs IoT sensors on manufacturing machinery.

You must design a solution that analyzes data from the sensors.

You need to recommend a solution that meets the following requirements:

- Data must be analyzed in real-time.

- Data queries must be deployed using continuous integration.

- Data must be visualized by using charts and graphs.

- Data must be available for ETL operations in the future.

- The solution must support high-volume data ingestion.

Which three actions should you recommend?

Use Azure Analysis Services to query the data. Output query results to Power BI.

Configure an Azure Event Hub to capture data to Azure Data Lake Storage.

Develop an Azure Stream Analytics application that queries the data and outputs to Power BI. Use Azure Data Factory to deploy the Azure Stream Analytics application.

Develop an application that sends the IoT data to an Azure Event Hub.

Develop an Azure Stream Analytics application that queries the data and outputs to Power BI. Use Azure Pipelines to deploy the Azure Stream Analytics application.

Develop an application that sends the IoT data to an Azure Data Lake Storage container.

Answers are:

Configure an Azure Event Hub to capture data to Azure Data Lake Storage.

Develop an application that sends the IoT data to an Azure Event Hub.

Develop an Azure Stream Analytics application that queries the data and outputs to Power BI. Use Azure Pipelines to deploy the Azure Stream Analytics application.

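Event Hubs handles the high-volume ingestion, and Event Hubs Capture writes the raw stream to Azure Data Lake Storage so that it stays available for future ETL. Stream Analytics analyzes the data in real time and outputs to Power BI for charts and graphs. Azure Pipelines, not Azure Data Factory, provides continuous integration and deployment for the Stream Analytics job. Data Factory's own CI/CD guidance points to Azure Pipelines as well. It lists two suggested methods to promote a data factory to another environment:

- Automated deployment using Data Factory's integration with Azure Pipelines

- Manually upload a Resource Manager template using Data Factory UX integration with Azure Resource Manager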

References:

https://docs.microsoft.com/en-us/azure/data-factory/continuous-integration-deployment
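To make the real-time part concrete, the sketch below imitates in plain Python what a Stream Analytics query with a tumbling window does to sensor readings arriving from Event Hubs. It groups events into fixed, non-overlapping windows and averages each machine's readings per window. The event shape (`machine_id`, `ts`, `temperature`) and the 10-second window are assumptions for illustration. In Stream Analytics itself this would be written in its SQL-like query language (roughly `GROUP BY machine_id, TumblingWindow(second, 10)`), not in Python.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    machine_id: str    # hypothetical machine identifier
    ts: float          # event time in seconds
    temperature: float


def tumbling_window_avg(readings, window_seconds=10):
    """Average temperature per (machine, window), like a tumbling window.

    Each event belongs to exactly one window [start, start + window_seconds).
    Returns {(machine_id, window_end): average}.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for r in readings:
        window_start = int(r.ts // window_seconds) * window_seconds
        # Results are keyed by the window end time, as Stream Analytics stamps window aggregates.
        key = (r.machine_id, window_start + window_seconds)
        sums[key] += r.temperature
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


if __name__ == "__main__":
    stream = [
        Reading("press-1", 1.0, 70.0),
        Reading("press-1", 4.0, 72.0),
        Reading("press-1", 12.0, 80.0),
        Reading("lathe-2", 3.0, 50.0),
    ]
    for (machine, window_end), avg in sorted(tumbling_window_avg(stream).items()):
        print(machine, window_end, avg)
```

Tumbling windows never overlap, so every reading is counted once. That is what makes them a common choice for periodic dashboard tiles in Power BI.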

You are designing a solution for a company. The solution will use model training for objective classification.

You need to design the solution.

What should you recommend?

an Azure Cognitive Services application

a Spark Streaming job

interactive Spark queries

Power BI models

a Spark application that uses Spark MLlib

Answer is a Spark application that uses Spark MLlib.

The key phrase is "objective classification". Classification is a machine learning task, so the answer is the option that uses Spark MLlib.

Spark in SQL Server Big Data Clusters enables AI and machine learning.

You can use Apache Spark MLlib to create a machine learning application to do simple predictive analysis on an open dataset.

MLlib is a core Spark library that provides many utilities useful for machine learning tasks, including utilities that are suitable for:

- Classification

- Regression

- Clustering

- Topic modeling

- Singular value decomposition (SVD) and principal component analysis (PCA)

- Hypothesis testing and calculating sample statistics
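MLlib's classifiers (for example `pyspark.ml.classification.LogisticRegression`) train a model on labeled feature vectors and then predict a class for new rows. As a dependency-free illustration of that train-then-predict idea, the sketch below fits a tiny binary logistic regression with batch gradient descent in plain Python. It is not MLlib code and does not distribute work across a cluster. The toy data, learning rate and epoch count are assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(rows, labels, lr=0.5, epochs=2000):
    """Fit weights and bias for binary logistic regression by batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error for this row: predicted probability minus true label.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(weights, bias, x, threshold=0.5):
    """Return 1 if the predicted probability meets the threshold, else 0."""
    return int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= threshold)


if __name__ == "__main__":
    # Toy data: one feature (e.g. a normalized vibration level), label 1 = defective part.
    features = [[0.1], [0.2], [0.3], [0.8], [0.9], [1.0]]
    labels = [0, 0, 0, 1, 1, 1]
    w, b = train_logistic_regression(features, labels)
    print(predict(w, b, [0.15]), predict(w, b, [0.95]))
```

MLlib runs the same kind of optimization over a distributed DataFrame. That is why it fits a "model training for classification" requirement better than the other options.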

Reference:

https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-machine-learning-mllib-ipython

A company stores data in multiple types of cloud-based databases.

You need to design a solution to consolidate data into a single relational database. Ingestion of data will occur at set times each day.

What should you recommend?

SQL Server Migration Assistant

SQL Data Sync

Azure Data Factory

Azure Database Migration Service

Data Migration Assistant

Answer is Azure Data Factory

SQL Server Migration Assistant and Data Migration Assistant are clearly incorrect. Both are tools for one-time migrations, not for recurring ingestion. Since this is a single-selection question, we have to pick the most appropriate answer from the remaining choices. SQL Data Sync can only sync data between a hub database and member databases, and the hub database must be an Azure SQL Database.

Azure Database Migration Service is mainly used to migrate data from an on-premises RDBMS to an Azure database, or from MongoDB to Azure Cosmos DB. Cloud-to-cloud migrations are possible, but strict network topology requirements apply. The company has several types of cloud-based databases, the consolidation requirements are not fully specified, and ingestion must run at set times each day, which a Data Factory schedule trigger covers. Azure Data Factory is therefore the most universal solution, so the answer is Azure Data Factory.
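The consolidation pattern that Data Factory automates is to copy from several heterogeneous sources into one relational sink, triggered at set times each day. It can be sketched locally with Python's built-in `sqlite3` module standing in for the single relational database. The three source shapes below (a document store, a key-value store and a CSV export) and the table layout are hypothetical. In Data Factory the equivalent would be a pipeline of Copy activities run by a schedule trigger.

```python
import csv
import io
import sqlite3

# Hypothetical extracts from three different cloud databases.
DOCUMENT_SOURCE = [{"id": "c1", "name": "Contoso", "country": "US"}]
KEY_VALUE_SOURCE = {"c2": ("Fabrikam", "DE")}
CSV_SOURCE = "id,name,country\nc3,Northwind,FR\n"


def consolidate(conn):
    """Load all sources into one relational table; safe to re-run on each scheduled run."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id TEXT PRIMARY KEY, name TEXT, country TEXT)"
    )
    rows = [(d["id"], d["name"], d["country"]) for d in DOCUMENT_SOURCE]
    rows += [(key, name, country) for key, (name, country) in KEY_VALUE_SOURCE.items()]
    rows += [(r["id"], r["name"], r["country"]) for r in csv.DictReader(io.StringIO(CSV_SOURCE))]
    # INSERT OR REPLACE upserts on the primary key, so a repeated daily load adds no duplicates.
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    consolidate(conn)
    consolidate(conn)  # a second scheduled run does not duplicate rows
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```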

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/introduction

https://azure.microsoft.com/en-us/blog/operationalize-azure-databricks-notebooks-using-data-factory/

https://azure.microsoft.com/en-us/blog/data-ingestion-into-azure-at-scale-made-easier-with-latest-enhancements-to-adf-copy-data-tool/

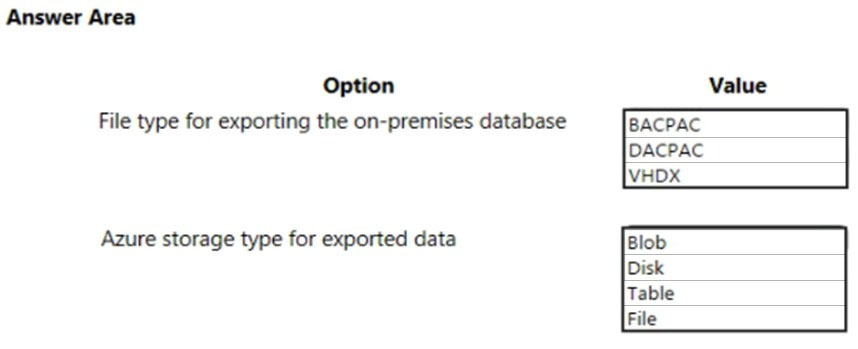

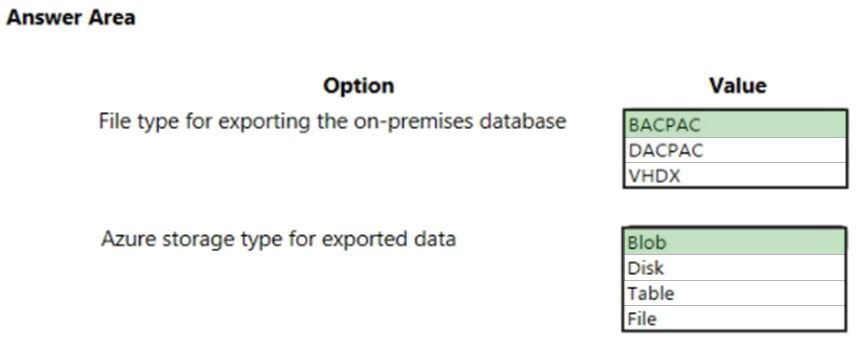

You manage an on-premises server named Server1 that has a database named Database1. The company purchases a new application that can access data from Azure SQL Database.

You need to recommend a solution to migrate Database1 to an Azure SQL Database instance.

What should you recommend?

Database1 is on premises, and the new application can access data from Azure SQL Database. The plan is therefore to import the data from the on-premises database into Azure SQL Database:

Export the on-premises database to a BACPAC file (for example, with Export Data-tier Application in SQL Server Management Studio) -> Upload the BACPAC file to a Blob storage container -> In the Azure portal, open the logical SQL server, select Import database on its Overview page, point it to the storage account and container that hold the BACPAC file, and start the import.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-import
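The export and import can also be scripted. The sketch below only builds the argument lists for Microsoft's SqlPackage command-line tool and does not run them: `/Action:Export` runs against the on-premises server and `/Action:Import` against the Azure SQL logical server. Apart from Server1 and Database1, the server, user and file names are placeholders. The import variant reads the BACPAC from a local path rather than from Blob storage, so it is an alternative to the portal flow, not a copy of it.

```python
def export_args(server, database, bacpac_path):
    """SqlPackage arguments that export an on-premises database to a BACPAC file."""
    return [
        "SqlPackage",
        "/Action:Export",
        f"/SourceServerName:{server}",
        f"/SourceDatabaseName:{database}",
        f"/TargetFile:{bacpac_path}",
    ]


def import_args(azure_server, database, bacpac_path, user, password):
    """SqlPackage arguments that import a BACPAC into a new Azure SQL Database."""
    return [
        "SqlPackage",
        "/Action:Import",
        f"/SourceFile:{bacpac_path}",
        f"/TargetServerName:{azure_server}.database.windows.net",
        f"/TargetDatabaseName:{database}",
        f"/TargetUser:{user}",
        f"/TargetPassword:{password}",
    ]


if __name__ == "__main__":
    # Placeholder names; pass a list to subprocess.run(...) to execute it for real.
    print(" ".join(export_args("Server1", "Database1", "Database1.bacpac")))
    print(" ".join(import_args("myserver", "Database1", "Database1.bacpac", "sqladmin", "<password>")))
```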

You are designing an application. You plan to use Azure SQL Database to support the application.

The application will extract data from the Azure SQL Database and create text documents. The text documents will be placed into a cloud-based storage solution.

The text storage solution must be accessible from an SMB network share.

You need to recommend a data storage solution for the text documents.

Which Azure data storage type should you recommend?

Queue

Files

Blob

Table

Answer is Files

The key term is SMB (Server Message Block). Of the listed storage types, only Azure Files exposes data over SMB, so the answer is Files.

Azure Files enables you to set up highly available network file shares that can be accessed by using the standard Server Message Block (SMB) protocol.

Incorrect Answers:

Queue: The Azure Queue service is used to store and retrieve messages. It is generally used to store lists of messages to be processed asynchronously.

Blob: Blob storage is optimized for storing massive amounts of unstructured data, such as text or binary data. Blob storage can be accessed via HTTP or HTTPS but not via SMB.

Table: Azure Table storage is used to store large amounts of structured data. Azure tables are ideal for storing structured, non-relational data.

Reference:

https://docs.microsoft.com/en-us/azure/storage/common/storage-introduction

https://docs.microsoft.com/en-us/azure/storage/tables/table-storage-overview
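Because the share is reached over SMB, clients address it with an ordinary UNC path of the form `\\<storage-account>.file.core.windows.net\<share>`. On Linux, the CIFS mount source is `//<storage-account>.file.core.windows.net/<share>`. SMB traffic uses TCP port 445. The helper below only formats those paths. The storage account and share names are hypothetical.

```python
SMB_PORT = 445  # Azure Files SMB traffic uses TCP 445, which must be open outbound


def smb_paths(storage_account, share):
    """Return the Windows UNC path and the Linux CIFS mount source for an Azure file share."""
    host = f"{storage_account}.file.core.windows.net"
    windows_unc = "\\\\" + host + "\\" + share
    linux_source = "//" + host + "/" + share
    return windows_unc, linux_source


if __name__ == "__main__":
    unc, src = smb_paths("contosodocs", "textdocs")
    print(unc)
    print(src)
```

The application can then write its text documents to that path like any other network share, which Blob storage cannot offer.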

You are designing an application that will have an Azure virtual machine. The virtual machine will access an Azure SQL database. The database will not be accessible from the Internet.

You need to recommend a solution to provide the required level of access to the database.

What should you include in the recommendation?

Deploy an On-premises data gateway.

Add a virtual network to the Azure SQL server that hosts the database.

Add an application gateway to the virtual network that contains the Azure virtual machine.

Add a virtual network gateway to the virtual network that contains the Azure virtual machine.

Answer is Add a virtual network to the Azure SQL server that hosts the database.

Adding the virtual network means enabling a virtual network service endpoint for Azure SQL Database on the VM's subnet and creating a virtual network rule on the logical SQL server. The VM can then reach the database over the Azure backbone, while the server's firewall grants no Internet access. When you create an Azure virtual machine (VM), you must create a virtual network (VNet) or use an existing VNet. You also need to decide how your VMs are intended to be accessed on the VNet.

Incorrect Answers:

On-premises data gateway: The on-premises data gateway acts as a bridge. It provides quick and secure data transfer between on-premises data, which is data that isn't in the cloud, and several Microsoft cloud services.

Application gateway: Azure Application Gateway is a web traffic load balancer that enables you to manage traffic to your web applications.

Virtual network gateway: A VPN gateway is a specific type of virtual network gateway that is used to send encrypted traffic between an Azure virtual network and an on-premises location over the public Internet.

References:

https://docs.microsoft.com/en-us/azure/virtual-machines/network-overview

https://azure.microsoft.com/de-de/blog/vnet-service-endpoints-for-azure-sql-database-now-generally-available/
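As a sketch of the recommended option, the helper below assembles the two Azure CLI commands involved: `az network vnet subnet update --service-endpoints Microsoft.Sql` and `az sql server vnet-rule create`. It only builds the argument lists and does not call Azure. The resource group, VNet, subnet, server and rule names are placeholders.

```python
def vnet_rule_commands(resource_group, vnet, subnet, sql_server, rule_name):
    """Build Azure CLI argument lists that let one subnet reach an Azure SQL server."""
    enable_endpoint = [
        "az", "network", "vnet", "subnet", "update",
        "--resource-group", resource_group,
        "--vnet-name", vnet,
        "--name", subnet,
        "--service-endpoints", "Microsoft.Sql",  # enable the SQL service endpoint on the VM's subnet
    ]
    create_rule = [
        "az", "sql", "server", "vnet-rule", "create",
        "--resource-group", resource_group,
        "--server", sql_server,
        "--name", rule_name,
        "--vnet-name", vnet,
        "--subnet", subnet,  # the rule admits traffic from this subnet
    ]
    return [enable_endpoint, create_rule]


if __name__ == "__main__":
    for cmd in vnet_rule_commands("rg1", "vnet1", "subnet1", "sqlsrv1", "allow-vm-subnet"):
        print(" ".join(cmd))
```

The endpoint must exist on the subnet before the rule can reference it, which is why the commands are returned in that order.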

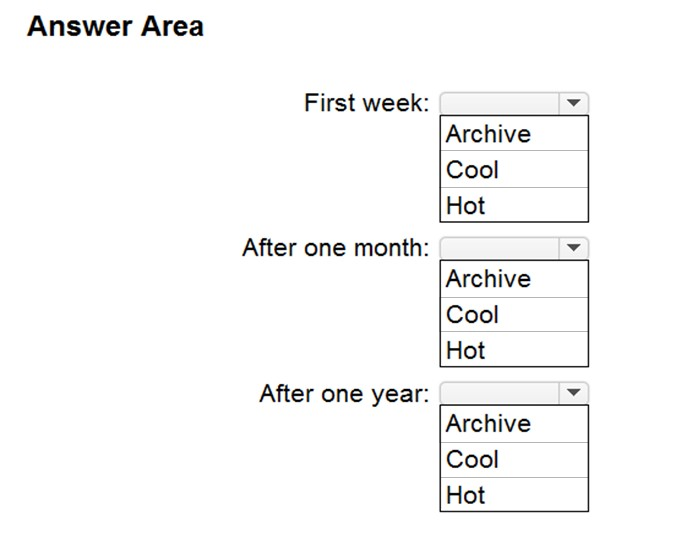

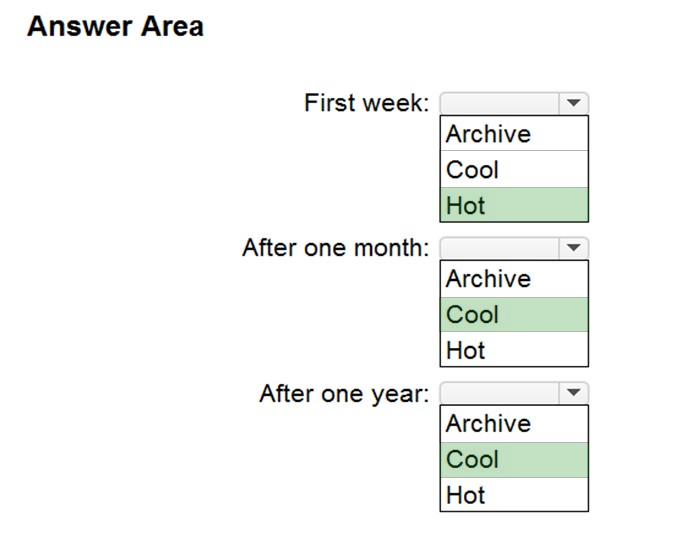

You are designing an application that will store petabytes of medical imaging data.

When the data is first created, the data will be accessed frequently during the first week. After one month, the data must be accessible within 30 seconds, but files will be accessed infrequently. After one year, the data will be accessed infrequently but must be accessible within five minutes.

You need to select a storage strategy for the data. The solution must minimize costs.

Which storage tier should you use for each time frame?

"The archive access tier has the lowest storage cost. But it has higher data retrieval costs compared to the hot and cool tiers. Data in the archive tier can take several hours to retrieve."

First week: Hot

Hot - Optimized for storing data that is accessed frequently.

After one month: Cool

Cool - Optimized for storing data that is infrequently accessed and stored for at least 30 days.

After one year: Cool (Archive retrieval takes hours, so it cannot meet the five-minute requirement.)

Incorrect Answers:

Archive: Optimized for storing data that is rarely accessed and stored for at least 180 days with flexible latency requirements (on the order of hours).

References:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-storage-tiers
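Blob lifecycle management can apply this strategy automatically. The sketch below builds a lifecycle management policy as a Python dictionary and serializes it to JSON. The rule name and the `medical-images/` prefix (a container name) are assumptions, and it assumes the account's default access tier is Hot, so new uploads start there. It moves block blobs to Cool 30 days after last modification. It deliberately has no `tierToArchive` action, because archive rehydration would break the five-minute requirement.

```python
import json


def build_lifecycle_policy(days_to_cool=30, prefix="medical-images/"):
    """Lifecycle policy: stay in the default (assumed Hot) tier, move to Cool, never Archive."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "hot-then-cool",
                "type": "Lifecycle",
                "definition": {
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": days_to_cool}
                            # No "tierToArchive": rehydration takes hours, but reads must finish within 5 minutes.
                        }
                    },
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                },
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(build_lifecycle_policy(), indent=2))
```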

You are designing a data store that will store organizational information for a company. The data will be used to identify the relationships between users. The data will be stored in an Azure Cosmos DB database and will contain several million objects.

You need to recommend which API to use for the database. The API must minimize the complexity to query the user relationships. The solution must support fast traversals.

Which API should you recommend?

MongoDB

Table

Gremlin

Cassandra

Answer is Gremlin

When the question is about relationships between entities (vertices and edges), the Gremlin API is the answer.

Gremlin features fast queries and traversals with the most widely adopted graph query standard.

Reference:

https://docs.microsoft.com/th-th/azure/cosmos-db/graph-introduction?view=azurermps-5.7.0
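To see why a graph model keeps relationship queries simple, the plain-Python sketch below stores users as vertices and labeled edges as adjacency lists. It then walks a management chain breadth-first. With the Gremlin API the same traversal could be a one-line query such as `g.V('dave').repeat(out('reportsTo')).emit()`. The vertex IDs and the `reportsTo` edge label are hypothetical. The Python code only illustrates the traversal; it is not the Cosmos DB client API.

```python
from collections import deque


class Graph:
    """Minimal directed graph: vertices are user IDs, edges carry a label."""

    def __init__(self):
        self.out_edges = {}  # (vertex, label) -> list of target vertices

    def add_edge(self, source, label, target):
        self.out_edges.setdefault((source, label), []).append(target)

    def traverse(self, start, label, max_depth=None):
        """Breadth-first walk along `label` edges; returns vertices in visit order."""
        visited = {start}
        order = []
        queue = deque([(start, 0)])
        while queue:
            vertex, depth = queue.popleft()
            if max_depth is not None and depth >= max_depth:
                continue
            for neighbour in self.out_edges.get((vertex, label), []):
                if neighbour not in visited:
                    visited.add(neighbour)
                    order.append(neighbour)
                    queue.append((neighbour, depth + 1))
        return order


if __name__ == "__main__":
    org = Graph()
    org.add_edge("dave", "reportsTo", "carol")
    org.add_edge("carol", "reportsTo", "bob")
    org.add_edge("bob", "reportsTo", "alice")
    print(org.traverse("dave", "reportsTo"))  # management chain above dave
```

In a document or key-value API the same question would need one lookup per hop issued by the application. A graph engine performs the hops inside a single traversal.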

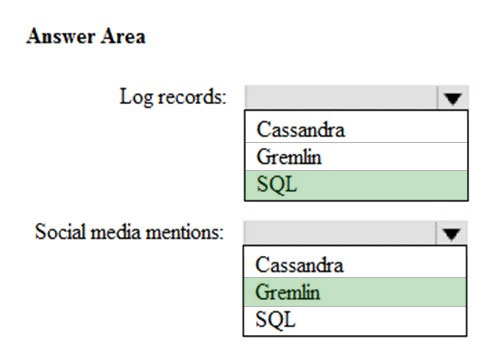

You are designing a new application that uses Azure Cosmos DB. The application will support a variety of data patterns including log records and social media relationships.

You need to recommend which Cosmos DB API to use for each data pattern. The solution must minimize resource utilization.

Which API should you recommend for each data pattern?

Log records: SQL (because logs are row oriented)

Social Media: Gremlin (because it uses relationships)

You can store the actual graph of followers using Azure Cosmos DB Gremlin API to create vertexes for each user and edges that maintain the "A-follows-B" relationships. With the Gremlin API, you can get the followers of a certain user and create more complex queries to suggest people in common. If you add to the graph the Content Categories that people like or enjoy, you can start weaving experiences that include smart content discovery, suggesting content that those people you follow like, or finding people that you might have much in common with.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/social-media-apps
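The follower scenario in the quoted text can be sketched in plain Python. Each "A-follows-B" edge is stored in both directions, so "who follows X" and "whom does X follow" are both cheap lookups. A friend-of-a-friend pass then suggests people in common. With the Gremlin API these would be queries along the lines of `g.V('ann').in('follows')` and `g.V('ann').out('follows').out('follows')`. The user IDs are hypothetical, and this is not the Cosmos DB SDK.

```python
from collections import Counter, defaultdict


class FollowGraph:
    """'A follows B' edges kept as out- and in-adjacency sets."""

    def __init__(self):
        self.following = defaultdict(set)  # user -> users they follow (out-edges)
        self.followers = defaultdict(set)  # user -> users following them (in-edges)

    def follow(self, a, b):
        self.following[a].add(b)
        self.followers[b].add(a)

    def followers_of(self, user):
        return sorted(self.followers.get(user, set()))

    def suggest(self, user, limit=3):
        """Rank users followed by the people `user` follows, excluding existing follows."""
        counts = Counter()
        already = self.following.get(user, set())
        for friend in already:
            for candidate in self.following.get(friend, set()):
                if candidate != user and candidate not in already:
                    counts[candidate] += 1
        # Most shared connections first; ties broken alphabetically for stable output.
        ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
        return [candidate for candidate, _ in ranked[:limit]]


if __name__ == "__main__":
    g = FollowGraph()
    for a, b in [("ann", "bo"), ("ann", "cy"), ("bo", "dee"), ("cy", "dee"), ("cy", "eve")]:
        g.follow(a, b)
    print(g.followers_of("dee"), g.suggest("ann"))
```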