DP-203: Data Engineering on Microsoft Azure

You have an Azure Data Lake Storage Gen2 account that contains JSON files for customers. The files contain two attributes named FirstName and LastName.

You need to copy the data from the JSON files to an Azure Synapse Analytics table by using Azure Databricks. A new column must be created that concatenates the FirstName and LastName values.

You create the following components:

- A destination table in Azure Synapse

- An Azure Blob storage container

- A service principal

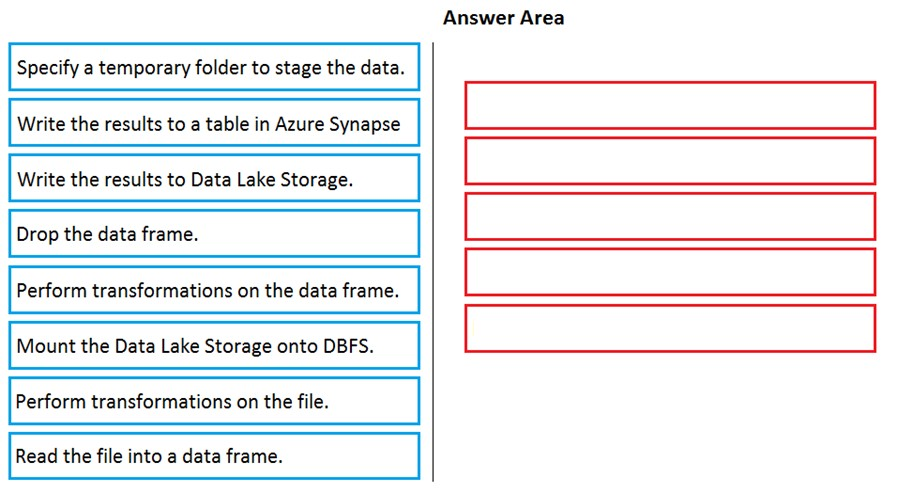

Which five actions should you perform in sequence next in a Databricks notebook?

Some say the sequence could also be F-H-E-A-B.

Step 1: Read the file into a data frame.

You can load the json files as a data frame in Azure Databricks.

Step 2: Perform transformations on the data frame.

Step 3: Specify a temporary folder to stage the data.

Specify a temporary folder to use while moving data between Azure Databricks and Azure Synapse.

Step 4: Write the results to a table in Azure Synapse.

You upload the transformed data frame into Azure Synapse. You use the Azure Synapse connector for Azure Databricks to upload a data frame directly as a table in Azure Synapse.

Step 5: Drop the data frame.

Clean up resources. You can also terminate the cluster: from the Azure Databricks workspace, select Clusters on the left. Then, for the cluster you want to terminate, point to the ellipsis (...) under Actions and select the Terminate icon.
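Step 2 adds a column that concatenates FirstName and LastName. In a PySpark notebook this is typically done with `concat_ws` from `pyspark.sql.functions`. The stdlib-only sketch below models the same per-row logic over JSON Lines input so it can run without a cluster; the column name `FullName`, the helper `add_full_name`, and the sample records are hypothetical.

```python
import json

def add_full_name(json_lines, sep=" "):
    """Parse JSON Lines records and add a hypothetical FullName column
    that concatenates FirstName and LastName (like Spark's concat_ws)."""
    rows = []
    for line in json_lines:
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        # concat_ws skips null inputs; mimic that by ignoring missing/None parts
        parts = [record.get("FirstName"), record.get("LastName")]
        record["FullName"] = sep.join(p for p in parts if p is not None)
        rows.append(record)
    return rows

sample = [
    '{"FirstName": "Ada", "LastName": "Lovelace"}',
    '{"FirstName": "Alan", "LastName": null}',
]
for row in add_full_name(sample):
    print(row)
```

Skipping nulls rather than emitting "Alan None" mirrors how `concat_ws` treats null columns.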

Reference:

https://docs.microsoft.com/en-us/azure/azure-databricks/databricks-extract-load-sql-data-warehouse

You are designing an Azure Databricks interactive cluster.

You need to ensure that the cluster meets the following requirements:

- Enable auto-termination

- Retain cluster configuration indefinitely after cluster termination.

What should you recommend?

Start the cluster after it is terminated.

Pin the cluster

Clone the cluster after it is terminated.

Terminate the cluster manually at process completion.

Answer is Pin the cluster

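Databricks retains the configuration of a terminated all-purpose (interactive) cluster for only 30 days. To keep an interactive cluster configuration after it has been terminated for more than 30 days, an administrator can pin the cluster to the cluster list.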
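Pinning can also be done programmatically. The Databricks Clusters API 2.0 exposes `POST /api/2.0/clusters/pin` with a JSON body containing `cluster_id`; the caller must be a workspace admin. The sketch below only builds the request with the standard library and does not send it; the workspace URL, token, and cluster ID are hypothetical placeholders.

```python
import json
import urllib.request

def build_pin_request(workspace_url, token, cluster_id):
    """Build (but do not send) a POST to the Clusters API 2.0 pin endpoint."""
    body = json.dumps({"cluster_id": cluster_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/clusters/pin",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_pin_request(
    "https://adb-1234567890123456.7.azuredatabricks.net",  # hypothetical workspace URL
    "dapi-EXAMPLE-TOKEN",                                 # hypothetical access token
    "0123-456789-abcde123",                               # hypothetical cluster ID
)
print(req.get_method(), req.full_url)
# To actually pin the cluster: urllib.request.urlopen(req)
```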

Reference:

https://docs.azuredatabricks.net/user-guide/clusters/terminate.html

https://docs.databricks.com/clusters/clusters-manage.html#pin-a-cluster

You are designing an Azure Databricks table. The table will ingest an average of 20 million streaming events per day.

You need to persist the events in the table for use in incremental load pipeline jobs in Azure Databricks. The solution must minimize storage costs and incremental load times.

What should you include in the solution?

Partition by DateTime fields.

Sink to Azure Queue storage.

Include a watermark column.

Use a JSON format for physical data storage.

Answer is Sink to Azure Queue storage.

The Databricks ABS-AQS connector uses Azure Queue Storage (AQS) to provide an optimized file source that lets you find new files written to an Azure Blob storage (ABS) container without repeatedly listing all of the files. This provides two major advantages:

- Lower latency: no need to list nested directory structures on ABS, which is slow and resource intensive.

- Lower costs: no more costly LIST API requests made to ABS.
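The cost difference comes from how new files are discovered. The simulation below is not the connector's API; it is a hypothetical model contrasting a source that re-lists the whole container on every trigger with one that consumes blob-created notifications from a queue. `paths_scanned` is a stand-in for LIST work.

```python
from collections import deque

class ListingSource:
    """Discovers new files by listing the whole container on every trigger."""
    def __init__(self):
        self.seen = set()
        self.paths_scanned = 0  # proxy for LIST API cost

    def poll(self, container):
        self.paths_scanned += len(container)  # every existing path is listed again
        new = [p for p in container if p not in self.seen]
        self.seen.update(new)
        return new

class QueueSource:
    """Discovers new files from blob-created notifications in a queue."""
    def __init__(self, queue):
        self.queue = queue
        self.paths_scanned = 0

    def poll(self):
        new = []
        while self.queue:
            new.append(self.queue.popleft())  # only newly written paths are touched
            self.paths_scanned += 1
        return new

container, notifications = [], deque()
listing, queued = ListingSource(), QueueSource(notifications)
for batch in range(3):
    for i in range(1000):
        path = f"events/batch={batch}/part-{i}.json"
        container.append(path)       # file lands in Blob storage
        notifications.append(path)   # storage event posted to the queue
    assert listing.poll(container) == queued.poll()  # both find the same new files

print("listing scanned:", listing.paths_scanned)
print("queue scanned:", queued.paths_scanned)
```

Both sources return the same new files, but the listing cost grows with the total number of files ever written (1,000 + 2,000 + 3,000 here), while the queue cost grows only with the files that are actually new.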

Reference:

https://docs.microsoft.com/en-us/azure/databricks/spark/latest/structured-streaming/aqs

You have an Azure Databricks workspace named workspace1 in the Standard pricing tier.

You need to configure workspace1 to support autoscaling all-purpose clusters. The solution must meet the following requirements:

- Automatically scale down workers when the cluster is underutilized for three minutes.

- Minimize the time it takes to scale to the maximum number of workers.

- Minimize costs.

What should you do first?

Enable container services for workspace1.

Upgrade workspace1 to the Premium pricing tier.

Set Cluster Mode to High Concurrency.

Create a cluster policy in workspace1.

Answer is Upgrade workspace1 to the Premium pricing tier.

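For clusters running Databricks Runtime 6.4 and above, all-purpose clusters in the Premium plan use optimized autoscaling. All-purpose clusters in the Standard plan use standard autoscaling, which scales down only after 10 minutes of idleness and therefore cannot meet the three-minute requirement.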

Optimized autoscaling:

- Scales up from min to max in 2 steps.

- Can scale down even if the cluster is not idle by looking at shuffle file state.

- Scales down based on a percentage of current nodes.

- On job clusters, scales down if the cluster is underutilized over the last 40 seconds.

- On all-purpose clusters, scales down if the cluster is underutilized over the last 150 seconds.

The spark.databricks.aggressiveWindowDownS Spark configuration property specifies in seconds how often a cluster makes down-scaling decisions. Increasing the value causes a cluster to scale down more slowly. The maximum value is 600.

Note: Standard autoscaling

- Starts with adding 8 nodes. Thereafter, scales up exponentially, but can take many steps to reach the max. You can customize the first step by setting the spark.databricks.autoscaling.standardFirstStepUp Spark configuration property.

- Scales down only when the cluster is completely idle and it has been underutilized for the last 10 minutes.

- Scales down exponentially, starting with 1 node.
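The settings above end up in a cluster definition. The sketch below builds a Clusters API-style spec with an `autoscale` range and a `spark_conf` map, and enforces the documented 600-second maximum for `spark.databricks.aggressiveWindowDownS`. The helper name, node type, and runtime placeholder are hypothetical, and using 180 seconds for the three-minute requirement is an assumption. The spec by itself does not select optimized autoscaling; that comes from the Premium tier.

```python
import json

MAX_WINDOW_DOWN_S = 600  # documented maximum for aggressiveWindowDownS

def autoscaling_cluster_spec(name, min_workers, max_workers, window_down_s=None):
    """Build a hypothetical spec dict for an autoscaling all-purpose cluster."""
    if not 0 <= min_workers <= max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    spec = {
        "cluster_name": name,
        "spark_version": "<runtime-version>",  # placeholder: Runtime 6.4 or above
        "node_type_id": "Standard_DS3_v2",     # hypothetical VM size
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "spark_conf": {},
    }
    if window_down_s is not None:
        if not 0 < window_down_s <= MAX_WINDOW_DOWN_S:
            raise ValueError(f"window must be in (0, {MAX_WINDOW_DOWN_S}] seconds")
        # Spark conf values are passed as strings
        spec["spark_conf"]["spark.databricks.aggressiveWindowDownS"] = str(window_down_s)
    return spec

spec = autoscaling_cluster_spec("etl-shared", 2, 8, window_down_s=180)
print(json.dumps(spec, indent=2))
```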

Reference:

https://docs.databricks.com/clusters/configure.html

You are designing an Azure Databricks cluster that runs user-defined local processes.

You need to recommend a cluster configuration that meets the following requirements:

- Minimize query latency.

- Maximize the number of users that can run queries on the cluster at the same time.

- Reduce overall costs without compromising other requirements.

Which cluster type should you recommend?

Standard with Auto Termination

High Concurrency with Autoscaling

High Concurrency with Auto Termination

Standard with Autoscaling

Answer is High Concurrency with Autoscaling

A High Concurrency cluster is a managed cloud resource. The key benefits of High Concurrency clusters are that they provide fine-grained sharing for maximum resource utilization and minimum query latencies.

Databricks chooses the appropriate number of workers required to run your job. This is referred to as autoscaling. Autoscaling makes it easier to achieve high cluster utilization, because you don't need to provision the cluster to match a workload.

Incorrect Answers:

C (High Concurrency with Auto Termination): The cluster configuration includes an auto-terminate setting whose default value depends on the cluster mode:

- Standard and Single Node clusters terminate automatically after 120 minutes by default.

- High Concurrency clusters do not terminate automatically by default.
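The defaults above can be captured as a small lookup. This hypothetical helper models only the documented defaults and ignores any explicit auto-termination setting a cluster may have.

```python
# Default auto-termination timeouts (minutes) by cluster mode, as listed above.
# None means the cluster does not terminate automatically by default.
DEFAULT_AUTOTERMINATION_MINUTES = {
    "Standard": 120,
    "Single Node": 120,
    "High Concurrency": None,
}

def terminates_by_default(mode, idle_minutes):
    """True if a cluster left at its default setting would auto-terminate
    after being idle for idle_minutes."""
    if mode not in DEFAULT_AUTOTERMINATION_MINUTES:
        raise ValueError(f"unknown cluster mode: {mode!r}")
    timeout = DEFAULT_AUTOTERMINATION_MINUTES[mode]
    return timeout is not None and idle_minutes >= timeout

print(terminates_by_default("Standard", 150))          # True
print(terminates_by_default("High Concurrency", 150))  # False
```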

Reference:

https://docs.microsoft.com/en-us/azure/databricks/clusters/configure

You are planning a solution to aggregate streaming data that originates in Apache Kafka and is output to Azure Data Lake Storage Gen2. The developers who will implement the stream processing solution use Java.

Which service should you recommend using to process the streaming data?

Azure Event Hubs

Azure Data Factory

Azure Stream Analytics

Azure Databricks

Answer is Azure Databricks

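The reference below compares stream processing technologies in Azure by their general capabilities and integration capabilities. Azure Databricks supports Java and can read from Apache Kafka with Spark Structured Streaming, whereas Azure Stream Analytics jobs are written in a SQL-based query language.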
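Aggregating a stream usually means grouping events into time windows. In Spark Structured Streaming (with Java, Scala, and Python APIs), a tumbling-window aggregation is expressed with the `window` function inside a `groupBy`. The stdlib sketch below models only the tumbling-window semantics on a finite list; the Kafka source, the Data Lake sink, and the sample events are hypothetical and omitted or simplified.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

def tumbling_window_counts(events, window_seconds):
    """Count events per (key, window_start) using fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for ts, key in events:
        epoch = int(ts.timestamp())
        window_start = epoch - epoch % window_seconds  # align to the window boundary
        counts[(key, window_start)] += 1
    return dict(counts)

base = datetime(2021, 1, 1, tzinfo=timezone.utc)
events = [
    (base + timedelta(seconds=5), "sensor-a"),
    (base + timedelta(seconds=50), "sensor-a"),
    (base + timedelta(seconds=70), "sensor-a"),
    (base + timedelta(seconds=20), "sensor-b"),
]
for (key, start), n in sorted(tumbling_window_counts(events, 60).items()):
    print(key, datetime.fromtimestamp(start, tz=timezone.utc).isoformat(), n)
```

Each event falls into exactly one window, which is what distinguishes tumbling windows from sliding or hopping windows.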

Reference:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/stream-processing

You plan to develop a dataset named Purchases by using Azure Databricks. Purchases will contain the following columns:

- ProductID

- ItemPrice

- LineTotal

- Quantity

- StoreID

- Minute

- Month

- Hour

- Year

- Day

You need to store the data to support hourly incremental load pipelines that will vary for each Store ID. The solution must minimize storage costs.

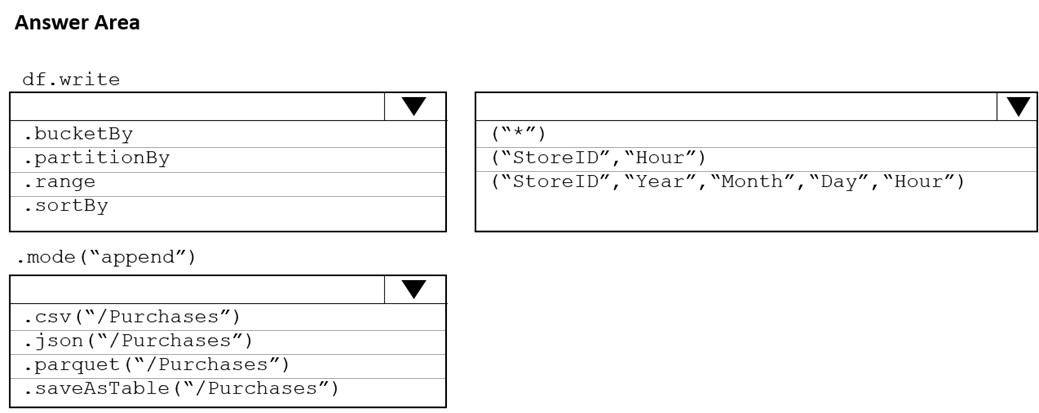

How should you complete the code?

Box 1: partitionBy

Write the data at the partition level, so that each incremental load touches only its own partitions instead of rewriting the whole dataset.

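Example:

```scala
import org.apache.spark.sql.SaveMode

// Append without deleting existing partitions; rows are written to
// subdirectories such as y=2021/m=5/d=3 under the table path.
df.write
  .partitionBy("y", "m", "d")
  .mode(SaveMode.Append)
  .parquet("/data/hive/warehouse/db_name.db/" + tableName)
```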

Box 2: ("StoreID", "Year", "Month", "Day", "Hour")

Box 3: parquet("/Purchases")
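With `partitionBy`, Spark writes each distinct combination of partition values to its own `column=value` subdirectory, so an hourly load for one store reads and writes a single directory. In PySpark the write would look like `df.write.partitionBy("StoreID", "Year", "Month", "Day", "Hour").mode("append").parquet("/Purchases")`. The stdlib helper below, `partition_path`, is hypothetical and only computes the directory a row would land in; the sample row is made up.

```python
def partition_path(base, row, partition_cols):
    """Return the Hive-style directory (col=value/...) that a row lands in."""
    if len(set(partition_cols)) != len(partition_cols):
        raise ValueError("each partition column may appear only once")
    parts = [f"{col}={row[col]}" for col in partition_cols]
    return "/".join([base.rstrip("/")] + parts)

cols = ["StoreID", "Year", "Month", "Day", "Hour"]
row = {"ProductID": 7, "ItemPrice": 2.5, "Quantity": 4, "LineTotal": 10.0,
       "StoreID": 12, "Year": 2021, "Month": 5, "Day": 3, "Hour": 14, "Minute": 42}
print(partition_path("/Purchases", row, cols))
# → /Purchases/StoreID=12/Year=2021/Month=5/Day=3/Hour=14
```

Minute is deliberately left out of the partition columns: partitioning at minute granularity would create many small files without helping an hourly load.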

Reference:

https://intellipaat.com/community/11744/how-to-partition-and-write-dataframe-in-spark-without-deleting-partitions-with-no-new-data

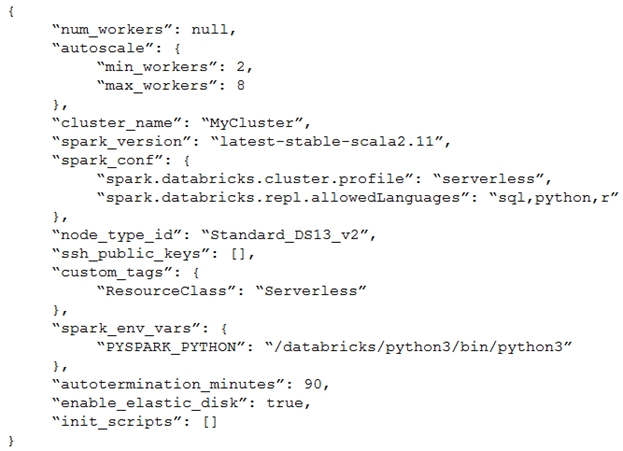

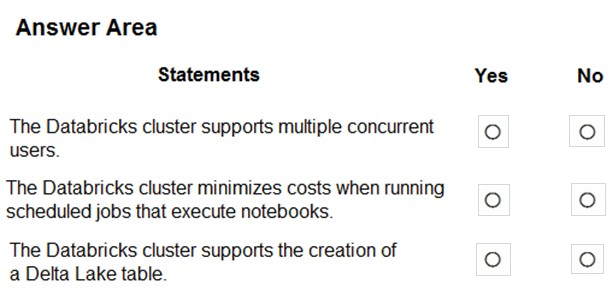

The following code segment is used to create an Azure Databricks cluster.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Box 1: Yes

A cluster mode of High Concurrency is selected, unlike all the others, which are Standard. This results in a worker type of Standard_DS13_v2.

Box 2: No

When you run a job on a new cluster, the job is treated as a data engineering (job) workload subject to the job workload pricing. When you run a job on an existing cluster, the job is treated as a data analytics (all-purpose) workload subject to all-purpose workload pricing.

Box 3: Yes

Delta Lake on Databricks allows you to configure Delta Lake based on your workload patterns.

Reference:

https://adatis.co.uk/databricks-cluster-sizing/

https://docs.microsoft.com/en-us/azure/databricks/jobs

https://docs.databricks.com/administration-guide/capacity-planning/cmbp.html

https://docs.databricks.com/delta/index.html

You are designing a statistical analysis solution that will use custom proprietary Python functions on near real-time data from Azure Event Hubs.

You need to recommend which Azure service to use to perform the statistical analysis. The solution must minimize latency.

What should you recommend?

Azure Synapse Analytics

Azure Databricks

Azure Stream Analytics

Azure SQL Database

Answer is Azure Databricks

Stream Analytics supports "extending SQL language with JavaScript and C# user-defined functions (UDFs)". Stream Analytics does not support Python.

Azure Databricks can process near real-time data from Azure Event Hubs and supports R, SQL, Python, Scala, and Java, so the custom Python functions can run directly on the streaming data.
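The proprietary functions themselves are not shown. As a hypothetical stand-in, the sketch below is an online statistic (Welford's algorithm for running mean and variance) that updates in constant time per event, which suits near real-time data. In Databricks, plain Python code like this could be applied to each micro-batch of a streaming query, for example through `foreachBatch`; the class name and sample values are illustrative only.

```python
import math

class RunningStats:
    """Welford's online mean/variance: O(1) update per event."""
    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)  # uses the updated mean

    @property
    def variance(self):
        """Population variance of the values seen so far."""
        return self._m2 / self.n if self.n else float("nan")

    def zscore(self, x):
        """Standard score of x relative to the values seen so far."""
        sd = math.sqrt(self.variance)
        return (x - self.mean) / sd if sd > 0 else 0.0

stats = RunningStats()
for value in [2, 4, 4, 4, 5, 5, 7, 9]:
    stats.update(value)
print(stats.n, stats.mean, stats.variance)
```

Unlike recomputing over a stored window, the running form never revisits old events, which keeps per-event latency constant.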

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-introduction

You are creating a new notebook in Azure Databricks that will support R as the primary language but will also support Scala and SQL.

Which switch should you use to switch between languages?

%

@

[]

()

Answer is %

To change the language of a Databricks notebook cell to Scala, SQL, Python, or R, start the cell with % followed by the language name. Use one of the following as the first line of the cell:

```
%python
%r
%scala
%sql
```

Reference:

https://www.theta.co.nz/news-blogs/tech-blog/enhancing-digital-twins-part-3-predictive-maintenance-with-azure-databricks