DP-203: Data Engineering on Microsoft Azure

You have an Azure subscription linked to an Azure Active Directory (Azure AD) tenant that contains a service principal named ServicePrincipal1. The subscription contains an Azure Data Lake Storage account named adls1. Adls1 contains a folder named Folder2 that has a URI of

https://adls1.dfs.core.windows.net/container1/Folder1/Folder2/.

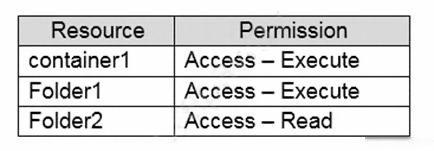

ServicePrincipal1 has the access control list (ACL) permissions shown in the following table.

You need to ensure that ServicePrincipal1 can perform the following actions:

- Traverse child items that are created in Folder2.

- Read files that are created in Folder2.

The solution must use the principle of least privilege.

Which two permissions should you grant to ServicePrincipal1 for Folder2?

Access - Read

Access - Write

Access - Execute

Default - Read

Default - Write

Default - Execute

Answer is Default - Read and Default - Execute

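Execute (X) permission is required to traverse the child items of a folder, and Read (R) permission is required to read the contents of a file. Because both requirements apply to items that will be created in Folder2, the permissions are granted on the Default ACL of Folder2.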

There are two kinds of access control lists (ACLs), Access ACLs and Default ACLs.

Access ACLs: These control access to an object. Files and folders both have Access ACLs.

Default ACLs: A "template" of ACLs associated with a folder that determine the Access ACLs for any child items that are created under that folder. Files do not have Default ACLs.
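As a rough illustration of the "template" behaviour described above, the following Python sketch models a folder whose Default ACL is copied into the Access ACL of each new child. It is a simplified model, not the Azure SDK: `Node`, `create_child`, and `acl_entry` are hypothetical helpers, the principal ID is a placeholder, and the model deliberately ignores the mask and umask that the real service also applies.

```python
from dataclasses import dataclass, field


def acl_entry(principal_id: str, perms: str, default: bool = False) -> str:
    """Format one ACL entry in the short form [default:]user:<id>:<rwx>."""
    # Each position must be its letter (r, w, x) or a dash.
    if len(perms) != 3 or any(p not in (c, "-") for p, c in zip(perms, "rwx")):
        raise ValueError(f"invalid permission string: {perms!r}")
    scope = "default:" if default else ""
    return f"{scope}user:{principal_id}:{perms}"


@dataclass
class Node:
    """Hypothetical file or folder with its ACLs (principal -> 'rwx' string)."""
    name: str
    is_folder: bool
    access: dict = field(default_factory=dict)
    default: dict = field(default_factory=dict)  # only meaningful for folders


def create_child(parent: Node, name: str, is_folder: bool) -> Node:
    """Create a child whose Access ACL is copied from the parent's Default ACL."""
    child = Node(name, is_folder, access=dict(parent.default))
    if is_folder:
        # Child folders also inherit the Default ACL so it keeps propagating.
        child.default = dict(parent.default)
    return child


# Folder2 with Default - Read and Default - Execute for the service principal.
folder2 = Node("Folder2", True, default={"ServicePrincipal1": "r-x"})
new_file = create_child(folder2, "data.csv", is_folder=False)
new_folder = create_child(folder2, "SubFolder", is_folder=True)
```

In this model, `new_file.access` and `new_folder.access` both contain `r-x` for ServicePrincipal1, while only `new_folder` carries a Default ACL forward, matching the statement that files do not have Default ACLs.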

Reference:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-access-control

You perform a daily load of an Azure SQL Data Warehouse by using PolyBase with CTAS statements. Users are reporting that the reports are running slowly. What should you do to improve the performance of the queries?

Try increasing the allocated Request Units (RUs) for your collection.

Install the latest DocumentDB SDK.

Ensure that table statistics are created and kept up to date.

Answer is Ensure that table statistics are created and kept up to date.

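Increasing the allocated Request Units (RUs) for a collection and installing the latest DocumentDB SDK are changes that improve Azure Cosmos DB performance, not SQL Data Warehouse performance. Up-to-date statistics let the SQL Data Warehouse query optimizer choose better query plans.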
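To make the statistics recommendation concrete, here is a minimal Python sketch that generates the T-SQL `CREATE STATISTICS` and `UPDATE STATISTICS` statements you might run after each daily load. The table name `dbo.FactSales`, the column names, and the `stats_` naming pattern are assumptions for illustration only; the sketch only builds the statement text and does not connect to a database.

```python
from __future__ import annotations


def create_statistics_sql(table: str, columns: list[str]) -> list[str]:
    """Build one single-column CREATE STATISTICS statement per column."""
    statements = []
    for column in columns:
        # Hypothetical naming pattern: stats_<schema>_<table>_<column>
        stat_name = f"stats_{table.replace('.', '_')}_{column}"
        statements.append(f"CREATE STATISTICS {stat_name} ON {table} ({column});")
    return statements


def update_statistics_sql(table: str) -> str:
    """Build a statement that refreshes all statistics on the table."""
    return f"UPDATE STATISTICS {table};"


# Hypothetical fact table loaded by the daily CTAS job.
for statement in create_statistics_sql("dbo.FactSales", ["CustomerKey", "OrderDateKey"]):
    print(statement)
print(update_statistics_sql("dbo.FactSales"))
```

Columns used in joins, `GROUP BY`, and `WHERE` clauses are the usual candidates for statistics, and the update step is typically scheduled right after the load completes.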

A company uses Azure Data Lake Gen 1 Storage to store big data related to consumer behavior.

You need to implement logging.

Solution: Configure an Azure Automation runbook to copy events.

Does the solution meet the goal?

Yes

No

Answer is No

Instead, configure Azure Data Lake Storage diagnostics to store logs and metrics in a storage account.

References:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-diagnostic-logs

Your company uses several Azure HDInsight clusters.

The data engineering team reports several errors with some applications using these clusters.

You need to recommend a solution to review the health of the clusters.

What should you include in your recommendation?

Azure Automation

Log Analytics

Application Insights

Answer is Log Analytics

Azure Monitor logs enable data generated by multiple resources, such as HDInsight clusters, to be collected and aggregated in one place to achieve a unified monitoring experience.

As a prerequisite, you will need a Log Analytics Workspace to store the collected data. If you have not already created one, you can follow the instructions for creating a Log Analytics Workspace.

You can then easily configure an HDInsight cluster to send many workload-specific metrics to Log Analytics.

References:

https://azure.microsoft.com/sv-se/blog/monitoring-on-azure-hdinsight-part-2-cluster-health-and-availability/

Contoso, Ltd. plans to configure existing applications to use Azure SQL Database.

When security-related operations occur, the security team must be informed.

You need to configure Azure Monitor while minimizing administrative effort.

Which three actions should you perform?

Create a new action group to email alerts@contoso.com.

Use alerts@contoso.com as an alert email address.

Use all security operations as a condition.

Use all Azure SQL Database servers as a resource.

Query audit log entries as a condition.

Answers are: Create a new action group to email alerts@contoso.com. Use all security operations as a condition. Use all Azure SQL Database servers as a resource.

References:

https://docs.microsoft.com/en-us/azure/azure-monitor/platform/alerts-action-rules

You need to receive an alert when Azure SQL Data Warehouse consumes the maximum allotted resources.

Which resource type and signal should you use to create the alert in Azure Monitor?

Resource Type: [answer options not recovered from the original image]

Signal: [answer options not recovered from the original image]

A - A

A - B

B - B

B - C

C - C

C - D

D - D

D - A

Answer is C - C

Resource type: SQL data warehouse

DWU limit belongs to the SQL data warehouse resource type.

Signal: DWU limit

SQL Data Warehouse capacity limits are maximum values allowed for various components of Azure SQL Data Warehouse.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-insights-alerts-portal

A company uses Azure Data Lake Gen 1 Storage to store big data related to consumer behavior.

You need to implement logging.

Solution: Create an Azure Automation runbook to copy events.

Does the solution meet the goal?

Yes

No

Answer is No

Instead, configure Azure Data Lake Storage diagnostics to store logs and metrics in a storage account.

References:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-diagnostic-logs

You develop data engineering solutions for a company.

A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

- Scale to minimize costs

- Be monitored for cluster performance

Solution: Monitor cluster load using the Ambari Web UI.

Does the solution meet the goal?

Yes

No

Answer is No

Ambari Web UI does not provide information to suggest how to scale.

Instead, monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.

References:

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-manage-ambari

You develop data engineering solutions for a company.

A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

- Scale to minimize costs

- Be monitored for cluster performance

Solution: Monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.

Does the solution meet the goal?

Yes

No

Answer is Yes

HDInsight provides cluster-specific management solutions that you can add for Azure Monitor logs. Management solutions add functionality to Azure Monitor logs, providing additional data and analysis tools. These solutions collect important performance metrics from your HDInsight clusters and provide the tools to search the metrics. These solutions also provide visualizations and dashboards for most cluster types supported in HDInsight. By using the metrics that you collect with the solution, you can create custom monitoring rules and alerts.

References:

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial

You develop data engineering solutions for a company.

A project requires the deployment of resources to Microsoft Azure for batch data processing on Azure HDInsight. Batch processing will run daily and must:

- Scale to minimize costs

- Be monitored for cluster performance

Solution: Download Azure HDInsight cluster logs by using Azure PowerShell.

Does the solution meet the goal?

Yes

No

Answer is No

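Downloading cluster logs by using Azure PowerShell does not provide ongoing performance monitoring. Instead, monitor clusters by using Azure Log Analytics and HDInsight cluster management solutions.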

References:

https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-oms-log-analytics-tutorial