DP-203: Data Engineering on Microsoft Azure

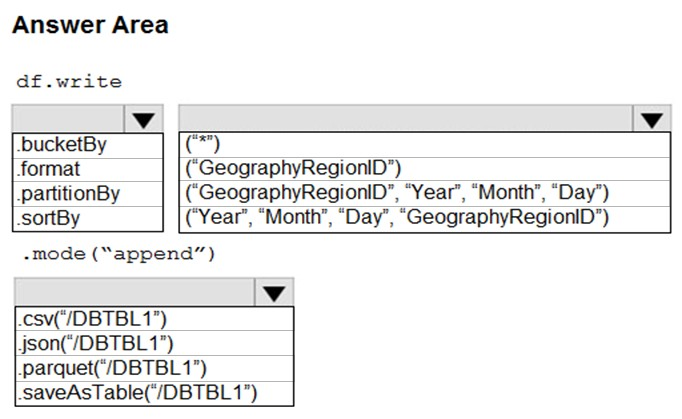

You develop a dataset named DBTBL1 by using Azure Databricks.

DBTBL1 contains the following columns:

● SensorTypeID

● GeographyRegionID

● Year

● Month

● Day

● Hour

● Minute

● Temperature

● WindSpeed

● Other

You need to store the data to support daily incremental load pipelines that vary for each GeographyRegionID. The solution must minimize storage costs.

How should you complete the code?

Box 1: .partitionBy

Incorrect Answers:

- .format:

Method: format()

Arguments: "parquet", "csv", "txt", "json", "jdbc", "orc", "avro", etc.

- .bucketBy:

Method: bucketBy()

Arguments: (numBuckets, col, col..., coln)

The number of buckets and names of columns to bucket by. Uses Hive's bucketing scheme on a filesystem.

Box 2: ("Year", "Month", "Day","GeographyRegionID")

Specify the columns on which to do the partition. Use the date columns followed by the GeographyRegionID column.

Box 3: .saveAsTable("/DBTBL1")

Method: saveAsTable()

Argument: "table_name"

The table to save to.
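As a rough illustration of why the column order in Box 2 matters, the sketch below builds the Hive-style directory that a partitioned write produces for one row (one `column=value` folder per partition column, nested in the order given). It is plain Python rather than Spark; the helper name `partition_dir` and the sample row values are hypothetical.

```python
from pathlib import PurePosixPath

# Partition columns in the order given in Box 2.
PARTITION_COLUMNS = ("Year", "Month", "Day", "GeographyRegionID")


def partition_dir(root, row, columns=PARTITION_COLUMNS):
    """Return the nested column=value directory for one row (illustrative only)."""
    path = PurePosixPath(root)
    for column in columns:
        path = path / f"{column}={row[column]}"
    return str(path)


# Hypothetical sensor reading; only the partition columns affect the path.
row = {
    "SensorTypeID": 7,
    "GeographyRegionID": 3,
    "Year": 2021,
    "Month": 6,
    "Day": 15,
    "Hour": 9,
    "Minute": 30,
    "Temperature": 21.5,
    "WindSpeed": 4.2,
}

print(partition_dir("/DBTBL1", row))
# /DBTBL1/Year=2021/Month=6/Day=15/GeographyRegionID=3
```

A daily load for one region then touches a single leaf folder instead of rewriting the whole dataset.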

Reference:

https://www.oreilly.com/library/view/learning-spark-2nd/9781492050032/ch04.html

https://docs.microsoft.com/en-us/azure/databricks/delta/delta-batch

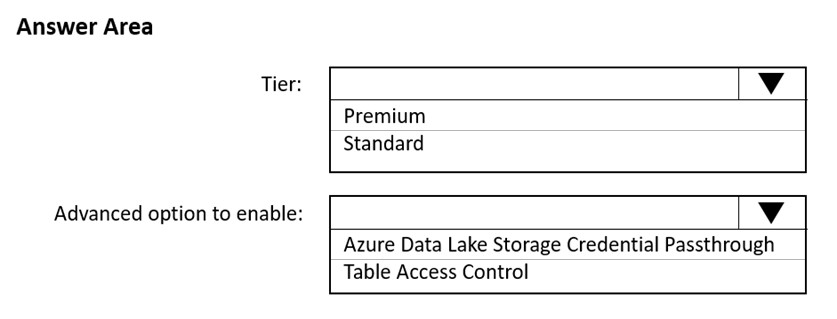

You need to implement an Azure Databricks cluster that automatically connects to Azure Data Lake Storage Gen2 by using Azure Active Directory (Azure AD) integration.

How should you configure the new cluster?

Box 1: Premium

Credential passthrough requires an Azure Databricks Premium Plan.

Box 2: Azure Data Lake Storage credential passthrough

You can access Azure Data Lake Storage using Azure Active Directory credential passthrough.

When you enable your cluster for Azure Data Lake Storage credential passthrough, commands that you run on that cluster can read and write data in Azure Data Lake Storage without requiring you to configure service principal credentials for access to storage.
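The setting is normally enabled with a checkbox in the cluster creation UI. As a hedged sketch of what an equivalent cluster specification might look like when it is created through the Clusters API, the snippet below builds the JSON body in plain Python. The Spark configuration key `spark.databricks.passthrough.enabled` is an assumption taken from Databricks cluster policy examples, and the cluster name, runtime version, and node type are placeholders, not values from the question.

```python
import json


def passthrough_cluster_spec(cluster_name):
    """Build a cluster spec dict with credential passthrough turned on (sketch)."""
    return {
        "cluster_name": cluster_name,
        "spark_version": "<runtime-version>",  # placeholder, choose a supported runtime
        "node_type_id": "<node-type>",         # placeholder, choose an Azure VM size
        "num_workers": 1,
        "autotermination_minutes": 30,
        "spark_conf": {
            # Assumed key for Azure AD credential passthrough.
            "spark.databricks.passthrough.enabled": "true",
        },
    }


spec = passthrough_cluster_spec("passthrough-demo")
print(json.dumps(spec, indent=2))
```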

Reference:

https://docs.microsoft.com/en-us/azure/databricks/security/credential-passthrough/adls-passthrough

You create an Azure Databricks cluster and specify an additional library to install.

When you attempt to load the library to a notebook, the library is not found.

You need to identify the cause of the issue.

What should you review?

notebook logs

cluster event logs

global init scripts logs

workspace logs

Answer is cluster event logs

Azure Databricks provides three kinds of logging of cluster-related activity:

- Cluster event logs, which capture cluster lifecycle events such as creation, termination, and configuration edits.

- Apache Spark driver and worker logs, which you can use for debugging.

- Cluster init-script logs, which are valuable for debugging init scripts.

Reference:

https://docs.microsoft.com/en-us/azure/databricks/clusters/clusters-manage#event-log

You are designing an Azure Databricks interactive cluster. The cluster will be used infrequently and will be configured for auto-termination.

You need to ensure that the cluster configuration is retained indefinitely after the cluster is terminated. The solution must minimize costs.

What should you do?

Pin the cluster.

Create an Azure runbook that starts the cluster every 90 days.

Terminate the cluster manually when processing completes.

Clone the cluster after it is terminated.

Answer is Pin the cluster.

Azure Databricks retains cluster configuration information for up to 70 all-purpose clusters terminated in the last 30 days and up to 30 job clusters recently terminated by the job scheduler. To keep an all-purpose cluster configuration even after it has been terminated for more than 30 days, an administrator can pin a cluster to the cluster list.
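Pinning is usually done from the cluster list in the UI, but it can also be scripted. The sketch below builds (without sending) a request to the Clusters API pin endpoint, `POST /api/2.0/clusters/pin`, using only the Python standard library. The workspace URL, token, and cluster ID are placeholders, and the helper name `build_pin_request` is hypothetical.

```python
import json
from urllib.request import Request


def build_pin_request(workspace_url, token, cluster_id):
    """Build the HTTP request that pins a cluster; the caller decides when to send it."""
    body = json.dumps({"cluster_id": cluster_id}).encode("utf-8")
    return Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/clusters/pin",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; replace with a real workspace URL, token, and cluster ID.
req = build_pin_request(
    "https://<workspace-instance>.azuredatabricks.net/",
    "<personal-access-token>",
    "<cluster-id>",
)
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. Pinning requires administrator rights, as noted above.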

Reference:

https://docs.microsoft.com/en-us/azure/databricks/clusters/

You have an Azure Databricks resource.

You need to log actions that relate to changes in compute for the Databricks resource.

Which Databricks services should you log?

clusters

workspace

DBFS

SSH

jobs

Answer is clusters

Workspace logs do not record cluster-related resource changes. Every event in the referenced table falls under the workspace-level audit log, as the table title indicates, but within that table the cluster-related events belong to the clusters service.

Reference:

https://docs.databricks.com/administration-guide/account-settings/audit-logs.html#workspace-level-audit-log-events

You are planning a streaming data solution that will use Azure Databricks. The solution will stream sales transaction data from an online store. The solution has the following specifications:

- The output data will contain items purchased, quantity, line total sales amount, and line total tax amount.

- Line total sales amount and line total tax amount will be aggregated in Databricks.

- Sales transactions will never be updated. Instead, new rows will be added to adjust a sale.

You need to recommend an output mode for the dataset that will be processed by using Structured Streaming. The solution must minimize duplicate data.

What should you recommend?

Update

Complete

Append

Answer is Append

Append: Only rows added to the result table since the last trigger are written to the sink. This fits the scenario, because sales transactions are never updated and adjustments arrive as new rows, so each row is written once and duplicates are minimized.

Update: Only rows in the result table that changed since the last trigger are written to the sink. Because the scenario never updates existing transactions, this mode adds no benefit and can write the same key to the sink more than once.

Complete: The entire result table is written to the sink after every trigger. This is unnecessary and inefficient here, because only new rows need to be added, and it rewrites previously written data in every micro-batch.
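The difference between the three modes can be seen in a toy model. The sketch below is plain Python, not Structured Streaming: it keeps a running total per item and records what a sink would receive after each micro-batch under each mode. The function name `run_micro_batches` and the sample data are hypothetical, and the append branch simply passes new rows through, which ignores the watermark that Spark requires for append mode with aggregations.

```python
def run_micro_batches(batches, mode):
    """Return what the sink receives per micro-batch for the given output mode."""
    totals = {}   # running result table: item -> total amount
    emitted = []  # one entry per micro-batch
    for batch in batches:
        changed = {}
        for item, amount in batch:
            totals[item] = totals.get(item, 0) + amount
            changed[item] = totals[item]
        if mode == "complete":
            emitted.append(dict(totals))   # whole result table every time
        elif mode == "update":
            emitted.append(changed)        # only keys that changed in this batch
        elif mode == "append":
            emitted.append(list(batch))    # only the newly arrived rows
        else:
            raise ValueError(f"unknown output mode: {mode}")
    return emitted


# Two micro-batches; the second one adjusts the "pen" sale with a new row.
batches = [[("pen", 2), ("ink", 5)], [("pen", 3)]]

for mode in ("append", "update", "complete"):
    print(mode, run_micro_batches(batches, mode))
```

In this model, complete mode re-emits the unchanged `ink` total in the second batch, while append mode emits each input row exactly once.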

Reference:

https://docs.databricks.com/delta/delta-streaming.html

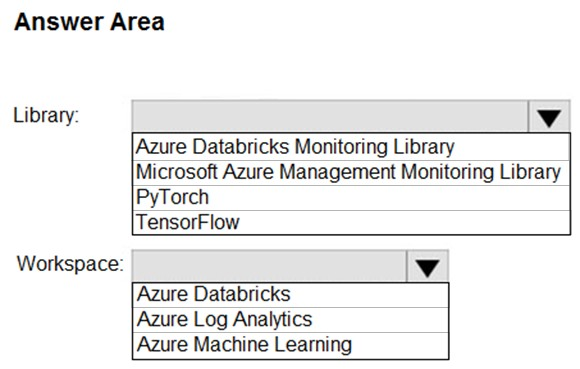

You need to collect application metrics, streaming query events, and application log messages for an Azure Databricks cluster.

Which type of library and workspace should you implement?

Answer is Azure Databricks Monitoring Library and Azure Log Analytics

You can send application logs and metrics from Azure Databricks to a Log Analytics workspace. It uses the Azure Databricks Monitoring Library, which is available on GitHub.

Reference:

https://docs.microsoft.com/en-us/azure/architecture/databricks-monitoring/application-logs

You are planning a solution to aggregate streaming data that originates in Apache Kafka and is output to Azure Data Lake Storage Gen2. The developers who will implement the stream processing solution use Java.

Which service should you recommend using to process the streaming data?

Azure Event Hubs

Azure Data Factory

Azure Stream Analytics

Azure Databricks

Answer is Azure Databricks

Azure Databricks supports Java programming.

Reference:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/stream-processing

You have an Azure Data Lake Storage Gen2 account that contains a JSON file for customers. The file contains two attributes named FirstName and LastName.

You need to copy the data from the JSON file to an Azure Synapse Analytics table by using Azure Databricks. A new column must be created that concatenates the FirstName and LastName values.

You create the following components:

- A destination table in Azure Synapse

- An Azure Blob storage container

- A service principal

Which five actions should you perform in sequence next in a Databricks notebook?

Check the answer area

Step 1: Mount the Data Lake Storage onto DBFS

Begin with creating a file system in the Azure Data Lake Storage Gen2 account.

Step 2: Read the file into a data frame.

You can load the json files as a data frame in Azure Databricks.

Step 3: Perform transformations on the data frame.

Step 4: Specify a temporary folder to stage the data

Specify a temporary folder to use while moving data between Azure Databricks and Azure Synapse.

Step 5: Write the results to a table in Azure Synapse.

Upload the transformed data frame to Azure Synapse. The Azure Synapse connector for Azure Databricks uploads a data frame directly as a table in Azure Synapse.
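The transformation in steps 2 and 3 can be sketched in plain Python with the standard `json` module. In the notebook this would be a data frame operation. The output column name `FullName`, the separator, and the sample customers are assumptions, since the question names only FirstName and LastName.

```python
import json


def add_full_name(records, separator=" "):
    """Return copies of the records with a concatenated FullName field added."""
    return [
        {**record, "FullName": f"{record['FirstName']}{separator}{record['LastName']}"}
        for record in records
    ]


# Hypothetical contents of the customers JSON file.
raw = '[{"FirstName": "Ada", "LastName": "Lovelace"}, {"FirstName": "Alan", "LastName": "Turing"}]'

customers = add_full_name(json.loads(raw))
print(customers[0]["FullName"])
# Ada Lovelace
```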

Reference:

https://docs.microsoft.com/en-us/azure/azure-databricks/databricks-extract-load-sql-data-warehouse

Which Azure Data Factory process involves using compute services to produce data to feed production environments with cleansed data?

Connect and collect

Transform and enrich

Publish

Monitor

Answer is Transform and enrich

Transform and enrich invokes compute services to produce data that feeds production environments with cleansed data. Connect and collect defines and connects all the required data sources. Publish loads the data into a data store or analytical engine so that business users can point their business intelligence tools at it. Monitor tracks scheduled activities and pipelines for success and failure rates.