DP-203: Data Engineering on Microsoft Azure

Which Azure Data Platform technology is commonly used to process data in an ELT framework?

Azure Data Factory

Azure Databricks

Azure Data Lake

Answer is Azure Data Factory

Azure Data Factory (ADF) is a cloud integration service that orchestrates the movement of data between various data stores. Azure Databricks adds capabilities to Apache Spark, including fully managed Spark clusters and an interactive workspace. Azure Data Lake Storage is a Hadoop-compatible data repository that can store data of any size or type.

You are designing an HDInsight/Hadoop cluster solution that uses Azure Data Lake Storage Gen1.

The solution requires POSIX permissions and enables diagnostics logging for auditing.

You need to recommend solutions that optimize storage.

Proposed Solution: Ensure that files stored are larger than 250MB.

Does the solution meet the goal?

Yes

No

Answer is Yes

Depending on what services and workloads are using the data, a good size to consider for files is 256 MB or greater. If the file sizes cannot be batched when landing in Data Lake Storage Gen1, you can have a separate compaction job that combines these files into larger ones.
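The compaction idea can be sketched as a planning step that groups small files until each batch reaches about 256 MB. This is a minimal sketch, assuming file paths and sizes have already been listed from the store; `plan_compaction` and the `/landing/...` paths are hypothetical, and the actual read/merge/write step (for example, a Spark or Data Factory job) is not shown.

```python
from typing import List, Tuple

MB = 1024 * 1024
TARGET_BYTES = 256 * MB  # aim for output files of roughly 256 MB or more

def plan_compaction(files: List[Tuple[str, int]], target: int = TARGET_BYTES) -> List[List[str]]:
    """Group (path, size_in_bytes) pairs into batches of at least `target` bytes.

    Each batch is meant to be merged into one output file by a separate
    compaction job; the final batch may be smaller if the files run out.
    """
    batches: List[List[str]] = []
    current: List[str] = []
    current_size = 0
    for path, size in sorted(files):  # sort by path for a deterministic plan
        current.append(path)
        current_size += size
        if current_size >= target:
            batches.append(current)
            current, current_size = [], 0
    if current:  # leftover files form a final, smaller batch
        batches.append(current)
    return batches

# Ten hypothetical 100 MB files become three ~300 MB outputs plus one leftover file.
small_files = [(f"/landing/part-{i:03d}.json", 100 * MB) for i in range(10)]
plan = plan_compaction(small_files)
print([len(batch) for batch in plan])  # [3, 3, 3, 1]
```

Batching greedily by size keeps the plan simple; a real job might also group by partition folder so that compacted files stay in the same directory layout.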

Note: POSIX permissions and auditing in Data Lake Storage Gen1 come with an overhead that becomes apparent when working with numerous small files. As a best practice, batch your data into larger files rather than writing thousands or millions of small files to Data Lake Storage Gen1.

Avoiding small file sizes can have multiple benefits, such as:

- Fewer authentication checks across multiple files

- Reduced open file connections

- Faster copying/replication

- Fewer files to process when updating Data Lake Storage Gen1 POSIX permissions

Reference:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-best-practices

You are designing an HDInsight/Hadoop cluster solution that uses Azure Data Lake Storage Gen1.

The solution requires POSIX permissions and enables diagnostics logging for auditing.

You need to recommend solutions that optimize storage.

Proposed Solution: Implement compaction jobs to combine small files into larger files.

Does the solution meet the goal?

Yes

No

Answer is Yes

Depending on what services and workloads are using the data, a good size to consider for files is 256 MB or greater. If the file sizes cannot be batched when landing in Data Lake Storage Gen1, you can have a separate compaction job that combines these files into larger ones.

Note: POSIX permissions and auditing in Data Lake Storage Gen1 come with an overhead that becomes apparent when working with numerous small files. As a best practice, batch your data into larger files rather than writing thousands or millions of small files to Data Lake Storage Gen1. Avoiding small file sizes can have multiple benefits, such as:

- Fewer authentication checks across multiple files

- Reduced open file connections

- Faster copying/replication

- Fewer files to process when updating Data Lake Storage Gen1 POSIX permissions
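To see why the per-file overhead matters, a back-of-the-envelope calculation helps. The sketch assumes a hypothetical 100 GB dataset; per-file costs such as authentication checks, open connections, and POSIX permission updates scale roughly with the number of files, so the file count is used here as a rough proxy for that overhead.

```python
MB = 1024 * 1024
GB = 1024 * MB

def file_count(total_bytes: int, file_size_bytes: int) -> int:
    """Files needed to hold total_bytes when each file is file_size_bytes (ceiling division)."""
    return -(-total_bytes // file_size_bytes)

total = 100 * GB                      # hypothetical dataset size
small = file_count(total, 1 * MB)     # 102,400 files of 1 MB
large = file_count(total, 256 * MB)   # 400 files of 256 MB
print(small, large, small // large)   # 102400 400 256
```

Under this assumption, moving from 1 MB to 256 MB files cuts the number of per-file operations by a factor of 256.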

Reference:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-best-practices

You are designing an HDInsight/Hadoop cluster solution that uses Azure Data Lake Storage Gen1.

The solution requires POSIX permissions and enables diagnostics logging for auditing.

You need to recommend solutions that optimize storage.

Proposed Solution: Ensure that files stored are smaller than 250MB.

Does the solution meet the goal?

Yes

No

Answer is No

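Files stored should be larger than 250 MB, not smaller; a good size to consider is 256 MB or greater.

If files cannot be batched when they land in Data Lake Storage Gen1, you can run a separate compaction job that combines them into larger ones.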

Note: POSIX permissions and auditing in Data Lake Storage Gen1 come with an overhead that becomes apparent when working with numerous small files. As a best practice, batch your data into larger files rather than writing thousands or millions of small files to Data Lake Storage Gen1. Avoiding small file sizes can have multiple benefits, such as:

- Fewer authentication checks across multiple files

- Reduced open file connections

- Faster copying/replication

- Fewer files to process when updating Data Lake Storage Gen1 POSIX permissions

Reference:

https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-best-practices

You are designing an Azure SQL Database that will use elastic pools. You plan to store data about customers in a table. Each record uses a value for CustomerID.

You need to recommend a strategy to partition data based on values in CustomerID.

Proposed Solution: Separate data into customer regions by using vertical partitioning.

Does the solution meet the goal?

Yes

No

Answer is No

Vertical partitioning is used for cross-database queries over different sets of tables; it does not split rows by CustomerID. Instead, use horizontal partitioning, which is also called sharding.

Customer scenarios for elastic query are characterized by the following topologies:

Vertical partitioning - Cross-database queries (Topology 1): The data is partitioned vertically between a number of databases in a data tier. Typically, different sets of tables reside on different databases. That means that the schema is different on different databases. For instance, all tables for inventory are on one database while all accounting-related tables are on a second database. Common use cases with this topology require one to query across or to compile reports across tables in several databases.

Horizontal Partitioning - Sharding (Topology 2): Data is partitioned horizontally to distribute rows across a scaled out data tier. With this approach, the schema is identical on all participating databases. This approach is also called “sharding”. Sharding can be performed and managed using (1) the elastic database tools libraries or (2) self-sharding. An elastic query is used to query or compile reports across many shards. Shards are typically databases within an elastic pool. You can think of elastic query as an efficient way for querying all databases of elastic pool at once, as long as databases share the common schema.
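Applied to this question, horizontal partitioning on CustomerID means every shard has the same Customers schema and a shard map decides which database owns each row. The sketch below is a minimal, hypothetical range-based lookup in Python; it echoes the idea of a range shard map in the elastic database tools but is not that library's API, and the class and database names are made up.

```python
import bisect
from typing import List

class CustomerShardMap:
    """Hypothetical range-based shard map: CustomerID -> shard database name.

    Shard i owns CustomerIDs in [lows[i], lows[i + 1]); the last range is open-ended.
    """

    def __init__(self, lows: List[int], shards: List[str]):
        if len(lows) != len(shards):
            raise ValueError("each range needs exactly one shard")
        if lows != sorted(lows):
            raise ValueError("range lower bounds must be sorted")
        self._lows = lows
        self._shards = shards

    def shard_for(self, customer_id: int) -> str:
        # bisect_right finds the first lower bound greater than customer_id;
        # the range just before it is the one that contains customer_id.
        i = bisect.bisect_right(self._lows, customer_id) - 1
        if i < 0:
            raise KeyError(f"CustomerID {customer_id} is below the first range")
        return self._shards[i]

shard_map = CustomerShardMap([0, 1000, 2000], ["customers_db_0", "customers_db_1", "customers_db_2"])
print(shard_map.shard_for(42))    # customers_db_0
print(shard_map.shard_for(1000))  # customers_db_1
print(shard_map.shard_for(5000))  # customers_db_2
```

A range map keeps neighboring CustomerIDs together; a hash-based or list-based mapping would be a different design choice with the same identical-schema property.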

Reference:

https://docs.microsoft.com/en-us/azure/architecture/best-practices/data-partitioning

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-query-overview

You are designing an Azure SQL Database that will use elastic pools. You plan to store data about customers in a table. Each record uses a value for CustomerID.

You need to recommend a strategy to partition data based on values in CustomerID.

Proposed Solution: Separate data into customer regions by using horizontal partitioning.

Does the solution meet the goal?

Yes

No

Answer is No

Use horizontal partitioning through sharding on CustomerID, not a division by customer region.

Note: Horizontal Partitioning - Sharding: Data is partitioned horizontally to distribute rows across a scaled-out data tier. With this approach, the schema is identical on all participating databases. This approach is also called sharding. Sharding can be performed and managed using (1) the elastic database tools libraries or (2) self-sharding. An elastic query is used to query or compile reports across many shards.

Horizontal partitioning splits one or more tables by row, usually within a single instance of a schema and a database server.

Sharding goes beyond this: it partitions the problematic table(s) in the same way, but it does this across potentially multiple instances of the schema. The obvious advantage would be that search load for the large partitioned table can now be split across multiple servers (logical or physical), not just multiple indexes on the same logical server.

Reference:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-query-overview

https://en.wikipedia.org/wiki/Shard_(database_architecture)#Shards_compared_to_horizontal_partitioning

You are designing an Azure SQL Database that will use elastic pools. You plan to store data about customers in a table. Each record uses a value for CustomerID.

You need to recommend a strategy to partition data based on values in CustomerID.

Proposed Solution: Separate data into shards by using horizontal partitioning.

Does the solution meet the goal?

Yes

No

Answer is Yes

Horizontal Partitioning - Sharding: Data is partitioned horizontally to distribute rows across a scaled-out data tier. With this approach, the schema is identical on all participating databases. This approach is also called sharding. Sharding can be performed and managed using (1) the elastic database tools libraries or (2) self-sharding. An elastic query is used to query or compile reports across many shards.
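Elastic query's job is to run one query across shards that share a schema and combine the results. In Azure SQL Database this is configured in T-SQL with external tables over a shard map; the sketch below only simulates the fan-out-and-merge pattern in Python, using in-memory `sqlite3` databases as hypothetical stand-ins for shard databases.

```python
import sqlite3
from typing import Dict, List, Tuple

# Every shard uses the identical schema, as sharding requires.
SCHEMA = "CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, Segment TEXT, Sales REAL)"

def make_shard(rows: List[Tuple[int, str, float]]) -> sqlite3.Connection:
    """Create an in-memory database that stands in for one shard and load its rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO Customers VALUES (?, ?, ?)", rows)
    return conn

def sales_by_segment(shards: List[sqlite3.Connection]) -> Dict[str, float]:
    """Send the same query to every shard and merge the partial aggregates."""
    totals: Dict[str, float] = {}
    for conn in shards:
        for segment, sales in conn.execute(
            "SELECT Segment, SUM(Sales) FROM Customers GROUP BY Segment"
        ):
            totals[segment] = totals.get(segment, 0.0) + sales
    return totals

# Rows are split by CustomerID range: 1-999 on the first shard, 1000+ on the second.
shards = [
    make_shard([(1, "Retail", 10.0), (2, "Business", 5.0)]),
    make_shard([(1000, "Retail", 2.5), (1001, "Business", 7.0)]),
]
print(dict(sorted(sales_by_segment(shards).items())))  # {'Business': 12.0, 'Retail': 12.5}
```

Summing partial sums is safe because SUM is decomposable; an average, for example, would need each shard to return both a sum and a count.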

Reference:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-query-overview

You are designing a data processing solution that will run as a Spark job on an HDInsight cluster. The solution will be used to provide near real-time information about online ordering for a retailer.

The solution must include a page on the company intranet that displays summary information.

The summary information page must meet the following requirements:

- Display a summary of sales to date grouped by product categories, price range, and review scope.

- Display sales summary information including total sales, sales as compared to one day ago and sales as compared to one year ago.

- Reflect information for new orders as quickly as possible.

You need to recommend a design for the solution.

What should you recommend?

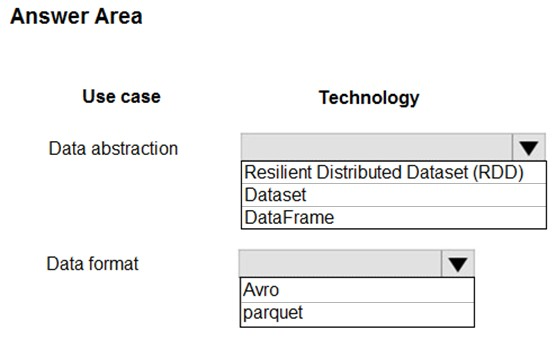

Box 1: DataFrame

- Best choice in most situations.

- Provides query optimization through Catalyst.

- Whole-stage code generation.

- Direct memory access.

- Low garbage collection (GC) overhead.

- Not as developer-friendly as DataSets, as there are no compile-time checks or domain object programming.

Box 2: parquet

The best format for performance is Parquet with Snappy compression, which is the default in Spark 2.x. Parquet stores data in columnar format and is highly optimized in Spark.
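Columnar storage is what makes Parquet a good fit for aggregations such as the sales summaries in this scenario. The toy model below is not the Parquet format (which adds row groups, column chunks, encodings, and compression codecs such as Snappy); under that simplification, it only illustrates why storing each column's values together lets a query read just the columns it needs. The order records are hypothetical.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]

def to_columnar(rows: List[Row]) -> Dict[str, List[Any]]:
    """Pivot row-oriented records into one list per column (a toy columnar layout).

    Assumes a non-empty list of records that all share the same keys.
    """
    columns: Dict[str, List[Any]] = {name: [] for name in rows[0]}
    for row in rows:
        for name in columns:
            columns[name].append(row[name])
    return columns

# Hypothetical order records, stored row by row.
rows = [
    {"order_id": 1, "category": "books", "amount": 12.0},
    {"order_id": 2, "category": "games", "amount": 30.0},
    {"order_id": 3, "category": "books", "amount": 8.0},
]
cols = to_columnar(rows)

# Total sales needs only the "amount" column; a columnar reader can skip the
# order_id and category values entirely instead of scanning whole rows.
total_sales = sum(cols["amount"])
print(total_sales)  # 50.0
```

Keeping same-typed values together also tends to compress well, which is one reason the columnar layout pairs naturally with a codec like Snappy.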

Incorrect Answers:

DataSets

- Good in complex ETL pipelines where the performance impact is acceptable.

- Not good in aggregations, where the performance impact can be considerable.

RDDs

- You do not need to use RDDs unless you need to build a new custom RDD.

- No query optimization through Catalyst.

- No whole-stage code generation.

- High GC overhead.

Reference:

https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-perf

You are evaluating data storage solutions to support a new application.

You need to recommend a data storage solution that represents data by using nodes and relationships in graph structures.

Which data storage solution should you recommend?

Blob Storage

Azure Cosmos DB

Azure Data Lake Store

HDInsight

Answer is Azure Cosmos DB

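A graph data store manages two types of information: nodes, which represent entities, and edges, which represent the relationships between them. For large graphs with many entities and relationships, you can perform complex analyses quickly, and many graph databases provide a query language that you can use to traverse a network of relationships efficiently.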

Relevant Azure service: Azure Cosmos DB, which supports graph data through its Gremlin (Apache TinkerPop) API.
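Traversing relationships is the core graph operation. The sketch below is a plain-Python breadth-first traversal over a hypothetical `knows` graph, not Cosmos DB code; with the Gremlin API, a single hop of the same kind would be written roughly as `g.V('alice').out('knows')`.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical graph: each vertex maps to the vertices it "knows" (outgoing edges).
EDGES: Dict[str, List[str]] = {
    "alice": ["bob", "carol"],
    "bob": ["dave"],
    "carol": ["dave", "erin"],
    "dave": [],
    "erin": ["alice"],
}

def within_hops(graph: Dict[str, List[str]], start: str, max_hops: int) -> Set[str]:
    """Return every vertex reachable from `start` in 1..max_hops edge traversals."""
    seen = {start}
    found: Set[str] = set()
    frontier = deque([(start, 0)])
    while frontier:
        vertex, depth = frontier.popleft()
        if depth == max_hops:  # do not expand beyond the hop limit
            continue
        for neighbor in graph.get(vertex, []):
            if neighbor not in seen:
                seen.add(neighbor)
                found.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return found

print(sorted(within_hops(EDGES, "alice", 2)))  # ['bob', 'carol', 'dave', 'erin']
```

A graph database stores the edges so that this kind of hop-by-hop expansion is cheap, which is what a relational or blob store does not optimize for.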

Reference:

https://docs.microsoft.com/en-us/azure/architecture/guide/technology-choices/data-store-overview

You are designing a data processing solution that will implement the lambda architecture pattern. The solution will use Spark running on HDInsight for data processing.

You need to recommend a data storage technology for the solution.

Which two technologies should you recommend?

Azure Cosmos DB

Azure Service Bus

Azure Storage Queue

Apache Cassandra

Kafka HDInsight

Answers are:

For batch processing: Azure Cosmos DB

For stream processing: Kafka HDInsight

To implement a lambda architecture on Azure, you can combine the following technologies to accelerate real-time big data analytics:

- Azure Cosmos DB, the industry's first globally distributed, multi-model database service.

- Apache Spark for Azure HDInsight, a processing framework that runs large-scale data analytics applications

- Azure Cosmos DB change feed, which streams new data to the batch layer for HDInsight to process

- The Spark to Azure Cosmos DB Connector

Kafka HDInsight: You can use Apache Spark to stream data into or out of Apache Kafka on HDInsight using DStreams.
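The batch/speed split that Cosmos DB and Kafka serve in this design can be sketched in a few lines. This is a minimal in-memory model under stated assumptions: the event tuples, integer timestamps, and function names are hypothetical; in the Azure design the master data would live in Cosmos DB, new data would arrive through the change feed or Kafka, and Spark on HDInsight would compute the views.

```python
from collections import Counter
from typing import Iterable, Tuple

Event = Tuple[int, str]  # (timestamp, product): a hypothetical order event

def batch_view(master: Iterable[Event], cutoff: int) -> Counter:
    """Batch layer: recompute order counts from all events up to `cutoff` (complete but slow)."""
    return Counter(product for ts, product in master if ts <= cutoff)

class SpeedLayer:
    """Speed layer: incrementally count events that arrived after the last batch cutoff."""

    def __init__(self, cutoff: int):
        self.cutoff = cutoff
        self.counts: Counter = Counter()

    def ingest(self, event: Event) -> None:
        ts, product = event
        if ts > self.cutoff:  # older events are already covered by the batch view
            self.counts[product] += 1

def serve(batch: Counter, speed: SpeedLayer) -> Counter:
    """Serving layer: answer a query by merging the batch view with the real-time view."""
    return batch + speed.counts

master = [(1, "book"), (2, "game"), (3, "book")]
batch = batch_view(master, cutoff=3)
speed = SpeedLayer(cutoff=3)
for event in [(4, "book"), (5, "toy")]:  # e.g. events arriving from a stream
    speed.ingest(event)
print(dict(serve(batch, speed)))  # {'book': 3, 'game': 1, 'toy': 1}
```

When the next batch run moves the cutoff forward, the speed layer's counts for the covered period can be discarded, which is the usual way a lambda architecture keeps the real-time view small.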

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/lambda-architecture