DP-203: Data Engineering on Microsoft Azure

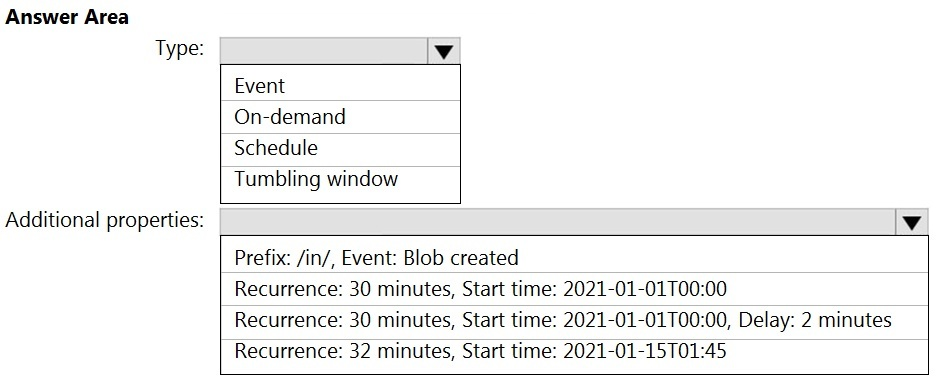

You build an Azure Data Factory pipeline to move data from an Azure Data Lake Storage Gen2 container to a database in an Azure Synapse Analytics dedicated SQL pool.

Data in the container is stored in the following folder structure.

/in/{YYYY}/{MM}/{DD}/{HH}/{mm}

The earliest folder is /in/2021/01/01/00/00. The latest folder is /in/2021/01/15/01/45.

You need to configure a pipeline trigger to meet the following requirements:

- Existing data must be loaded.

- Data must be loaded every 30 minutes.

- Late-arriving data of up to two minutes must be included in the load for the time at which the data should have arrived.

How should you configure the pipeline trigger?

Box 1: Tumbling window

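Only the tumbling window trigger supports the Delay parameter, and it can also backfill existing data when its start time is set in the past, so we select Tumbling window.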

Box 2: Recurrence: 30 minutes, not 32 minutes. Delay: 2 minutes.

Delay is the amount of time to delay the start of data processing for the window. The pipeline run is started after the expected execution time plus the amount of delay.

The delay defines how long the trigger waits past the due time before triggering a new run. The delay doesn't alter the window startTime.
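The effect of the delay can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not Data Factory code: it assumes the expected execution time of a window is the end of that window, and the two-hour cut-off is a hypothetical value chosen to keep the output short.

```python
from datetime import datetime, timedelta


def tumbling_windows(start, end, interval, delay):
    """Yield (window_start, window_end, earliest_run_time) for each full window."""
    window_start = start
    while window_start + interval <= end:
        window_end = window_start + interval
        # The delay postpones the run; it does not move the window boundaries.
        yield window_start, window_end, window_end + delay
        window_start = window_end


windows = list(
    tumbling_windows(
        start=datetime(2021, 1, 1, 0, 0),   # earliest folder in the question
        end=datetime(2021, 1, 1, 2, 0),     # hypothetical cut-off for the demo
        interval=timedelta(minutes=30),     # recurrence: 30 minutes
        delay=timedelta(minutes=2),         # late-arrival allowance
    )
)

for window_start, window_end, run_at in windows:
    print(f"window {window_start:%H:%M}-{window_end:%H:%M} runs at {run_at:%H:%M}")
```

Each window still covers exactly 30 minutes; only the run time shifts by two minutes, which is why the recurrence stays at 30 rather than 32.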

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-tumbling-window-trigger

You need to schedule an Azure Data Factory pipeline to execute when a new file arrives in an Azure Data Lake Storage Gen2 container.

Which type of trigger should you use?

on-demand

tumbling window

schedule

event

Answer is event

Event-driven architecture (EDA) is a common data integration pattern that involves production, detection, consumption, and reaction to events. Data integration scenarios often require Data Factory customers to trigger pipelines based on events happening in a storage account, such as the arrival or deletion of a file in an Azure Blob Storage account.
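As a rough illustration of how a storage event trigger decides whether to fire, the sketch below mimics a "blob created" filter with a path prefix and suffix. The function name, container name, and paths are hypothetical; in Data Factory the filtering is configured on the trigger rather than written as code.

```python
def should_fire(event_type, blob_path, begins_with, ends_with):
    """Return True when a storage event would start the pipeline."""
    return (
        event_type == "Microsoft.Storage.BlobCreated"  # only react to new blobs
        and blob_path.startswith(begins_with)          # prefix filter
        and blob_path.endswith(ends_with)              # suffix filter
    )


# Hypothetical container and folder names.
prefix = "/landing/blobs/in/"
suffix = ".csv"

print(should_fire("Microsoft.Storage.BlobCreated", "/landing/blobs/in/sales.csv", prefix, suffix))
print(should_fire("Microsoft.Storage.BlobDeleted", "/landing/blobs/in/sales.csv", prefix, suffix))
```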

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-event-trigger

You have two Azure Data Factory instances named ADFdev and ADFprod. ADFdev connects to an Azure DevOps Git repository.

You publish changes from the main branch of the Git repository to ADFdev.

You need to deploy the artifacts from ADFdev to ADFprod.

What should you do first?

From ADFdev, modify the Git configuration.

From ADFdev, create a linked service.

From Azure DevOps, create a release pipeline.

From Azure DevOps, update the main branch.

Answer is From Azure DevOps, create a release pipeline.

In Azure Data Factory, continuous integration and delivery (CI/CD) means moving Data Factory pipelines from one environment (development, test, production) to another.

Note:

The following is a guide for setting up an Azure Pipelines release that automates the deployment of a data factory to multiple environments.

1. In Azure DevOps, open the project that's configured with your data factory.

2. On the left side of the page, select Pipelines, and then select Releases.

3. Select New pipeline, or, if you have existing pipelines, select New and then New release pipeline.

4. In the Stage name box, enter the name of your environment.

5. Select Add artifact, and then select the git repository configured with your development data factory. Select the publish branch of the repository for the Default branch. By default, this publish branch is adf_publish.

6. Select the Empty job template.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/continuous-integration-deployment

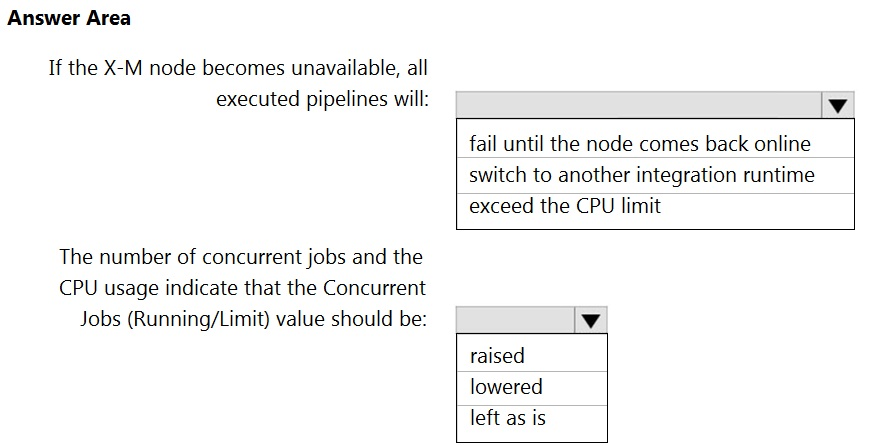

You have a self-hosted integration runtime in Azure Data Factory.

The current status of the integration runtime has the following configurations:

- Status: Running

- Type: Self-Hosted

- Version: 4.4.7292.1

- Running / Registered Node(s): 1/1

- High Availability Enabled: False

- Linked Count: 0

- Queue Length: 0

- Average Queue Duration: 0.00s

The integration runtime has the following node details:

- Name: X-M

- Status: Running

- Version: 4.4.7292.1

- Available Memory: 7697MB

- CPU Utilization: 6%

- Network (In/Out): 1.21KBps/0.83KBps

- Concurrent Jobs (Running/Limit): 2/14

- Role: Dispatcher/Worker

- Credential Status: In Sync

Use the drop-down menus to select the answer choice that completes each statement based on the information presented.

Box 1: fail until the node comes back online

We see: High Availability Enabled: False

Note: Enabling high availability means the self-hosted integration runtime is no longer a single point of failure in your big data solution or cloud data integration with Data Factory. With only one registered node and high availability disabled, runs fail while that node is offline.

Box 2: lowered

We see:

Concurrent Jobs (Running/Limit): 2/14

CPU Utilization: 6%

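Note: When the processor and available RAM aren't well utilized, but the execution of concurrent jobs reaches a node's limits, scale up by increasing the number of concurrent jobs that a node can run. Here the opposite holds: only 2 of the 14 available job slots are in use, so the limit can be lowered.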

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime

You have an Azure data factory.

You need to examine the pipeline failures from the last 60 days.

What should you use?

the Activity log blade for the Data Factory resource

the Monitor & Manage app in Data Factory

the Resource health blade for the Data Factory resource

Azure Monitor

Answer is Azure Monitor

Data Factory stores pipeline-run data for only 45 days. Use Azure Monitor if you want to keep that data for a longer time.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/monitor-using-azure-monitor

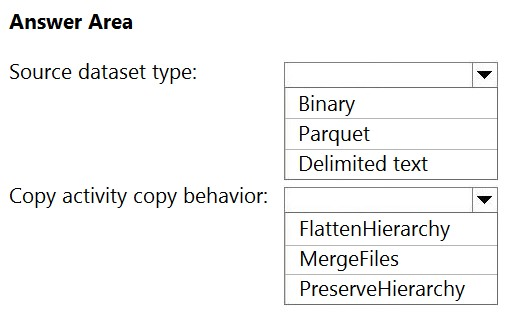

You have two Azure Storage accounts named Storage1 and Storage2. Each account contains an Azure Data Lake Storage file system. The system has files that contain data stored in the Apache Parquet format.

You need to copy folders and files from Storage1 to Storage2 by using a Data Factory copy activity. The solution must meet the following requirements:

- No transformations must be performed.

- The original folder structure must be retained.

How should you configure the copy activity?

Box 1: Parquet

For Parquet datasets, the type property of the copy activity source must be set to ParquetSource.

Box 2: PreserveHierarchy

PreserveHierarchy (default): Preserves the file hierarchy in the target folder. The relative path of the source file to the source folder is identical to the relative path of the target file to the target folder.

Incorrect Answers:

FlattenHierarchy: All files from the source folder are in the first level of the target folder. The target files have autogenerated names.

MergeFiles: Merges all files from the source folder to one file. If the file name is specified, the merged file name is the specified name. Otherwise, it's an autogenerated file name.
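The three copy behaviors can be compared with a small Python model of where each source file would land. This is a sketch only: the function, the folder names, and the `autogen_` and `merged.parquet` names are hypothetical stand-ins, since Data Factory generates its own file names.

```python
from pathlib import PurePosixPath


def sink_paths(source_files, source_root, sink_root, behavior):
    """Return the sink paths a copy would produce for the given behavior."""
    root = PurePosixPath(source_root)
    sink = PurePosixPath(sink_root)
    if behavior == "PreserveHierarchy":
        # Relative path under the source root is kept under the sink root.
        return [str(sink / PurePosixPath(f).relative_to(root)) for f in source_files]
    if behavior == "FlattenHierarchy":
        # Everything lands in the first level; names here are placeholders.
        return [str(sink / f"autogen_{i}.parquet") for i in range(len(source_files))]
    if behavior == "MergeFiles":
        # All source files collapse into a single target file.
        return [str(sink / "merged.parquet")]
    raise ValueError(f"unknown copy behavior: {behavior}")


files = ["/data/2021/01/a.parquet", "/data/2021/02/b.parquet"]
for behavior in ("PreserveHierarchy", "FlattenHierarchy", "MergeFiles"):
    print(behavior, sink_paths(files, "/data", "/copy", behavior))
```

Only PreserveHierarchy keeps the year and month folders, which is what the requirement to retain the original folder structure calls for.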

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/format-parquet

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

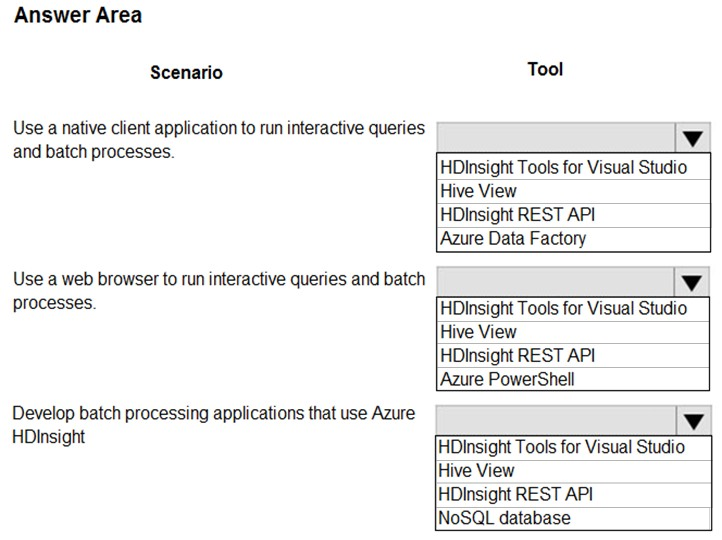

A company plans to develop solutions to perform batch processing of multiple sets of geospatial data.

You need to implement the solutions.

Which Azure services should you use?

Box 1: HDInsight Tools for Visual Studio

Azure HDInsight Tools for Visual Studio Code is an extension in the Visual Studio Code Marketplace for developing Hive Interactive Query, Hive Batch Job and PySpark Job against Microsoft HDInsight.

Box 2: Hive View

You can use Apache Ambari Hive View with Apache Hadoop in HDInsight. The Hive View allows you to author, optimize, and run Hive queries from your web browser.

Box 3: HDInsight REST API

Azure HDInsight REST APIs are used to create and manage HDInsight resources through Azure Resource Manager.

References:

https://visualstudiomagazine.com/articles/2019/01/25/vscode-hdinsight.aspx

https://docs.microsoft.com/en-us/azure/hdinsight/hadoop/apache-hadoop-use-hive-ambari-view

https://docs.microsoft.com/en-us/rest/api/hdinsight/

You have an Azure Data Factory pipeline that performs an incremental load of source data to an Azure Data Lake Storage Gen2 account.

Data to be loaded is identified by a column named LastUpdatedDate in the source table.

You plan to execute the pipeline every four hours.

You need to ensure that the pipeline execution meets the following requirements:

- Automatically retries the execution when the pipeline run fails due to concurrency or throttling limits.

- Supports backfilling existing data in the table.

Which type of trigger should you use?

event

on-demand

schedule

tumbling window

Answer is tumbling window

Azure Data Factory pipeline executions using Triggers:

• Schedule Trigger: The schedule trigger is used to execute the Azure Data Factory pipelines on a wall-clock schedule.

• Tumbling Window Trigger: Can be used to process historical data. It also lets you define a delay, maximum concurrency, and a retry policy.

• Event-Based Trigger: Executes pipelines in response to a blob-related event, such as creating or deleting a blob, in an Azure Blob Storage account.
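For orientation, a tumbling window trigger that covers both requirements might be defined along the following lines, shown here as a Python dictionary that serializes to the trigger JSON. The trigger name, pipeline name, start time, parameter names, and the concurrency and retry values are all assumptions made for illustration; check the property names against the tumbling window trigger reference before relying on them.

```python
import json

trigger = {
    "name": "IncrementalLoadTrigger",  # hypothetical trigger name
    "properties": {
        "type": "TumblingWindowTrigger",
        "typeProperties": {
            "frequency": "Hour",
            "interval": 4,                        # run every four hours
            "startTime": "2021-01-01T00:00:00Z",  # assumed past date, so older windows are backfilled
            "maxConcurrency": 4,                  # assumed limit on parallel window runs
            "retryPolicy": {"count": 3, "intervalInSeconds": 300},  # assumed retry settings
        },
        "pipeline": {
            "pipelineReference": {
                "type": "PipelineReference",
                "referenceName": "IncrementalLoad",  # hypothetical pipeline name
            },
            # Window boundaries passed to the pipeline to filter on LastUpdatedDate.
            "parameters": {
                "windowStart": "@trigger().outputs.windowStartTime",
                "windowEnd": "@trigger().outputs.windowEndTime",
            },
        },
    },
}

print(json.dumps(trigger, indent=2))
```

A schedule trigger has no equivalent of the retry policy or the per-window backfill, which is why it does not satisfy the requirements.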

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/how-to-create-tumbling-window-trigger

https://www.sqlshack.com/how-to-schedule-azure-data-factory-pipeline-executions-using-triggers/

You are building an Azure Data Factory solution to process data received from Azure Event Hubs, and then ingested into an Azure Data Lake Storage Gen2 container.

The data will be ingested every five minutes from devices into JSON files. The files have the following naming pattern.

/{deviceType}/in/{YYYY}/{MM}/{DD}/{HH}/{deviceID}_{YYYY}{MM}{DD}{HH}{mm}.json

You need to prepare the data for batch data processing so that there is one dataset per hour per deviceType. The solution must minimize read times.

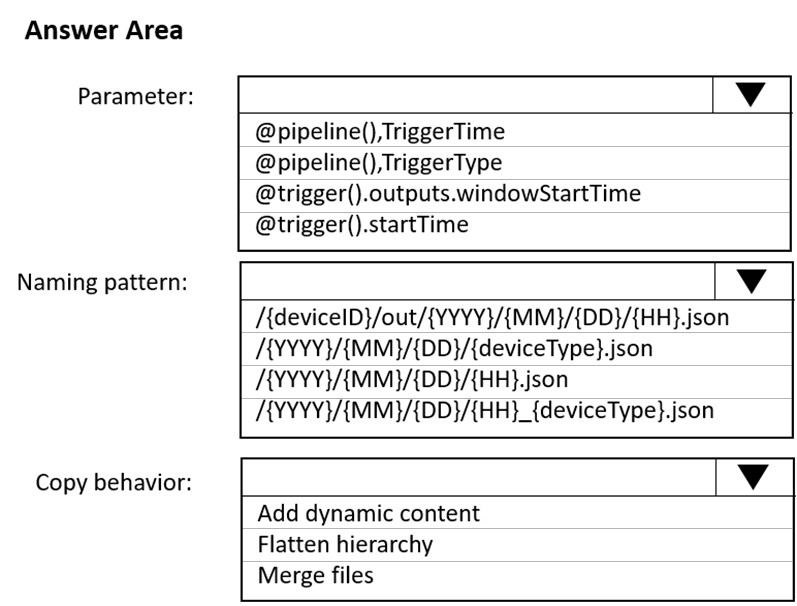

How should you configure the sink for the copy activity?

1) @trigger().outputs.windowStartTime - this output is from a tumbling window trigger, and is required to identify the correct directory at the /{HH}/ level. Using windowStartTime will give the hour with complete data. The @trigger().startTime is for a schedule trigger, which corresponds to the hour for which data has not arrived yet.

2) /{YYYY}/{MM}/{DD}/{HH}_{deviceType}.json is the naming pattern to achieve an hourly dataset for each device type.

3) Multiple files for each device type will exist on the source side, since the naming pattern starts with {deviceID}... so the files must be merged in the sink to create a single file per device type.
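The path logic in the three points above can be sketched in Python. The function names and the `thermostat` device type are hypothetical; in the pipeline itself the same formatting would be done with an expression on `@trigger().outputs.windowStartTime`.

```python
from datetime import datetime


def source_folder(window_start, device_type):
    """Folder holding the five-minute files for one device type and one hour."""
    return f"/{device_type}/in/{window_start:%Y/%m/%d/%H}"


def hourly_sink_path(window_start, device_type):
    """Single merged file per hour and device type."""
    return f"/{window_start:%Y/%m/%d/%H}_{device_type}.json"


# Window start as it would arrive from a tumbling window trigger (example value).
window_start = datetime(2021, 1, 15, 1, 0)

print(source_folder(window_start, "thermostat"))
print(hourly_sink_path(window_start, "thermostat"))
```

Because the window start identifies an hour that has already closed, every five-minute file for that hour is present before the files are merged.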

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/concepts-pipeline-execution-triggers

https://docs.microsoft.com/en-us/azure/data-factory/connector-file-system

You have the following Azure Data Factory pipelines:

- Ingest Data from System1

- Ingest Data from System2

- Populate Dimensions

- Populate Facts

Ingest Data from System1 and Ingest Data from System2 have no dependencies. Populate Dimensions must execute after Ingest Data from System1 and Ingest Data from System2. Populate Facts must execute after Populate Dimensions pipeline. All the pipelines must execute every eight hours.

What should you do to schedule the pipelines for execution?

Add an event trigger to all four pipelines.

Add a schedule trigger to all four pipelines.

Create a parent pipeline that contains the four pipelines and use a schedule trigger.

Create a parent pipeline that contains the four pipelines and use an event trigger.

Answer is Create a parent pipeline that contains the four pipelines and use a schedule trigger.

The parent pipeline has four Execute Pipeline activities. Ingest 1 and Ingest 2 have no dependencies. The Dimension pipeline has two dependencies, on the 'on completion' outputs of both Ingest 1 and Ingest 2. The Fact pipeline has one 'on completion' dependency on the Dimension pipeline. A tumbling window trigger is not needed here.
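The dependency structure of the parent pipeline can be checked with a short Python sketch. It models only the ordering, not Data Factory itself, and it assumes Python 3.9 or later for the standard-library `graphlib` module.

```python
from graphlib import TopologicalSorter

# Each pipeline maps to the pipelines that must complete before it starts.
depends_on = {
    "Ingest Data from System1": [],
    "Ingest Data from System2": [],
    "Populate Dimensions": ["Ingest Data from System1", "Ingest Data from System2"],
    "Populate Facts": ["Populate Dimensions"],
}

# static_order() returns one valid execution order for the whole graph.
order = list(TopologicalSorter(depends_on).static_order())

for step, pipeline in enumerate(order, start=1):
    print(step, pipeline)
```

The two ingest pipelines can run in either order or in parallel; the single schedule trigger on the parent pipeline then starts the whole chain every eight hours.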