DP-203: Data Engineering on Microsoft Azure

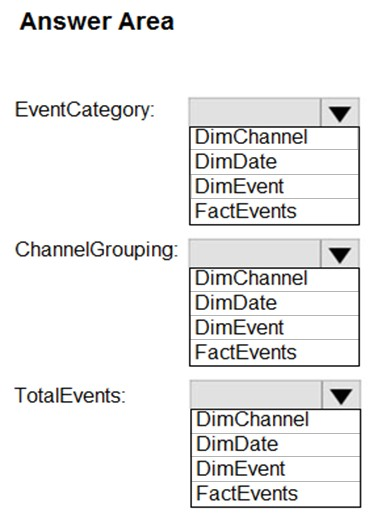

From a website analytics system, you receive data extracts about user interactions such as downloads, link clicks, form submissions, and video plays.

The data contains the following columns.

You need to design a star schema to support analytical queries of the data. The star schema will contain four tables including a date dimension.

To which table should you add each column?

Box 1: DimEvent

Box 2: DimChannel

Box 3: FactEvents

Fact tables store observations or events, and can be sales orders, stock balances, exchange rates, temperatures, etc

Reference:

https://docs.microsoft.com/en-us/power-bi/guidance/star-schema

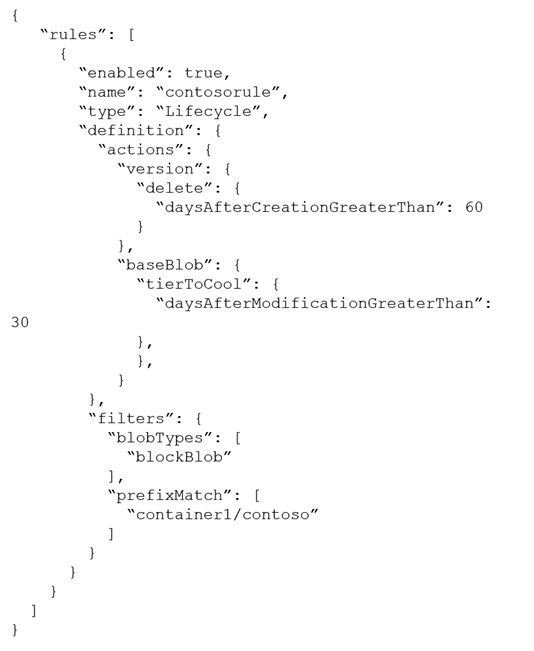

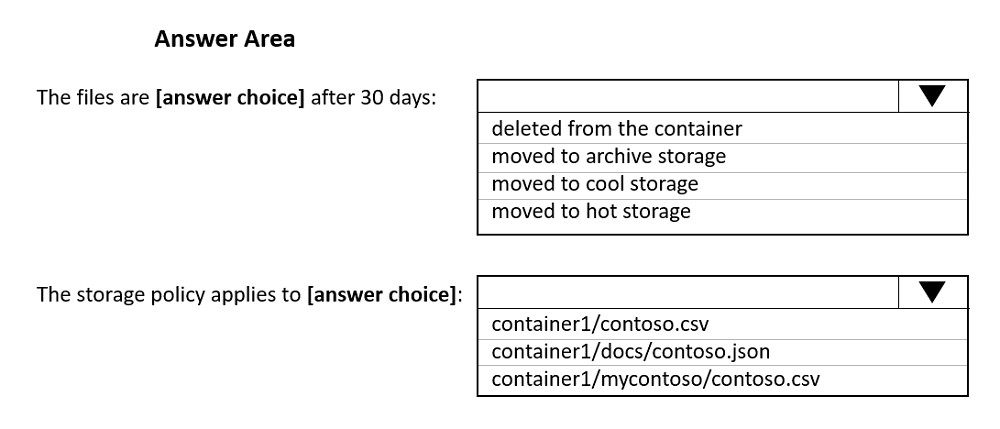

You store files in an Azure Data Lake Storage Gen2 container. The container has the storage policy shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

Box 1: moved to cool storage

The ManagementPolicyBaseBlob.TierToCool property gets or sets the function to tier blobs to cool storage. Support blobs currently at Hot tier.

Box 2: container1/contoso.csv

As defined by prefixMatch.

prefixMatch: An array of strings for prefixes to be matched. Each rule can define up to 10 case-senstive prefixes. A prefix string must start with a container name.

Reference:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.management.storage.fluent.models.managementpolicybaseblob.tiertocool

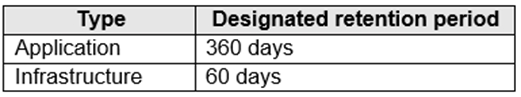

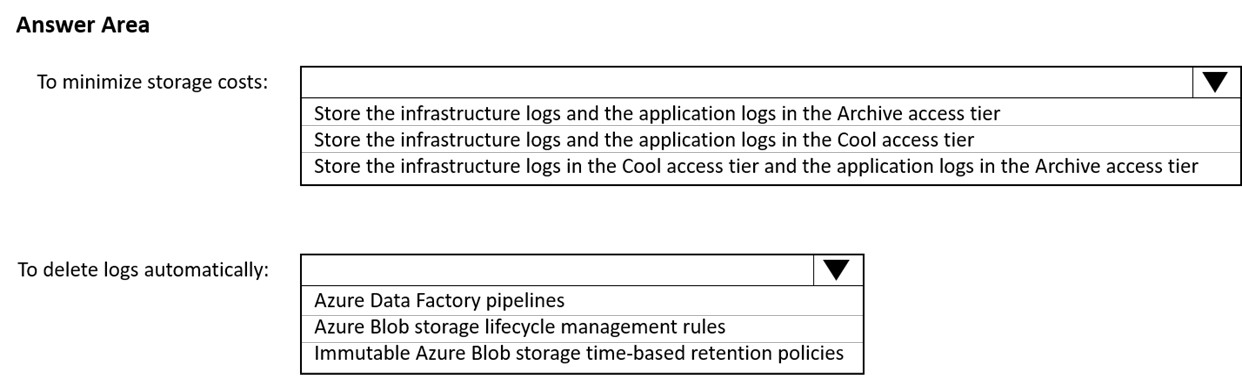

You have an Azure Data Lake Storage Gen2 account named account1 that stores logs as shown in the following table.

You do not expect that the logs will be accessed during the retention periods.

You need to recommend a solution for account1 that meets the following requirements:

● Automatically deletes the logs at the end of each retention period

● Minimizes storage costs

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

Box 1: Store the infrastructure logs in the Cool access tier and the application logs in the Archive access tier

For infrastructure logs: Cool tier - An online tier optimized for storing data that is infrequently accessed or modified. Data in the cool tier should be stored for a minimum of 30 days. The cool tier has lower storage costs and higher access costs compared to the hot tier.

For application logs: Archive tier - An offline tier optimized for storing data that is rarely accessed, and that has flexible latency requirements, on the order of hours.

Data in the archive tier should be stored for a minimum of 180 days.

Box 2: Azure Blob storage lifecycle management rules

Blob storage lifecycle management offers a rule-based policy that you can use to transition your data to the desired access tier when your specified conditions are met. You can also use lifecycle management to expire data at the end of its life.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/access-tiers-overview https://docs.microsoft.com/en-us/azure/storage/blobs/access-tiers-overview

A company plans to use Platform-as-a-Service (PaaS) to create the new data pipeline process. The process must meet the following requirements:

Ingest:

● Access multiple data sources.

● Provide the ability to orchestrate workflow.

● Provide the capability to run SQL Server Integration Services packages.

Store:

● Optimize storage for big data workloads.

● Provide encryption of data at rest.

● Operate with no size limits.

Prepare and Train:

● Provide a fully-managed and interactive workspace for exploration and visualization.

● Provide the ability to program in R, SQL, Python, Scala, and Java.

Provide seamless user authentication with Azure Active Directory.

Model & Serve:

● Implement native columnar storage.

● Support for the SQL language

● Provide support for structured streaming.

You need to build the data integration pipeline.

Which technologies should you use? To answer, select the appropriate options in the answer area.

Answer is

Ingest: Azure Data Factory

Azure Data Factory pipelines can execute SSIS packages.

In Azure, the following services and tools will meet the core requirements for pipeline orchestration, control flow, and data movement: Azure Data Factory, Oozie on HDInsight, and SQL Server Integration Services (SSIS).

Store: Data Lake Storage

Data Lake Storage Gen1 provides unlimited storage.

Note: Data at rest includes information that resides in persistent storage on physical media, in any digital format. Microsoft Azure offers a variety of data storage solutions to meet different needs, including file, disk, blob, and table storage. Microsoft also provides encryption to protect Azure SQL Database, Azure Cosmos

DB, and Azure Data Lake.

Prepare and Train: Azure Databricks

Azure Databricks provides enterprise-grade Azure security, including Azure Active Directory integration.

With Azure Databricks, you can set up your Apache Spark environment in minutes, autoscale and collaborate on shared projects in an interactive workspace.

Azure Databricks supports Python, Scala, R, Java and SQL, as well as data science frameworks and libraries including TensorFlow, PyTorch and scikit-learn.

Model and Serve: Azure Synapse Analytics

Azure Synapse Analytics/ SQL Data Warehouse stores data into relational tables with columnar storage.

Azure SQL Data Warehouse connector now offers efficient and scalable structured streaming write support for SQL Data Warehouse. Access SQL Data

Warehouse from Azure Databricks using the SQL Data Warehouse connector.

Note: As of November 2019, Azure SQL Data Warehouse is now Azure Synapse Analytics.

Reference:

https://docs.microsoft.com/bs-latn-ba/azure/architecture/data-guide/technology-choices/pipeline-orchestration-data-movement

https://docs.microsoft.com/en-us/azure/azure-databricks/what-is-azure-databricks

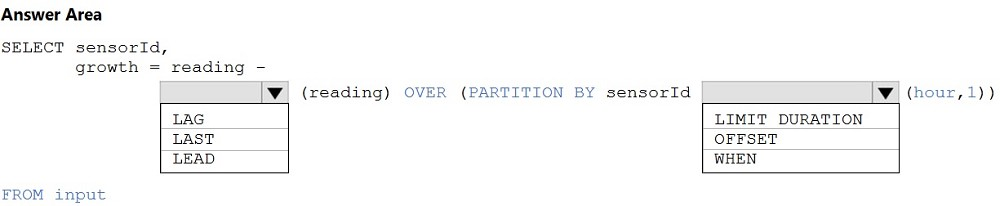

You are building an Azure Analytics query that will receive input data from Azure IoT Hub and write the results to Azure Blob storage.

You need to calculate the difference in the number of readings per sensor per hour.

How should you complete the query?

Box 1: LAG

The LAG analytic operator allows one to look up a previous event in an event stream, within certain constraints. It is very useful for computing the rate of growth of a variable, detecting when a variable crosses a threshold, or when a condition starts or stops being true.

Box 2: LIMIT DURATION

Example: Compute the rate of growth, per sensor:

SELECT sensorId,

growth = reading

LAG(reading) OVER (PARTITION BY sensorId LIMIT DURATION(hour, 1))

FROM input

Reference:

https://docs.microsoft.com/en-us/stream-analytics-query/lag-azure-stream-analytics

You are designing a star schema for a dataset that contains records of online orders. Each record includes an order date, an order due date, and an order ship date.

You need to ensure that the design provides the fastest query times of the records when querying for arbitrary date ranges and aggregating by fiscal calendar attributes.

Which two actions should you perf

Create a date dimension table that has a DateTime key.

Use built-in SQL functions to extract date attributes.

Create a date dimension table that has an integer key in the format of YYYYMMDD.

In the fact table, use integer columns for the date fields.

Use DateTime columns for the date fields.

Answers are;

Create a date dimension table that has an integer key in the format of YYYYMMDD.

In the fact table, use integer columns for the date fields.

we are designing a star schema so we need a dimension table for the date

Reference:

https://community.idera.com/database-tools/blog/b/community_blog/posts/why-use-a-date-dimension-table-in-a-data-warehouse

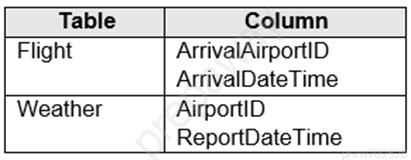

You have two fact tables named Flight and Weather. Queries targeting the tables will be based on the join between the following columns.

You need to recommend a solution that maximizes query performance.

What should you include in the recommendation?

In the tables use a hash distribution of ArrivalDateTime and ReportDateTime.

In the tables use a hash distribution of ArrivalAirportID and AirportID.

In each table, create an IDENTITY column.

In each table, create a column as a composite of the other two columns in the table.

Answer is In the tables use a hash distribution of ArrivalAirportID and AirportID.

Hash-distribution improves query performance on large fact tables.

Incorrect Answers:

A: Do not use a date column for hash distribution. All data for the same date lands in the same distribution. If several users are all filtering on the same date, then only 1 of the 60 distributions do all the processing work.

You have an Azure Synapse Analytics workspace named WS1 that contains an Apache Spark pool named Pool1.

You plan to create a database named DB1 in Pool1.

You need to ensure that when tables are created in DB1, the tables are available automatically as external tables to the built-in serverless SQL pool.

Which format should you use for the tables in DB1?

CSV

ORC

JSON

Parquet

Answer is Parquet

For each Spark external table based on Parquet or CSV and located in Azure Storage, an external table is created in a serverless SQL pool database. As such, you can shut down your Spark pools and still query Spark external tables from serverless SQL pool.

So A and D. Parquet are faster so D

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/metadata/database

You plan to implement an Azure Data Lake Storage Gen2 container that will contain CSV files. The size of the files will vary based on the number of events that occur per hour.

File sizes range from 4 KB to 5 GB.

You need to ensure that the files stored in the container are optimized for batch processing.

What should you do?

Convert the files to JSON

Convert the files to Avro

Compress the files

Merge the files

Answer is Merge the files

Option B: Convert the files to Avro

While converting the files to Avro is a valid option for optimizing data storage and processing, it may not be the most suitable choice in this specific scenario. Avro is a binary serialization format that is efficient for compact storage and fast data processing. It provides schema evolution support and is widely used in big data processing frameworks like Apache Hadoop and Apache Spark.

However, in the given scenario, the files are already in CSV format. Converting them to Avro would require additional processing and potentially introduce complexity. Avro is better suited for scenarios where data is generated or consumed by systems that natively support Avro or for cases where schema evolution is a critical requirement.

On the other hand, merging the files (Option D) is a more straightforward and common approach to optimize batch processing. It helps reduce the overhead associated with managing a large number of small files, improves data scanning efficiency, and enhances overall processing performance. Merging files is a recommended practice to achieve better performance and cost efficiency in scenarios where file sizes vary.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-best-practices#optimize-for-data-ingest

Who's responsible for performing software updates on your Azure SQL databases and the underlying OS?

You are. It's up to you to periodically log in and install the latest security patches and updates.

Microsoft Azure. Azure manages the hardware, software updates, and OS patches for you.

No one. Your database stays with its original OS and software configuration.

Answer is "Microsoft Azure. Azure manages the hardware, software updates, and OS patches for you."

Azure SQL databases are a Platform-as-a-Service (PaaS) offering, meaning much less maintenance to manage yourself.