DP-203: Data Engineering on Microsoft Azure

You have an Azure Data Lake Storage account that contains a staging zone.

You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.

Solution: You use an Azure Data Factory schedule trigger to execute a pipeline that executes mapping data flow, and then inserts the data into the data warehouse.

Does this meet the goal?

Yes

No

Answer is No

If you need to transform data in a way that Data Factory does not support, you can create a custom activity, not a mapping data flow, with your own data processing logic and use the activity in the pipeline. Mapping data flows cannot execute R scripts, so this solution does not meet the goal. You can create a custom activity to run R scripts on your HDInsight cluster with R installed.
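The Custom activity approach can be sketched with the Python below. It builds Data Factory JSON for a pipeline that contains a Custom activity running an R script, plus a schedule trigger that starts the pipeline once a day. It assumes the Custom activity runs on an Azure Batch pool that has R installed, rather than on the HDInsight cluster mentioned above, and that the R script is stored in a storage account. All names (`RScriptBatch`, `StagingStorage`, `IngestAndTransformDaily`, `transform.R`, `scripts/r`) are hypothetical. Check the property layout against the Data Factory JSON reference before relying on it.

```python
import json

# Hypothetical resource names: replace them with your own.
BATCH_LINKED_SERVICE = "RScriptBatch"      # Azure Batch linked service (assumed)
STORAGE_LINKED_SERVICE = "StagingStorage"  # storage that holds the R script (assumed)
PIPELINE_NAME = "IngestAndTransformDaily"


def custom_r_activity(script: str, folder: str) -> dict:
    """Custom activity that runs an R script with Rscript on the Batch pool."""
    return {
        "name": "RunRTransform",
        "type": "Custom",
        "linkedServiceName": {
            "referenceName": BATCH_LINKED_SERVICE,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            # Assumes Rscript is installed on the Batch pool nodes.
            "command": f"Rscript {script}",
            # Storage linked service and folder that Batch downloads the script from.
            "resourceLinkedService": {
                "referenceName": STORAGE_LINKED_SERVICE,
                "type": "LinkedServiceReference",
            },
            "folderPath": folder,
        },
    }


def daily_trigger(start_time: str) -> dict:
    """Schedule trigger that starts the pipeline once a day."""
    return {
        "name": "DailyTrigger",
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": PIPELINE_NAME,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


pipeline = {
    "name": PIPELINE_NAME,
    "properties": {"activities": [custom_r_activity("transform.R", "scripts/r")]},
}

print(json.dumps(pipeline, indent=2))
print(json.dumps(daily_trigger("2024-01-01T02:00:00Z"), indent=2))
```

The step that loads the R output into the Synapse data warehouse is left out to keep the sketch short. For example, that step could be a Copy activity chained to `RunRTransform` through `dependsOn`.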

Reference:

https://docs.microsoft.com/en-US/azure/data-factory/transform-data

You have an Azure Data Lake Storage account that contains a staging zone.

You need to design a daily process to ingest incremental data from the staging zone, transform the data by executing an R script, and then insert the transformed data into a data warehouse in Azure Synapse Analytics.

Solution: You schedule an Azure Databricks job that executes an R notebook, and then inserts the data into the data warehouse.

Does this meet the goal?

Yes

No

Answer is No

You must use Azure Data Factory, not an Azure Databricks job.

Reference:

https://docs.microsoft.com/en-US/azure/data-factory/transform-data

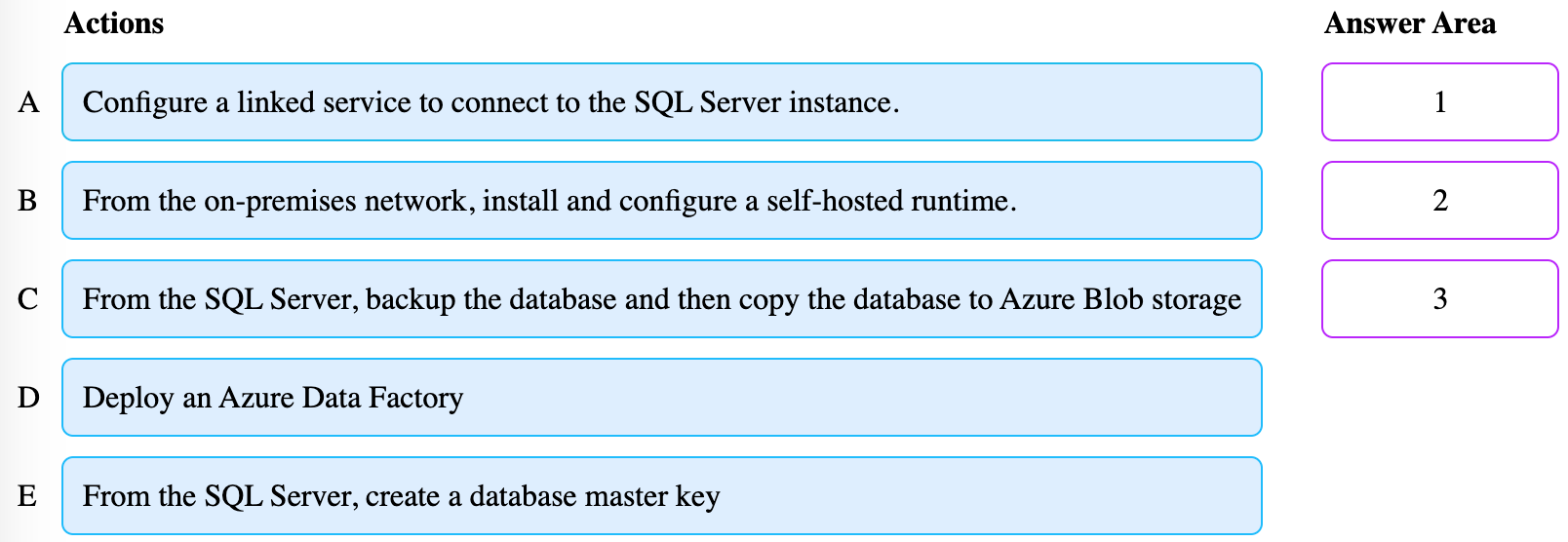

Your company has an on-premises Microsoft SQL Server instance.

The data engineering team plans to implement a process that copies data from the SQL Server instance to Azure Blob storage. The process must orchestrate and manage the data lifecycle.

You need to configure Azure Data Factory to connect to the SQL Server instance.

Which three actions should you perform in sequence?

A-C-D

B-A-E

C-D-B

D-B-A

A-B-C

B-C-D

C-D-E

E-D-B

Answer is D-B-A

Step 1: Deploy an Azure Data Factory

You need to create a data factory and start the Data Factory UI to create a pipeline in the data factory.

Step 2: From the on-premises network, install and configure a self-hosted integration runtime.

To copy data from a SQL Server database that isn't publicly accessible, you need to set up a self-hosted integration runtime.
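The Python below is a minimal sketch of the Data Factory side of this step. It shows the resource definition that registers a self-hosted integration runtime in the factory. The name `OnPremSelfHostedIR` is hypothetical. Defining the runtime does not install anything. The integration runtime software still has to be installed on a machine in the on-premises network and registered with one of the runtime's authentication keys. You can retrieve a key in the portal or with `Get-AzDataFactoryV2IntegrationRuntimeKey`.

```python
import json

# Hypothetical name for the self-hosted integration runtime.
IR_NAME = "OnPremSelfHostedIR"

# Factory-side definition only: the runtime still has to be installed
# on an on-premises machine and registered with an authentication key.
self_hosted_ir = {
    "name": IR_NAME,
    "properties": {
        "type": "SelfHosted",
        "description": "Runtime installed on the on-premises network",
    },
}

print(json.dumps(self_hosted_ir, indent=2))
```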

Step 3: Configure a linked service to connect to the SQL Server instance.
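The Python below is a hedged sketch of Step 3. It builds the JSON for a SQL Server linked service whose `connectVia` block routes the connection through a self-hosted integration runtime. The server, database, and runtime names are hypothetical, and the credentials are placeholders. In practice, you would typically keep the password in Azure Key Vault instead of storing it inline.

```python
import json


def sql_server_linked_service(name: str, server: str, database: str, ir_name: str) -> dict:
    """SQL Server linked service that connects through a self-hosted integration runtime."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {
                # SQL authentication with placeholder credentials (illustration only).
                "connectionString": (
                    f"Data Source={server};Initial Catalog={database};"
                    "Integrated Security=False;User ID=<username>;Password=<password>;"
                )
            },
            # connectVia sends traffic through the on-premises runtime instead of the Azure IR.
            "connectVia": {"referenceName": ir_name, "type": "IntegrationRuntimeReference"},
        },
    }


# Hypothetical names for illustration.
linked_service = sql_server_linked_service(
    "OnPremSqlServer", "sqlhost01", "SalesDb", "OnPremSelfHostedIR"
)
print(json.dumps(linked_service, indent=2))
```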

References:

https://docs.microsoft.com/en-us/azure/data-factory/connector-sql-server

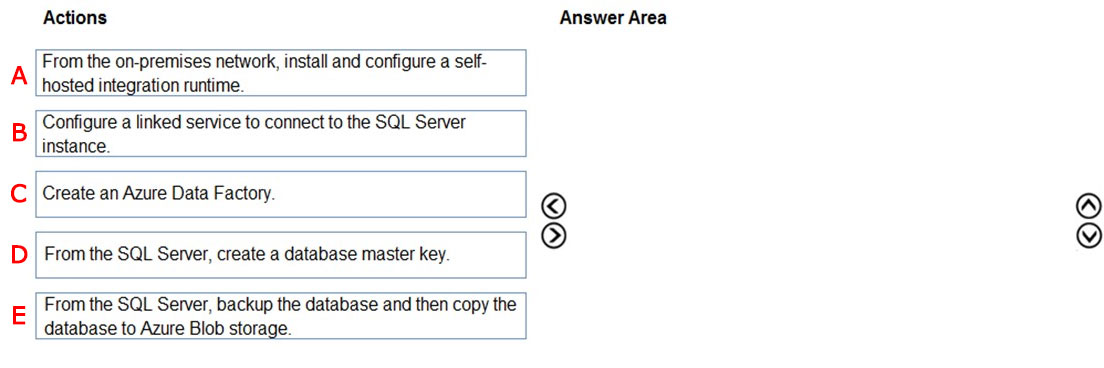

Your company has an on-premises Microsoft SQL Server instance.

The data engineering team plans to implement a process that copies data from the SQL Server instance to Azure Blob storage once a day. The process must orchestrate and manage the data lifecycle.

You need to create an Azure Data Factory instance that connects to the SQL Server instance.

Which three actions should you perform in sequence?

A - B - C

B - A - D

E - C - B

C - D - A

D - E - C

C - A - B

B - C - E

E - B - A

Answer is C - A - B

Step 1: Create an Azure Data Factory

You need to create a data factory and start the Data Factory UI to create a pipeline in the data factory.

Step 2: From the on-premises network, install and configure a self-hosted integration runtime.

To copy data from a SQL Server database that isn't publicly accessible, you need to set up a self-hosted integration runtime.

Step 3: Configure a linked service to connect to the SQL Server instance.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/connector-sql-server

https://www.mssqltips.com/sqlservertip/5812/connect-to-onpremises-data-in-azure-data-factory-with-the-selfhosted-integration-runtime--part-1/

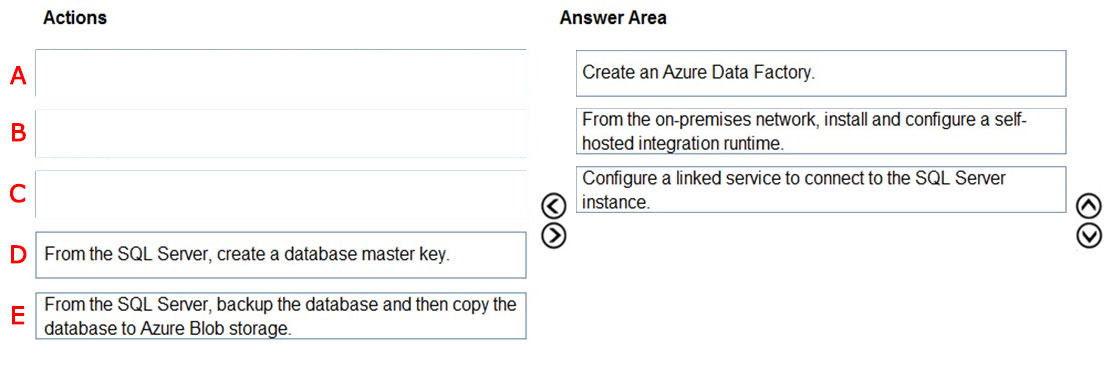

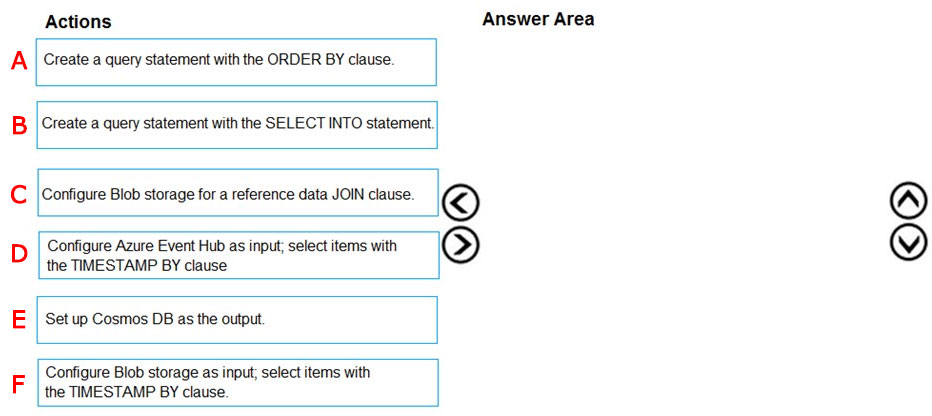

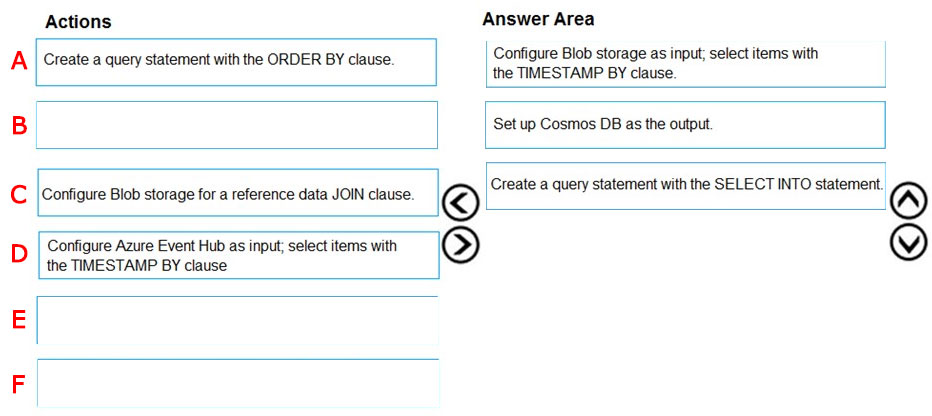

You implement an event processing solution using Microsoft Azure Stream Analytics.

The solution must meet the following requirements:

- Ingest data from Blob storage

- Analyze data in real time

- Store processed data in Azure Cosmos DB

Which three actions should you perform in sequence?

A-B-C

B-C-E

C-D-B

D-B-A

E-C-B

F-E-B

A-E-B

B-D-F

Answer is F-E-B

Step 1: Configure Blob storage as input; select items with the TIMESTAMP BY clause

The default timestamp of Blob storage events in Stream Analytics is the timestamp that the blob was last modified, which is BlobLastModifiedUtcTime. To process the data as a stream using a timestamp in the event payload, you must use the TIMESTAMP BY keyword.
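The difference between the default timestamp and TIMESTAMP BY can be shown with the conceptual Python model below. It does not use the Stream Analytics engine, and `BlobEvent` and `application_time` are hypothetical names. In the model, events read from one blob share the blob's last-modified time unless a payload field is chosen as the application time.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class BlobEvent:
    payload: dict
    blob_last_modified_utc: datetime  # stands in for BlobLastModifiedUtcTime


def application_time(event: BlobEvent, timestamp_by: Optional[str] = None) -> datetime:
    """Without TIMESTAMP BY, use the blob's last-modified time; otherwise read the payload field."""
    if timestamp_by is None:
        return event.blob_last_modified_utc
    return datetime.fromisoformat(event.payload[timestamp_by])


modified = datetime(2024, 1, 1, 12, 0, 0)
events = [
    BlobEvent({"TollId": 1, "EntryTime": "2024-01-01T09:15:00"}, modified),
    BlobEvent({"TollId": 2, "EntryTime": "2024-01-01T09:05:00"}, modified),
]

# Default: both events get the same time (the blob's modification time).
print([application_time(e) for e in events])

# TIMESTAMP BY EntryTime: events are ordered by when they actually happened.
ordered = sorted(events, key=lambda e: application_time(e, "EntryTime"))
print([e.payload["TollId"] for e in ordered])  # [2, 1]
```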

Example:

The following is a TIMESTAMP BY example which uses the EntryTime column as the application time for events:

SELECT TollId, EntryTime AS VehicleEntryTime, LicensePlate, State, Make, Model, VehicleType, VehicleWeight, Toll, Tag FROM TollTagEntry TIMESTAMP BY EntryTime

Step 2: Set up Cosmos DB as the output

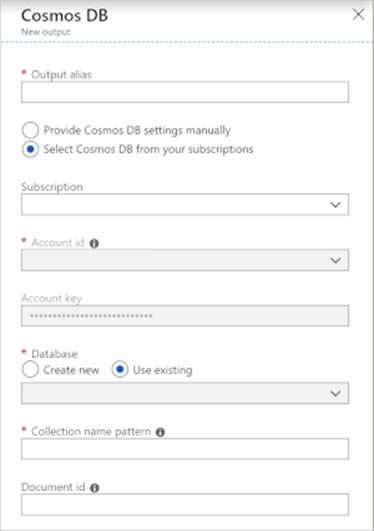

When you add Cosmos DB as an output in Stream Analytics, you are prompted for the connection information, as shown in the image above.

Step 3: Create a query statement with the SELECT INTO statement.
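The three steps can be combined into one query, sketched below as a Python helper that assembles the query text. The INTO clause names the Cosmos DB output and sits between the SELECT list and FROM. The input alias comes from the example above, and the output alias `CosmosOutput` is hypothetical. In a real job, both aliases must match the names you gave the input and output.

```python
def build_asa_query(input_alias: str, output_alias: str, timestamp_field: str) -> str:
    """Compose SELECT ... INTO <output> FROM <input> TIMESTAMP BY <field>."""
    return (
        "SELECT TollId, EntryTime AS VehicleEntryTime, LicensePlate, State, Make, Model,\n"
        "       VehicleType, VehicleWeight, Toll, Tag\n"
        f"INTO [{output_alias}]\n"
        f"FROM [{input_alias}] TIMESTAMP BY {timestamp_field}"
    )


query = build_asa_query("TollTagEntry", "CosmosOutput", "EntryTime")
print(query)
```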

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-define-inputs

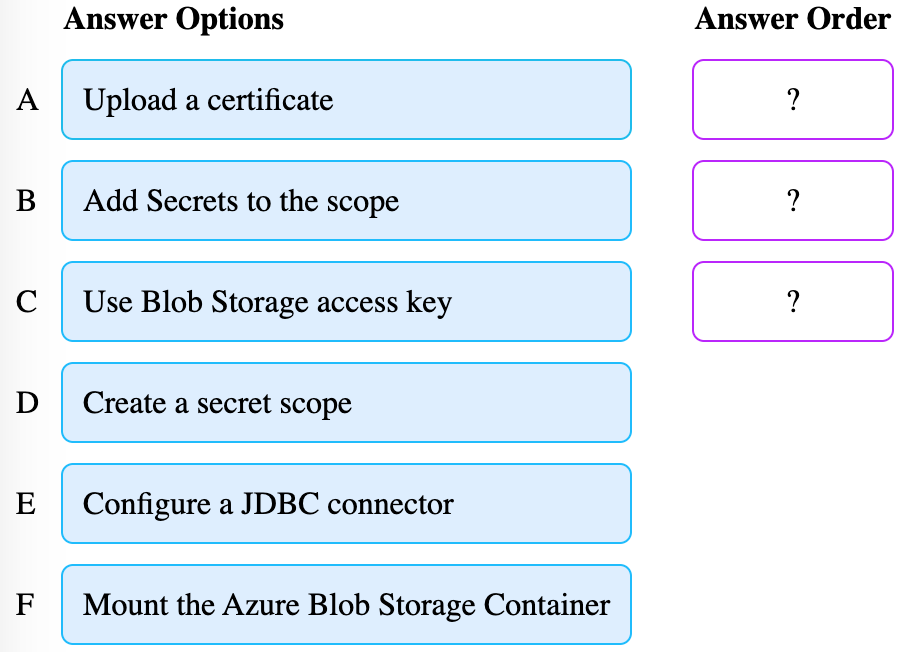

You manage the Microsoft Azure Databricks environment for a company. You must be able to access a private Azure Blob Storage account. Data must be available to all Azure Databricks workspaces. You need to provide the data access.

Which three actions should you perform in sequence?

A-B-C

B-A-E

C-D-B

D-B-F

E-F-A

F-E-B

A-E-B

B-D-F

Step 1: Create a secret scope

Step 2: Add secrets to the scope

Note: dbutils.secrets.get(scope = "

Step 3: Mount the Azure Blob Storage container

You can mount a Blob Storage container, or a folder inside a container, through the Databricks File System (DBFS). The mount is a pointer to a Blob Storage container, so the data is never synced locally.
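The Python below is a minimal sketch of Step 3. It mounts the private container by reading the storage account key from the secret scope with `dbutils.secrets.get`, so the key is never written into the notebook. In a Databricks notebook, `dbutils` is provided by the runtime. The `StubDbutils` class is a hypothetical local stand-in so the function can be tried outside Databricks. The account, container, scope, key, and mount point names are also hypothetical.

```python
def mount_blob_container(dbutils, account: str, container: str,
                         scope: str, key: str, mount_point: str) -> str:
    """Mount a private Blob Storage container on DBFS using a key from a secret scope."""
    source = f"wasbs://{container}@{account}.blob.core.windows.net"
    # The account key is looked up in the secret scope, never hard-coded.
    conf_key = f"fs.azure.account.key.{account}.blob.core.windows.net"
    dbutils.fs.mount(
        source=source,
        mount_point=mount_point,
        extra_configs={conf_key: dbutils.secrets.get(scope=scope, key=key)},
    )
    return source


# Local stand-in for dbutils (hypothetical); not needed inside Databricks.
class _StubFs:
    def __init__(self):
        self.mounts = []

    def mount(self, source, mount_point, extra_configs):
        self.mounts.append((source, mount_point, extra_configs))


class _StubSecrets:
    def get(self, scope, key):
        return f"<secret {scope}/{key}>"


class StubDbutils:
    def __init__(self):
        self.fs = _StubFs()
        self.secrets = _StubSecrets()


stub = StubDbutils()
src = mount_blob_container(stub, "mystorageacct", "raw", "blob-scope", "storage-key", "/mnt/raw")
print(src)
```

In a notebook, you would call `mount_blob_container(dbutils, ...)` once. Clusters in the workspace can then read the data through the mount point (here `/mnt/raw`).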

References:

https://docs.databricks.com/spark/latest/data-sources/azure/azure-storage.html

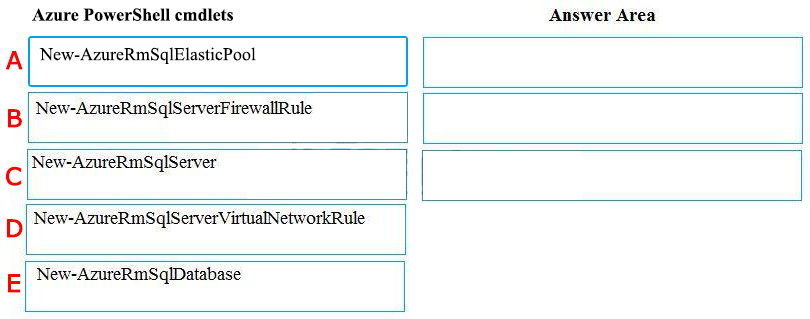

You plan to create a new single database instance of Microsoft Azure SQL Database.

The database must only allow communication from the data engineer's workstation. You must connect directly to the instance by using Microsoft SQL Server Management Studio.

You need to create and configure the database. Which three Azure PowerShell cmdlets should you use to develop the solution, in the correct order?

A-B-C

B-A-D

C-B-E

D-A-C

E-B-A

Answer is C-B-E

Step 1: New-AzureRmSqlServer

Create a server.

Step 2: New-AzureRmSqlServerFirewallRule

New-AzureRmSqlServerFirewallRule creates a firewall rule for a SQL Database server.

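It can be used to create a server firewall rule that allows access from the specified IP range. Setting the start and end addresses to the workstation's IP address limits access to that single workstation.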

Step 3: New-AzureRmSqlDatabase

Example: Create a database on a specified server

PS C:\> New-AzureRmSqlDatabase -ResourceGroupName "ResourceGroup01" -ServerName "Server01" -DatabaseName "Database01"
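To make the ordering and the single-address firewall rule explicit, the Python sketch below emits the three cmdlets in the answer's order. It assumes that `$cred` is a PSCredential created beforehand (for example with `Get-Credential`), and that the location `westeurope`, the rule name `EngineerWorkstation`, and the documentation-range IP address are hypothetical. Note that the AzureRM module is deprecated. In the current Az module, the equivalents are `New-AzSqlServer`, `New-AzSqlServerFirewallRule`, and `New-AzSqlDatabase`.

```python
import ipaddress
from typing import List


def sql_setup_commands(resource_group: str, server: str, database: str,
                       location: str, workstation_ip: str) -> List[str]:
    """Return the three AzureRM cmdlets in the order used by the answer."""
    ip = str(ipaddress.ip_address(workstation_ip))  # raises ValueError if malformed
    return [
        # 1. The logical server must exist before rules or databases are added.
        f'New-AzureRmSqlServer -ResourceGroupName "{resource_group}" -ServerName "{server}" '
        f'-Location "{location}" -SqlAdministratorCredentials $cred',
        # 2. Same start and end address: only the engineer's workstation is allowed.
        f'New-AzureRmSqlServerFirewallRule -ResourceGroupName "{resource_group}" '
        f'-ServerName "{server}" -FirewallRuleName "EngineerWorkstation" '
        f'-StartIpAddress "{ip}" -EndIpAddress "{ip}"',
        # 3. Create the single database on that server.
        f'New-AzureRmSqlDatabase -ResourceGroupName "{resource_group}" -ServerName "{server}" '
        f'-DatabaseName "{database}"',
    ]


for cmd in sql_setup_commands("ResourceGroup01", "Server01", "Database01",
                              "westeurope", "203.0.113.25"):
    print(cmd)
```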

References:

https://docs.microsoft.com/en-us/azure/sql-database/scripts/sql-database-create-and-configure-database-powershell?toc=%2fpowershell%2fmodule%2ftoc.json

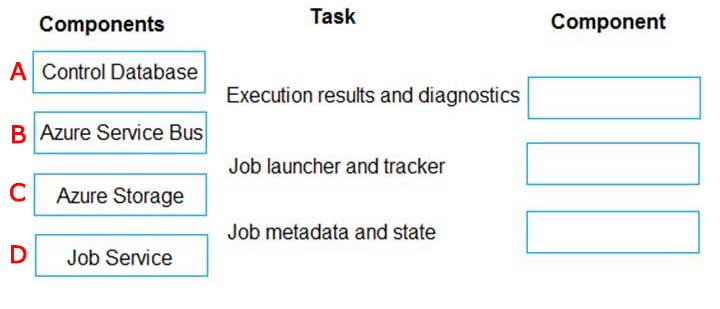

Your company uses Microsoft Azure SQL Database configured with Elastic pools. You use Elastic Database jobs to run queries across all databases in the pool.

You need to analyze, troubleshoot, and report on components responsible for running Elastic Database jobs.

You need to determine the component responsible for running job service tasks.

Which components should you use for each Elastic pool job services task?

A-B-D

B-C-D

C-D-A

D-A-C

A-C-D

B-D-A

C-A-B

D-B-A

Answer is C-D-A

Execution results and diagnostics: Azure Storage

Job launcher and tracker: Job Service

Job metadata and state: Control database

The Job database (the control database in the answer above) is used to define jobs and to track the status and history of job executions. It also stores agent metadata, logs, results, and job definitions. In addition, it contains many useful stored procedures and other database objects for creating, running, and managing jobs by using T-SQL.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-job-automation-overview

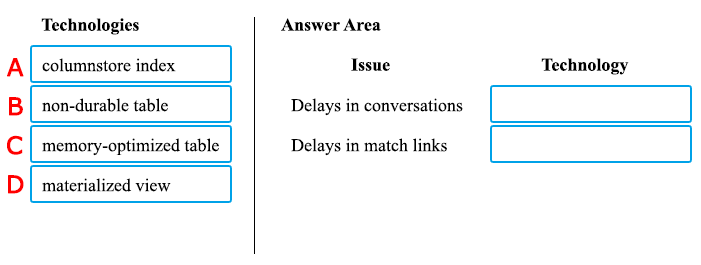

A company builds an application to allow developers to share and compare code. The conversations, code snippets, and links shared by people in the application are stored in a Microsoft Azure SQL Database instance. The application allows for searches of historical conversations and code snippets.

When users share code snippets, each snippet is compared against previously shared code snippets by using a combination of Transact-SQL functions, including SUBSTRING, FIRST_VALUE, and SQRT. If a match is found, a link to the match is added to the conversation.

Customers report the following issues:

- Delays occur during live conversations

- A delay occurs before matching links appear after code snippets are added to conversations

You need to resolve the performance issues.

Which technologies should you use?

A-B

A-C

A-D

B-C

B-D

C-D

D-A

B-A

Answer is C-D

Box 1: memory-optimized table

In-Memory OLTP can provide great performance benefits for transaction processing, data ingestion, and transient data scenarios.

Box 2: materialized view

To support efficient querying, a common solution is to generate, in advance, a view that materializes the data in a format suited to the required results set. The Materialized View pattern describes generating prepopulated views of data in environments where the source data isn't in a suitable format for querying, where generating a suitable query is difficult, or where query performance is poor due to the nature of the data or the data store.

These materialized views, which only contain data required by a query, allow applications to quickly obtain the information they need. In addition to joining tables or combining data entities, materialized views can include the current values of calculated columns or data items, the results of combining values or executing transformations on the data items, and values specified as part of the query. A materialized view can even be optimized for just a single query.
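The Python below is a conceptual model of the Materialized View pattern and is not Azure SQL Database code. It maintains a precomputed lookup when each snippet is stored, so that finding matches becomes a lookup rather than a recomputation of the SUBSTRING, FIRST_VALUE, and SQRT comparisons over all historical snippets. The `fingerprint` function is a hypothetical stand-in for that comparison logic.

```python
from collections import defaultdict
from typing import Dict, List


def fingerprint(snippet: str) -> str:
    """Hypothetical normalisation: collapse whitespace so reformatted copies match."""
    return " ".join(snippet.split())


class SnippetMatchView:
    """Precomputed fingerprint -> snippet ids, updated on every write."""

    def __init__(self) -> None:
        self._by_fingerprint: Dict[str, List[int]] = defaultdict(list)

    def add(self, snippet_id: int, snippet: str) -> List[int]:
        """Return ids of earlier matching snippets, then record the new one."""
        key = fingerprint(snippet)
        matches = list(self._by_fingerprint[key])  # copy before appending
        self._by_fingerprint[key].append(snippet_id)
        return matches


view = SnippetMatchView()
view.add(1, "print('hi')")
view.add(2, "x = 1")
print(view.add(3, "print('hi')  "))  # [1]
```

The design trade-off is the same one the pattern describes. Each write does a little extra work to keep the view current, and in exchange the read path that users wait on stays cheap.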

References:

https://docs.microsoft.com/en-us/azure/architecture/patterns/materialized-view