DP-203: Data Engineering on Microsoft Azure

General Overview

Litware, Inc. is an international car racing and manufacturing company that has 1,000 employees. Most employees are located in Europe. The company supports racing teams that compete in a worldwide racing series.

Physical Locations

Litware has two main locations: a main office in London, England, and a manufacturing plant in Berlin, Germany. During each race weekend, 100 engineers set up a remote portable office by using a VPN to connect to the datacentre in the London office. The portable office is set up and torn down in approximately 20 different countries each year.

Existing environment

Race Central

During race weekends, Litware uses a primary application named Race Central. Each car has several sensors that send real-time telemetry data to the London datacentre. The data is used for real-time tracking of the cars.

Race Central also sends batch updates to an application named Mechanical Workflow by using Microsoft SQL Server Integration Services (SSIS).

The telemetry data is sent to a MongoDB database. A custom application then moves the data to databases in SQL Server 2017. The telemetry data in MongoDB has more than 500 attributes. The application changes the attribute names when the data is moved to SQL Server 2017.

The database structure contains both OLAP and OLTP databases.

Mechanical Workflow

Mechanical Workflow is used to track changes and improvements made to the cars during their lifetime.

Currently, Mechanical Workflow runs on SQL Server 2017 as an OLAP system.

Mechanical Workflow has a table named Table1 that is 1 TB. Large aggregations are performed on a single column of Table1.

Requirements

Planned Changes

Litware is in the process of rearchitecting its data estate to be hosted in Azure. The company plans to decommission the London datacentre and move all its applications to an Azure datacentre.

Technical Requirements

Litware identifies the following technical requirements:

- Data collection for Race Central must be moved to Azure Cosmos DB and Azure SQL Database. The data must be written to the Azure datacentre closest to each race and must converge in the least amount of time.

- The query performance of Race Central must be stable, and the administrative time it takes to perform optimizations must be minimized.

- The datacentre for Mechanical Workflow must be moved to Azure SQL Data Warehouse.

- Transparent data encryption (TDE) must be enabled on all data stores, whenever possible.

- An Azure Data Factory pipeline must be used to move data from Cosmos DB to SQL Database for Race Central. If the data load takes longer than 20 minutes, configuration changes must be made to Data Factory.

- The telemetry data must migrate toward a solution that is native to Azure.

- The telemetry data must be monitored for performance issues. You must adjust the Cosmos DB Request Units per second (RU/s) to maintain a performance SLA while minimizing the cost of the RU/s.

During race weekends, visitors will be able to enter the remote portable offices. Litware is concerned that some proprietary information might be exposed. The company identifies the following data masking requirements for the Race Central data that will be stored in SQL Database:

- Only show the last four digits of the values in a column named SuspensionSprings.

- Only show a zero value for the values in a column named ShockOilWeight.

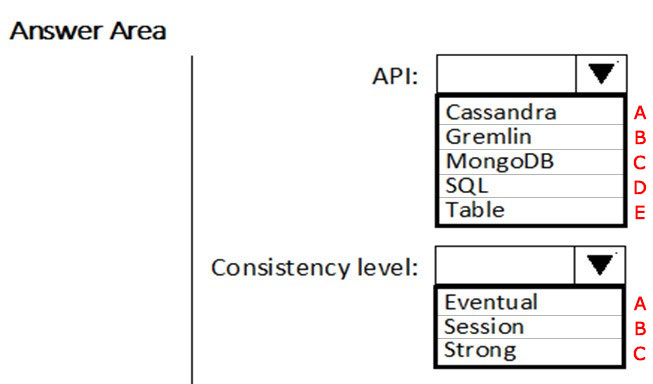

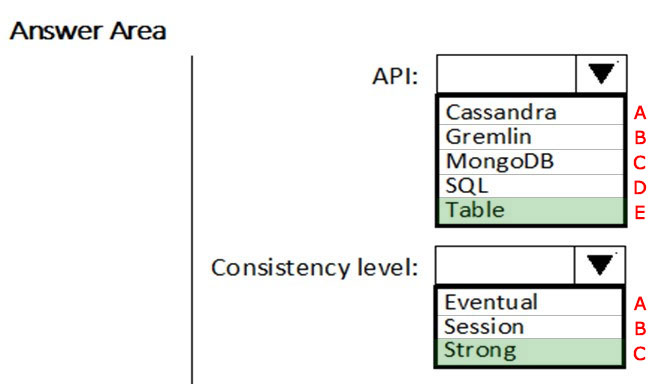

You need to build a solution to collect the telemetry data for Race Central.

What should you use?

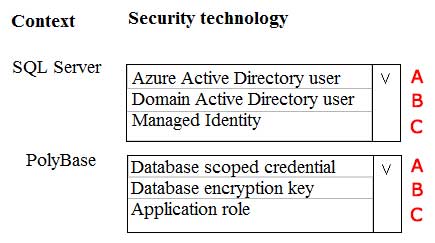

A - A

B - A

C - B

D - B

E - C

A - C

B - B

C - B

Answer is E - C

API: Table

Azure Cosmos DB provides native support for wire protocol-compatible APIs for popular databases. These include MongoDB, Apache Cassandra, Gremlin, and Azure Table storage.

Scenario: The telemetry data must migrate toward a solution that is native to Azure.

Consistency level: Strong

Use Strong, the strongest consistency level, to minimize convergence time.

Scenario: The data must be written to the Azure datacenter closest to each race and must converge in the least amount of time.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

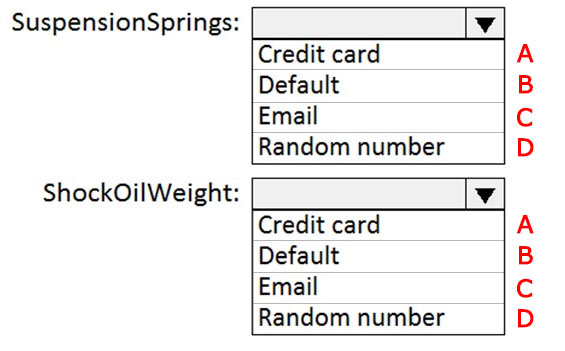

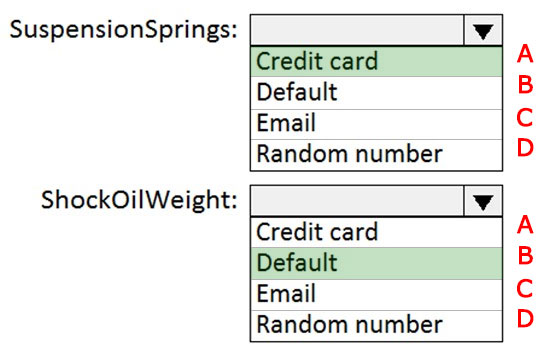

During race weekends, visitors will be able to enter the remote portable offices. Litware is concerned that some proprietary information might be exposed. The company identifies the following data masking requirements for the Race Central data that will be stored in SQL Database:

- Only show the last four digits of the values in a column named SuspensionSprings.

- Only show a zero value for the values in a column named ShockOilWeight.

Which masking functions should you implement for each column to meet the data masking requirements?

A - B

B - C

C - D

D - A

A - C

B - D

C - B

D - C

Answer is A - B

Box 1: Credit Card

The Credit Card Masking method exposes the last four digits of the designated fields and adds a constant string as a prefix in the form of a credit card.

Example: XXXX-XXXX-XXXX-1234

- Only show the last four digits of the values in a column named SuspensionSprings.

Box 2: Default

Default uses a zero value for numeric data types (bigint, bit, decimal, int, money, numeric, smallint, smallmoney, tinyint, float, real).

- Only show a zero value for the values in a column named ShockOilWeight.

Scenario:

The company identifies the following data masking requirements for the Race Central data that will be stored in SQL Database:

- Only show a zero value for the values in a column named ShockOilWeight.

- Only show the last four digits of the values in a column named SuspensionSprings.
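The behaviour of the two masks described above can be emulated in plain Python. This is only an illustrative sketch: it does not call Azure SQL Database, the function names are hypothetical, and the sample row values are made up for the example.

```python
from decimal import Decimal


def mask_credit_card(value: str) -> str:
    """Emulate the Credit Card mask: constant prefix plus the last four characters."""
    return "XXXX-XXXX-XXXX-" + value[-4:]


def mask_default_numeric(value) -> int:
    """Emulate the Default mask for numeric types: the stored value is hidden as zero."""
    return 0


# Hypothetical sample row; real column contents are not given in the scenario.
row = {"SuspensionSprings": "4111222233331234", "ShockOilWeight": Decimal("7.5")}

masked = {
    "SuspensionSprings": mask_credit_card(row["SuspensionSprings"]),
    "ShockOilWeight": mask_default_numeric(row["ShockOilWeight"]),
}
print(masked)
```

A non-privileged reader would see only the last four digits of SuspensionSprings and a zero for ShockOilWeight, which matches the two requirements.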

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview

Background

Proseware, Inc. develops and manages a product named Poll Taker. The product is used for delivering public opinion polling and analysis.

Polling data comes from a variety of sources, including online surveys, house-to-house interviews, and booths at public events.

Polling data

Polling data is stored in one of the two locations:

- An on-premises Microsoft SQL Server 2019 database named PollingData

- Azure Data Lake Gen 2. Data in Data Lake is queried by using PolyBase

Poll metadata

Each poll has associated metadata with information about the poll including the date and number of respondents. The data is stored as JSON.

Phone-based polling

Security

- Phone-based poll data must only be uploaded by authorized users from authorized devices

- Contractors must not have access to any polling data other than their own

- Access to polling data must be set on a per-Active Directory user basis

Data migration and loading

- All data migration processes must use Azure Data Factory

- All data migrations must run automatically during non-business hours

- Data migrations must be reliable and retry when needed

Performance

After six months, raw polling data should be moved to a storage account. The storage must be available in the event of a regional disaster. The solution must minimize costs.

Deployments

- All deployments must be performed by using Azure DevOps. Deployments must use templates used in multiple environments

- No credentials or secrets should be used during deployments

Reliability

All services and processes must be resilient to a regional Azure outage.

Monitoring

All Azure services must be monitored by using Azure Monitor. On-premises SQL Server performance must be monitored.

You need to ensure that phone-based polling data can be analyzed in the PollingData database.

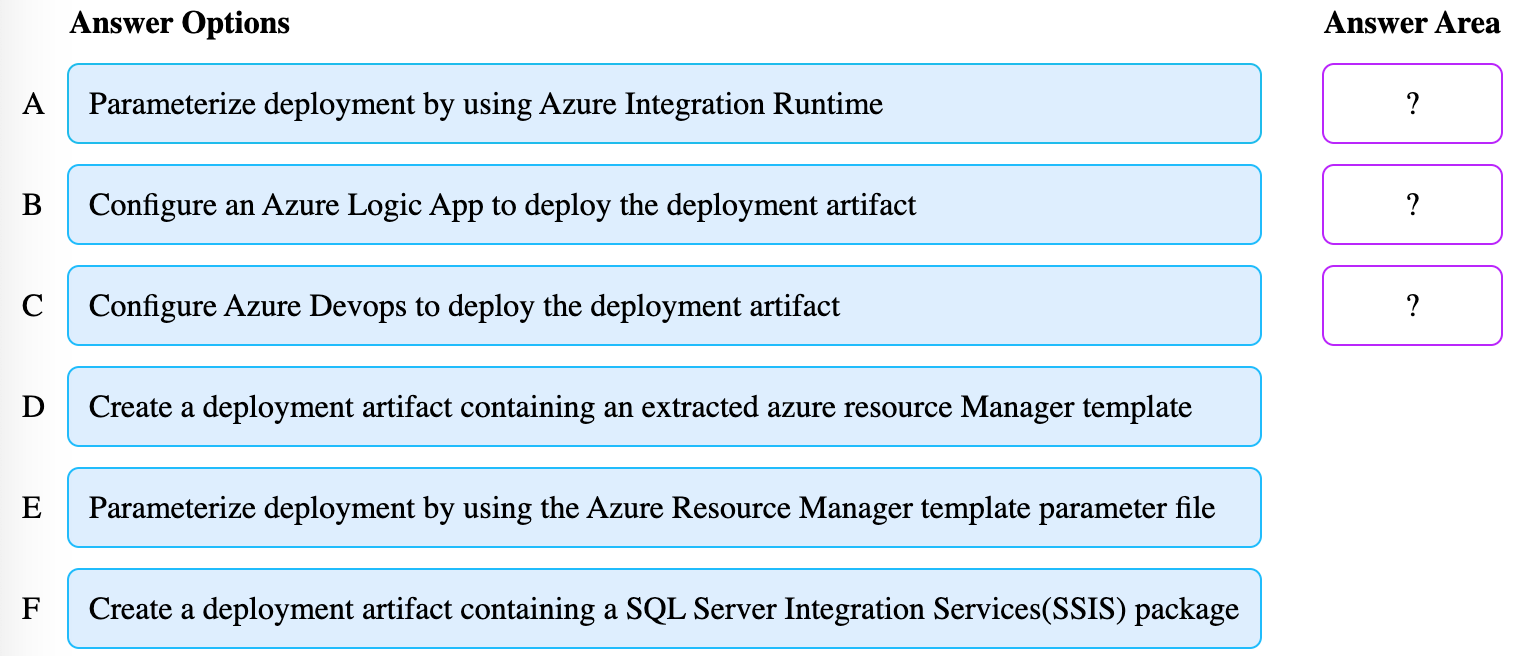

Which three actions should you perform in sequence?

A-B-C

B-A-E

C-D-B

D-E-C

E-F-A

F-E-B

A-E-B

B-D-F

Answer is D-E-C

All deployments must be performed by using Azure DevOps. Deployments must use templates used in multiple environments

No credentials or secrets should be used during deployments

1) Create a deployment artifact containing an extracted Azure Resource Manager template

Export the ARM template from the portal where Azure Data Factory is configured.

2) Parameterize deployment by using the Azure Resource Manager template parameter file

The exported ARM template has two parts. One of them is a parameter file that holds connections and other variables; set the environment-specific values there.

3) Configure Azure DevOps to deploy the deployment artifact
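Step 2 can be sketched as generating one parameter file per environment from the same template. The snippet below is a minimal illustration, assuming a single template parameter called `factoryName`; the factory names and the helper `build_parameter_file` are hypothetical, and no secrets are placed in the file.

```python
import json

# Schema URI used by ARM deployment parameter files.
SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"


def build_parameter_file(values: dict) -> dict:
    """Wrap plain name/value pairs in the ARM parameter-file structure."""
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


# Hypothetical per-environment values; only non-secret settings belong here.
environments = {
    "test": {"factoryName": "adf-polltaker-test"},
    "prod": {"factoryName": "adf-polltaker-prod"},
}

parameter_files = {
    env: build_parameter_file(values) for env, values in environments.items()
}
print(json.dumps(parameter_files["test"], indent=2))
```

The same template is then deployed to each environment with its own parameter file, which is what lets one artifact serve multiple environments.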

Reference:

https://www.youtube.com/watch?v=WhUAX8YxxLk

Current environment

Contoso relies on an extensive partner network for marketing, sales, and distribution. Contoso uses external companies that manufacture everything from the actual pharmaceutical to the packaging.

The majority of the company’s data resides in Microsoft SQL Server databases. Application databases fall into one of the following tiers:

The company has a reporting infrastructure that ingests data from local databases and partner services. Partner services consist of distributors, wholesalers, and retailers across the world. The company performs daily, weekly, and monthly reporting.

Requirements

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Tier 7 and Tier 8 partner access must be restricted to the database only.

In addition to default Azure backup behavior, Tier 4 and 5 databases must be on a backup strategy that performs a transaction log backup every hour, a differential backup of databases every day, and a full backup every week.

Backup strategies must be put in place for all other standalone Azure SQL Databases using Azure SQL-provided backup storage and capabilities.

Databases

Contoso requires their data estate to be designed and implemented in the Azure Cloud. Moving to the cloud must not inhibit access to or availability of data.

Databases:

Tier 1 Database must implement data masking using the following masking logic:

Tier 2 databases must sync between branches and cloud databases and in the event of conflicts must be set up for conflicts to be won by on-premises databases.

Tier 3 and Tier 6 through Tier 8 applications must use database density on the same server and Elastic pools in a cost-effective manner.

Applications must still have access to data from both internal and external applications keeping the data encrypted and secure at rest and in transit.

A disaster recovery strategy must be implemented for Tier 3 and Tier 6 through 8 allowing for failover in the case of a server going offline.

Selected internal applications must have the data hosted in single Microsoft Azure SQL Databases.

- Tier 1 internal applications on the premium P2 tier

- Tier 2 internal applications on the standard S4 tier

Security

A method of managing multiple databases in the cloud at the same time must be implemented to streamline data management and limit management access to only those requiring access.

Monitoring

Monitoring must be set up on every database. Contoso and partners must receive performance reports as part of contractual agreements.

Tiers 6 through 8 must have unexpected resource storage usage immediately reported to data engineers.

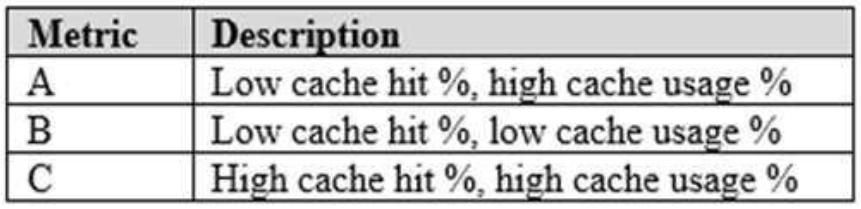

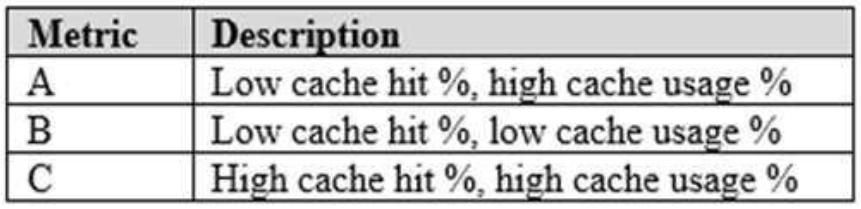

The Azure SQL Data Warehouse cache must be monitored when the database is being used. A dashboard monitoring key performance indicators (KPIs) indicated by traffic lights must be created and displayed based on the following metrics:

Existing Data Protection and Security compliances require that all certificates and keys are internally managed in an on-premises storage.

You identify the following reporting requirements:

- Azure Data Warehouse must be used to gather and query data from multiple internal and external databases

- Azure Data Warehouse must be optimized to use data from a cache

- Reporting data aggregated for external partners must be stored in Azure Storage and be made available during regular business hours in the connecting regions

- Reporting strategies must be improved to real time or near real time reporting cadence to improve competitiveness and the general supply chain

- Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company’s main office

- Tier 10 reporting data must be stored in Azure Blobs

Issues

Team members identify the following issues:

- Both internal and external client applications run complex joins, equality searches and group-by clauses. Because some systems are managed externally, the queries will not be changed or optimized by Contoso

- External partner organization data formats, types and schemas are controlled by the partner companies

- Internal and external database development staff resources are primarily SQL developers familiar with the Transact-SQL language.

- Size and amount of data have led to applications and reporting solutions not performing at required speeds

- Tier 7 and 8 data access is constrained to single endpoints managed by partners for access

- The company maintains several legacy client applications. Data for these applications remains isolated from other applications. This has led to hundreds of databases being provisioned on a per application basis.

Which three actions should you perform in sequence?

A-B-D

B-D-E

C-D-E

D-E-F

E-B-A

F-C-B

A-E-B

B-D-F

Answer is B-D-E

Tier 7 and 8 data access is constrained to single endpoints managed by partners for access

Step 1: Set the Allow Azure Services to Access Server setting to Disabled

Set Allow access to Azure services to OFF for the most secure configuration.

By default, access through the SQL Database firewall is enabled for all Azure services, under Allow access to Azure services. Choose OFF to disable access for all Azure services.

Note: The firewall pane has an ON/OFF button that is labeled Allow access to Azure services. The ON setting allows communications from all Azure IP addresses and all Azure subnets. These Azure IPs or subnets might not be owned by you. This ON setting is probably more open than you want your SQL Database to be.

The virtual network rule feature offers much finer granular control.

Step 2: In the Azure portal, create a server firewall rule

Set up SQL Database server firewall rules

Server-level IP firewall rules apply to all databases within the same SQL Database server.

To set up a server-level firewall rule:

1. In the Azure portal, select SQL databases from the left-hand menu, and select your database on the SQL databases page.

2. On the Overview page, select Set server firewall. The Firewall settings page for the database server opens.

Step 3: Connect to the database and use Transact-SQL to create a database firewall rule

Database-level firewall rules can only be configured using Transact-SQL (T-SQL) statements, and only after you've configured a server-level firewall rule.

To set up a database-level firewall rule:

1. Connect to the database, for example using SQL Server Management Studio.

2. In Object Explorer, right-click the database and select New Query.

3. In the query window, add this statement and modify the IP address to your public IP address:

- EXECUTE sp_set_database_firewall_rule N'Example DB Rule','0.0.0.4','0.0.0.4';

4. On the toolbar, select Execute to create the firewall rule.
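The statement in step 3 can also be generated programmatically, which makes it easier to validate the address range before running it. The helper below is a hypothetical sketch: it only builds the T-SQL text for `sp_set_database_firewall_rule` and does not connect to any database.

```python
import ipaddress


def database_firewall_rule_sql(name: str, start_ip: str, end_ip: str) -> str:
    """Build the T-SQL that creates or updates a database-level firewall rule."""
    # IPv4Address raises a ValueError subclass on malformed input.
    start = ipaddress.IPv4Address(start_ip)
    end = ipaddress.IPv4Address(end_ip)
    if start > end:
        raise ValueError("start_ip must not be greater than end_ip")
    # Double any single quotes so the rule name stays a valid T-SQL literal.
    safe_name = name.replace("'", "''")
    return (
        f"EXECUTE sp_set_database_firewall_rule N'{safe_name}', "
        f"'{start}', '{end}';"
    )


statement = database_firewall_rule_sql("Example DB Rule", "0.0.0.4", "0.0.0.4")
print(statement)
```

The generated text can then be pasted into the query window described in the steps above, with the placeholder address replaced by the partner endpoint's public IP.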

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-security-tutorial

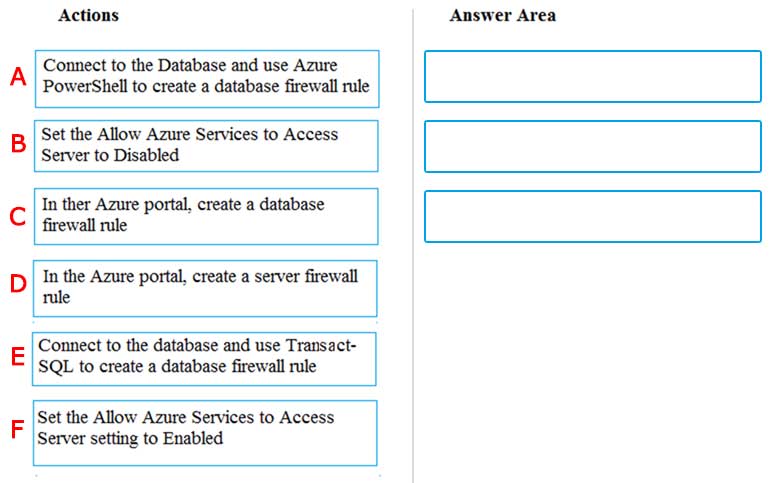

You need to ensure polling data security requirements are met.

Which security technologies should you use?

A-A

A-B

A-C

B-A

B-B

B-C

C-A

C-B

Answer is A-A

Box 1: Azure Active Directory user

Scenario:

Access to polling data must be set on a per-Active Directory user basis

Box 2: Database Scoped Credential

SQL Server uses a database scoped credential to access non-public Azure blob storage or Kerberos-secured Hadoop clusters with PolyBase.

PolyBase cannot authenticate by using Azure AD authentication.

References:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-database-scoped-credential-transact-sql

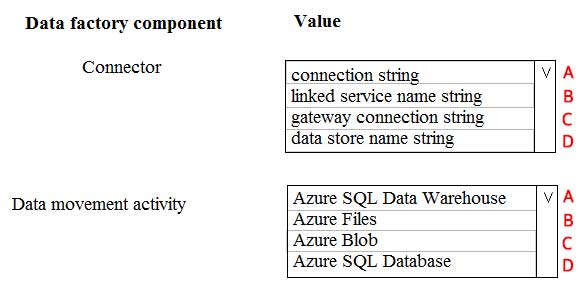

You need to set up the Azure Data Factory JSON definition for Tier 10 data.

What should you use? To answer, select the appropriate options in the answer area.

A-A

A-B

A-C

B-B

B-C

B-D

C-C

C-D

Answer is A-C

Box 1: Connection String

To use storage account key authentication, you use the connectionString property, which specifies the information needed to connect to Blob Storage.

Mark this field as a SecureString to store it securely in Data Factory. You can also put account key in Azure Key Vault and pull the accountKey configuration out of the connection string.

Box 2: Azure Blob

Tier 10 reporting data must be stored in Azure Blobs
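As a rough illustration of the two boxes, the sketch below assembles a linked service definition as a Python dictionary and prints it as JSON. The property names (`AzureBlobStorage`, `connectionString`, `SecureString`) are taken as assumptions from the connector documentation referenced below and should be checked against it; the linked service name, account name, and key placeholder are hypothetical.

```python
import json


def blob_linked_service(name: str, account_name: str, account_key: str) -> dict:
    """Assemble a Blob Storage linked service that authenticates with an account key."""
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        f"AccountKey={account_key}"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {
                # Marked as SecureString so Data Factory stores it securely.
                "connectionString": {
                    "type": "SecureString",
                    "value": connection_string,
                }
            },
        },
    }


# Placeholder values only; never commit a real account key.
definition = blob_linked_service("Tier10BlobStorage", "<accountname>", "<accountkey>")
print(json.dumps(definition, indent=2))
```

In practice the account key would more likely come from Azure Key Vault than from a literal value, as the explanation above notes.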

References:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-blob-storage

Overview

ADatum Corporation is a retailer that sells products through two sales channels: retail stores and a website.

Existing Environment

ADatum has one database server that has Microsoft SQL Server 2016 installed. The server hosts three mission-critical databases named SALESDB, DOCDB, and REPORTINGDB.

SALESDB collects data from the stores and the website.

DOCDB stores documents that connect to the sales data in SALESDB. The documents are stored in two different JSON formats based on the sales channel.

REPORTINGDB stores reporting data and contains several columnstore indexes. A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Requirements

Planned Changes

ADatum plans to move the current data infrastructure to Azure. The new infrastructure has the following requirements:

- Migrate SALESDB and REPORTINGDB to an Azure SQL database.

- Migrate DOCDB to Azure Cosmos DB.

- The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytic process will perform aggregations that must be done continuously, without gaps, and without overlapping.

- As they arrive, all the sales documents in JSON format must be transformed into one consistent format.

- Azure Data Factory will replace the SSIS process of copying the data from SALESDB to REPORTINGDB.

The new Azure data infrastructure must meet the following technical requirements:

- Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

- SALESDB must be restorable to any given minute within the past three weeks.

- Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

- Missing indexes must be created automatically for REPORTINGDB.

- Disk IO, CPU, and memory usage must be monitored for SALESDB.

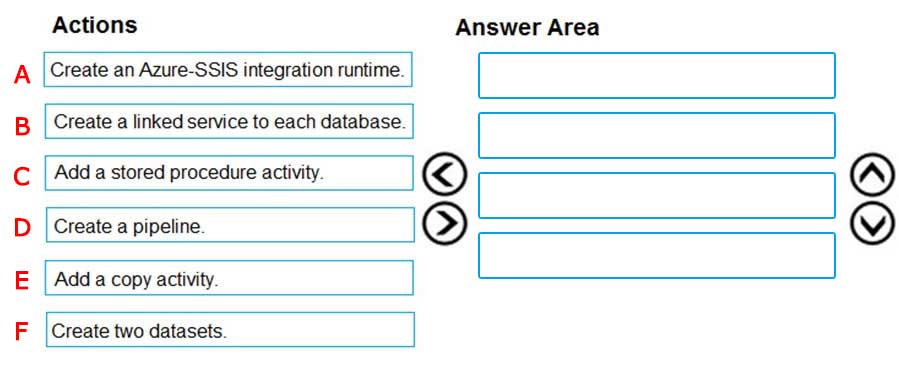

You need to replace the SSIS process by using Data Factory.

Which four actions should you perform in sequence?

A-B-F-D

B-F-D-E

C-D-E-F

D-E-A-B

E-A-B-F

F-D-E-A

A-B-E-F

B-D-F-E

Answer is B-F-D-E

Scenario: A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Step 1: Create a linked service to each database

Step 2: Create two datasets

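Create one dataset for the source and one for the sink. In the referenced quickstart these are InputDataset and OutputDataset, both of type AzureBlob, and they refer to the Azure Storage linked service created in its previous section. In this scenario the datasets would instead refer to the linked services for SALESDB and REPORTINGDB from Step 1.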

Step 3: Create a pipeline

You create and validate a pipeline with a copy activity that uses the input and output datasets.

Step 4: Add a copy activity
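Steps 3 and 4 can be pictured as a pipeline definition holding a single copy activity that points at the two datasets. The sketch below builds that structure as a Python dictionary; the pipeline, activity, dataset, and stored procedure names are all hypothetical, and the source and sink type names are assumptions based on the Azure SQL Database connector that should be verified before use.

```python
import json


def dataset_ref(name: str) -> dict:
    """Reference an existing Data Factory dataset by name."""
    return {"referenceName": name, "type": "DatasetReference"}


pipeline = {
    "name": "CopySalesToReporting",  # hypothetical pipeline name
    "properties": {
        "activities": [
            {
                "name": "CopyFromSalesDb",  # hypothetical activity name
                "type": "Copy",
                "inputs": [dataset_ref("SalesDbDataset")],
                "outputs": [dataset_ref("ReportingDbDataset")],
                "typeProperties": {
                    "source": {
                        "type": "AzureSqlSource",
                        # Hypothetical stand-in for the procedure the SSIS package ran.
                        "sqlReaderStoredProcedureName": "dbo.usp_BuildReportingData",
                    },
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ]
    },
}
print(json.dumps(pipeline, indent=2))
```

The order of the answer follows from the dependencies visible here: the copy activity needs datasets, and the datasets need linked services.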

References:

https://docs.microsoft.com/en-us/azure/data-factory/quickstart-create-data-factory-portal

Which counter should you monitor for real-time processing to meet the technical requirements?

Concurrent users

SU% Utilization

Data Conversion Errors

CPU % utilization

Answer is SU% Utilization

Scenario:

- Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

- The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics.

Streaming Units (SUs) represent the computing resources that are allocated to execute a Stream Analytics job. The higher the number of SUs, the more CPU and memory resources are allocated for your job. This capacity lets you focus on the query logic and abstracts the need to manage the hardware to run your Stream Analytics job in a timely manner. Monitoring SU % utilization therefore shows whether the job is sized appropriately for its actual workload.
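
As a rough illustration of how SU % utilization readings can inform sizing, the Python sketch below summarizes a series of samples and flags when the peak leaves too little headroom. It does not call any Azure API. The function name, the sample values, and the 80 percent threshold are assumptions chosen for this example; the threshold should be adjusted to your own alerting policy.

```python
from statistics import mean

# Assumed headroom threshold in percent; tune to your own alerting policy.
SCALE_UP_THRESHOLD = 80.0


def sizing_advice(su_utilization_samples, threshold=SCALE_UP_THRESHOLD):
    """Summarize SU % utilization samples and flag whether more SUs look necessary."""
    if not su_utilization_samples:
        raise ValueError("at least one utilization sample is required")
    peak = max(su_utilization_samples)
    return {
        "peak": peak,
        "average": mean(su_utilization_samples),
        # Flag the job when the busiest sample reaches the threshold.
        "needs_more_sus": peak >= threshold,
    }


if __name__ == "__main__":
    # Hypothetical readings collected over a monitoring period.
    print(sizing_advice([35.0, 52.5, 91.0]))
```

Using the peak rather than the average is a deliberate choice here: a job that is comfortable on average can still run out of resources during a burst of sales events.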

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-streaming-unit-consumption

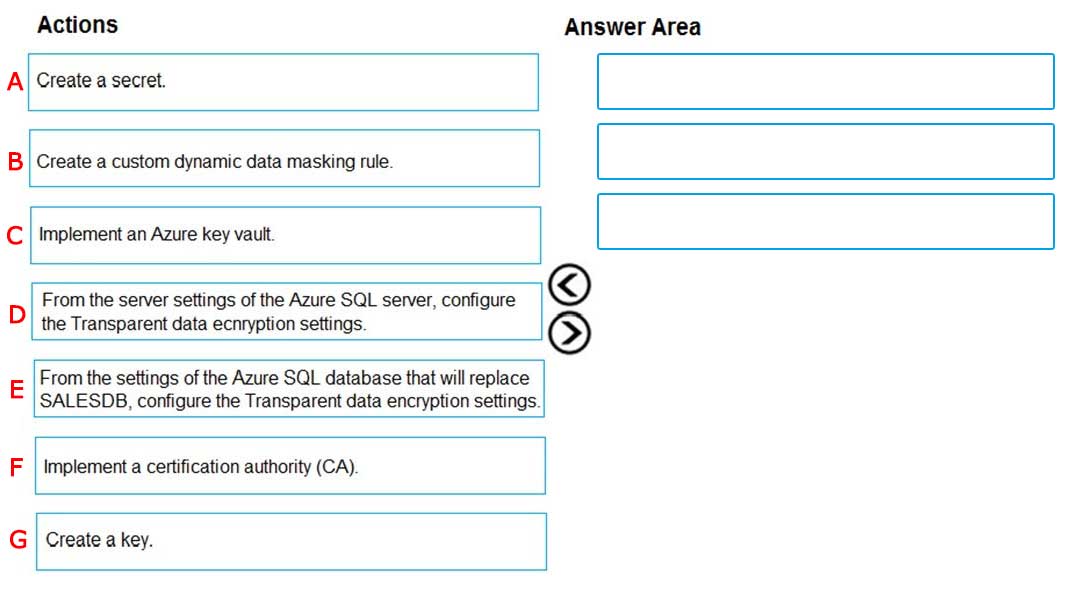

You need to implement the encryption for SALESDB. Which three actions should you perform in sequence?

A-B-C

B-C-D

C-G-E

D-E-F

E-C-B

F-A-B

G-F-B

A-D-F

Answer is C-G-E

Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

Step 1: Implement an Azure key vault

You must create an Azure key vault and a key to use for TDE.

Step 2: Create a key

Step 3: From the settings of the Azure SQL database

You turn transparent data encryption on and off on the database level.

Reference:

https://docs.microsoft.com/en-us/azure/sql-database/transparent-data-encryption-byok-azure-sql-configure

Contoso, Ltd. is a clothing retailer based in Seattle. The company has 2,000 retail stores across the United States and an emerging online presence.

The network contains an Active Directory forest named contoso.com. The forest is integrated with an Azure Active Directory (Azure AD) tenant named contoso.com. Contoso has an Azure subscription associated with the contoso.com Azure AD tenant.

Existing Environment

Transactional Data

Contoso has three years of customer, transactional, operational, sourcing, and supplier data comprised of 10 billion records stored across multiple on-premises Microsoft SQL Server servers. The SQL Server instances contain data from various operational systems. The data is loaded into the instances by using SQL Server Integration Services (SSIS) packages.

You estimate that combining all product sales transactions into a company-wide sales transactions dataset will result in a single table that contains 5 billion rows, with one row per transaction.

Most queries targeting the sales transactions data will be used to identify which products were sold in retail stores and which products were sold online during different time periods. Sales transaction data that is older than three years will be removed monthly.

You plan to create a retail store table that will contain the address of each retail store. The table will be approximately 2 MB. Queries for retail store sales will include the retail store addresses.

You plan to create a promotional table that will contain a promotion ID. The promotion ID will be associated with a specific product. The product will be identified by a product ID. The table will be approximately 5 GB.

Streaming Twitter Data

The ecommerce department at Contoso develops an Azure logic app that captures trending Twitter feeds referencing the company's products and pushes the products to Azure Event Hubs.

Planned Changes and Requirements

Planned Changes

Contoso plans to implement the following changes:

- Load the sales transaction dataset to Azure Synapse Analytics.

- Integrate on-premises data stores with Azure Synapse Analytics by using SSIS packages.

- Use Azure Synapse Analytics to analyze Twitter feeds to assess customer sentiments about products.

Sales Transaction Dataset Requirements

Contoso identifies the following requirements for the sales transaction dataset:

- Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by month. Boundary values must belong to the partition on the right.

- Ensure that queries joining and filtering sales transaction records based on product ID complete as quickly as possible.

- Implement a surrogate key to account for changes to the retail store addresses.

- Ensure that data storage costs and performance are predictable.

- Minimize how long it takes to remove old records.
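
The rule that boundary values belong to the partition on the right can be made concrete with a small Python sketch. It is not T-SQL and does not touch a SQL pool. It only shows which partition a date would land in when monthly boundaries follow that rule. The function name and the sample boundary dates are assumptions for illustration.

```python
from bisect import bisect_right
from datetime import date


def partition_number(value: date, boundaries: list) -> int:
    """Return the 1-based partition for value when each boundary belongs to the partition on its right.

    boundaries must be sorted in ascending order.
    """
    # bisect_right counts the boundaries that are <= value, so a value equal
    # to a boundary is pushed into the partition to the right of it.
    return bisect_right(boundaries, value) + 1


if __name__ == "__main__":
    # Hypothetical monthly boundaries: three boundaries define four partitions.
    monthly = [date(2021, 1, 1), date(2021, 2, 1), date(2021, 3, 1)]
    for d in (date(2020, 12, 31), date(2021, 1, 1), date(2021, 1, 31), date(2021, 2, 1)):
        print(d.isoformat(), "-> partition", partition_number(d, monthly))
```

With boundaries on the first day of each month, every partition holds exactly one calendar month, which is what makes monthly loads and the monthly removal of old records line up with whole partitions. Swapping `bisect_right` for `bisect_left` would model the opposite rule, where a boundary value belongs to the partition on its left.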

Customer Sentiment Analytics Requirements

Contoso identifies the following requirements for customer sentiment analytics:

- Allow Contoso users to use PolyBase in an Azure Synapse Analytics dedicated SQL pool to query the content of the data records that host the Twitter feeds.

- Data must be protected by using row-level security (RLS). The users must be authenticated by using their own Azure AD credentials.

- Maximize the throughput of ingesting Twitter feeds from Event Hubs to Azure Storage without purchasing additional throughput or capacity units.

- Store Twitter feeds in Azure Storage by using Event Hubs Capture. The feeds will be converted into Parquet files.

- Ensure that the data store supports Azure AD-based access control down to the object level.

- Minimize administrative effort to maintain the Twitter feed data records.

- Purge Twitter feed data records that are older than two years.

Data Integration Requirements

Contoso identifies the following requirements for data integration:

- Use an Azure service that leverages the existing SSIS packages to ingest on-premises data into datasets stored in a dedicated SQL pool of Azure Synapse Analytics and transform the data.

- Identify a process to ensure that changes to the ingestion and transformation activities can be version-controlled and developed independently by multiple data engineers.

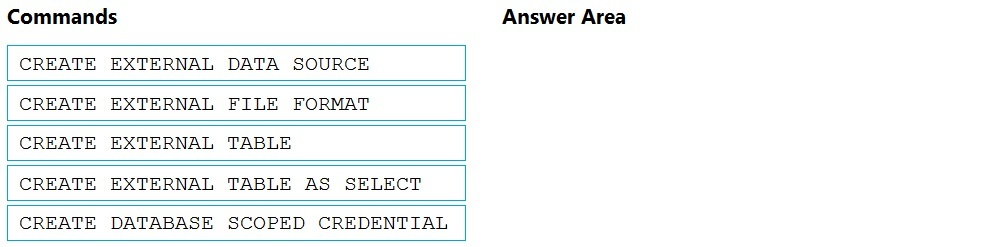

You need to ensure that the Twitter feed data can be analyzed in the dedicated SQL pool. The solution must meet the customer sentiment analytics requirements.

Which three Transact-SQL DDL commands should you run in sequence?

Scenario: Allow Contoso users to use PolyBase in an Azure Synapse Analytics dedicated SQL pool to query the content of the data records that host the Twitter feeds. Data must be protected by using row-level security (RLS). The users must be authenticated by using their own Azure AD credentials.

Box 1: CREATE EXTERNAL DATA SOURCE

External data sources are used to connect to storage accounts.

Box 2: CREATE EXTERNAL FILE FORMAT

CREATE EXTERNAL FILE FORMAT creates an external file format object that defines external data stored in Azure Blob Storage or Azure Data Lake Storage.

Creating an external file format is a prerequisite for creating an external table.

Box 3: CREATE EXTERNAL TABLE AS SELECT

When used in conjunction with the CREATE TABLE AS SELECT statement, selecting from an external table imports data into a table within the SQL pool. In addition to the COPY statement, external tables are useful for loading data.

Incorrect Answers:

CREATE EXTERNAL TABLE

The CREATE EXTERNAL TABLE command creates an external table for Synapse SQL to access data stored in Azure Blob Storage or Azure Data Lake Storage.

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-external-tables