DP-100: Designing and Implementing a Data Science Solution on Azure

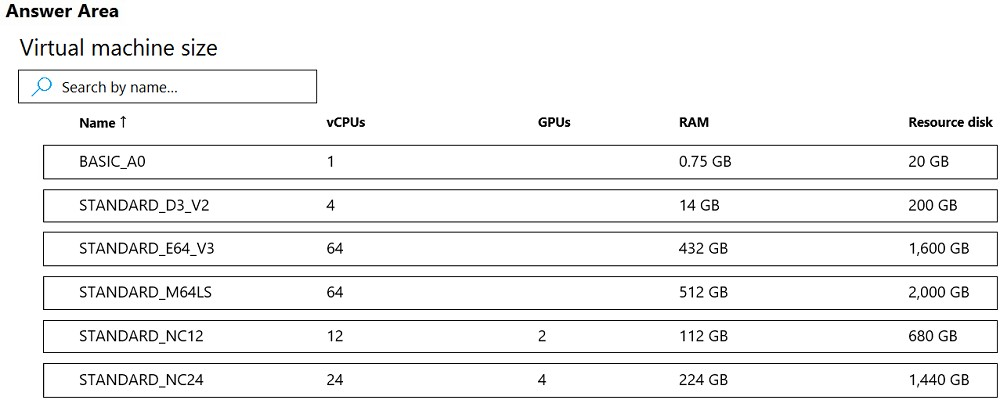

You are developing a deep learning model by using TensorFlow. You plan to run the model training workload on an Azure Machine Learning Compute Instance.

You must use CUDA-based model training.

You need to provision the Compute Instance.

Which two virtual machine sizes can you use?

BASIC_A0

STANDARD_D3_V2

STANDARD_E64_V3

STANDARD_M64LS

STANDARD_NC12

STANDARD_NC24

Answer is STANDARD_NC12 & STANDARD_NC24

NC-series virtual machines include NVIDIA GPUs and therefore support CUDA-based model training. CUDA is a parallel computing platform and programming model developed by NVIDIA for general computing on its own GPUs (graphics processing units). CUDA enables developers to speed up compute-intensive applications by harnessing the power of GPUs for the parallelizable part of the computation.
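As a rough illustration of the selection rule, the sketch below filters the candidate sizes from the question by series prefix. The helper name `is_cuda_capable` and the prefix rule (treating the NC and ND families as NVIDIA GPU sizes) are assumptions made for this example, not an Azure API. The authoritative list of GPU sizes is the Azure documentation.

```python
# Heuristic sketch: NC- and ND-series sizes carry NVIDIA GPUs, so CUDA can run on them.
# The prefix rule is an assumption for illustration, not an official Azure API.
CUDA_SERIES_PREFIXES = ("NC", "ND")


def is_cuda_capable(vm_size: str) -> bool:
    """Return True if the size name looks like an NVIDIA GPU (NC/ND) size."""
    parts = vm_size.upper().split("_")
    # Expected shape: <TIER>_<series+cores>[_<version>], e.g. STANDARD_NC12
    return len(parts) >= 2 and parts[1].startswith(CUDA_SERIES_PREFIXES)


candidates = [
    "BASIC_A0", "STANDARD_D3_V2", "STANDARD_E64_V3",
    "STANDARD_M64LS", "STANDARD_NC12", "STANDARD_NC24",
]
gpu_sizes = [size for size in candidates if is_cuda_capable(size)]
print(gpu_sizes)
```

Only the two NC sizes pass the filter, which matches the answer.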

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/concept-compute-target#compute-isolation

https://www.infoworld.com/article/3299703/what-is-cuda-parallel-programming-for-gpus.html

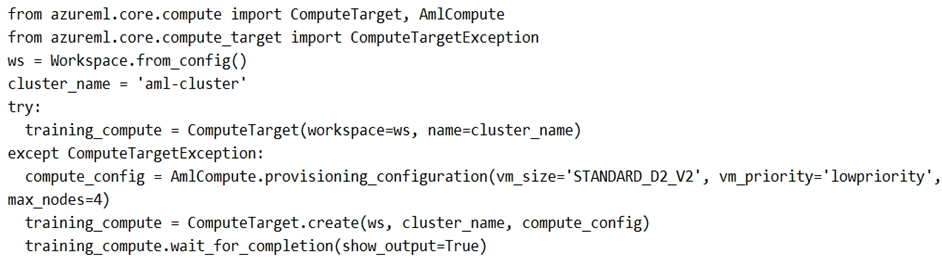

You are preparing to use the Azure ML SDK to run an experiment and need to create compute. You run the following code:

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Check the answer section

NO - The code first tries to get the existing compute target. Only if it does not exist is an exception raised, and a new compute target is then created. An existing compute target is not deleted and replaced.

NO - The wait_for_completion() method will return when the compute target is in the 'Succeeded' or 'Failed' provisioning state. It doesn't specifically wait for all four nodes to become active.

YES - The compute target is being created with 'lowpriority' VMs, which can be preempted if Azure needs the capacity.

NO - This code snippet does not include any code to delete the compute target after the training experiment completes.
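The code from the question is not reproduced in this text, so the following is only a sketch of the get-or-create pattern that the four answers describe, assuming the azureml-core (SDK v1) package. The function name `get_or_create_cluster`, the cluster name, and the `vm_size` value are hypothetical. The `lowpriority` setting and the four-node maximum are taken from the answers above.

```python
def get_or_create_cluster(ws, name="aml-cluster"):
    """Return the named compute target, creating it only if it is missing."""
    # Imported lazily so the sketch can be loaded without the SDK installed.
    from azureml.core.compute import AmlCompute, ComputeTarget
    from azureml.core.compute_target import ComputeTargetException

    try:
        # An existing target is reused as-is; nothing is deleted or replaced.
        return ComputeTarget(workspace=ws, name=name)
    except ComputeTargetException:
        config = AmlCompute.provisioning_configuration(
            vm_size="STANDARD_D2_V2",   # assumed size, not taken from the question
            vm_priority="lowpriority",  # low-priority nodes can be preempted
            max_nodes=4,
        )
        target = ComputeTarget.create(ws, name, config)
        # Returns once provisioning has succeeded or failed,
        # not when all four nodes are active.
        target.wait_for_completion(show_output=True)
        return target
```

Nothing in this pattern deletes the cluster afterwards. Removing it would need an explicit call such as `target.delete()`.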

Reference:

https://notebooks.azure.com/azureml/projects/azureml-getting-started/html/how-to-use-azureml/training/train-on-amlcompute/train-on-amlcompute.ipynb

https://docs.microsoft.com/en-us/python/api/azureml-core/azureml.core.compute.computetarget

You are using the Azure Machine Learning Python SDK, and you need to create a reference to the default datastore in your workspace.

You have written the following code:

--

from azureml.core import Workspace

#Get the workspace

ws = Workspace.from_config()

#Get the default datastore

--

Which line of code should you add?

default_ds = ws.get_default_datastore()

default_ds = ws.set_default_datastore()

default_ds = ws.datastores.get('default')

Answer is default_ds = ws.get_default_datastore()

Use the Workspace.get_default_datastore() method to get the default datastore.

You are using the Azure Machine Learning Python SDK to run experiments.

You need to create an environment from a Conda configuration (.yml) file.

Which method of the Environment class should you use?

create

create_from_conda_specification

create_from_existing_conda_environment

Answer is create_from_conda_specification

Use the create_from_conda_specification method to create an environment from a configuration file (in the azureml-core package, the Environment class exposes this as from_conda_specification). The create method requires you to explicitly specify conda and pip packages, and the create_from_existing_conda_environment method requires an existing environment on the computer.
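A minimal sketch of this workflow follows, assuming azureml-core (SDK v1), where the static method is spelled `Environment.from_conda_specification(name, file_path)`. The environment name, the package list in the specification, and both helper functions are hypothetical and only for illustration.

```python
import tempfile
from pathlib import Path

# Minimal Conda specification; the package list is an assumption for illustration.
CONDA_SPEC = """\
name: experiment_env
dependencies:
  - python=3.8
  - scikit-learn
  - pip
  - pip:
      - azureml-defaults
"""


def write_conda_spec(folder: str) -> Path:
    """Write the specification to <folder>/conda.yml and return its path."""
    path = Path(folder) / "conda.yml"
    path.write_text(CONDA_SPEC)
    return path


def environment_from_spec(name: str, spec_path: Path):
    """Build an Azure ML environment from a Conda .yml file (requires the SDK)."""
    from azureml.core import Environment  # lazy import: SDK needed only here
    return Environment.from_conda_specification(name=name, file_path=str(spec_path))


with tempfile.TemporaryDirectory() as tmp:
    spec_file = write_conda_spec(tmp)
    spec_written = spec_file.exists()
    # env = environment_from_spec("experiment_env", spec_file)  # needs azureml-core
```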

You have registered an environment in your workspace, and retrieved it using the following code:

from azureml.core import Environment

from azureml.train.estimator import Estimator

env = Environment.get(workspace=ws, name='my_environment')

estimator = Estimator(entry_script='training_script.py', ...)

You want to use the environment as the Python context for an experiment script that is run using an estimator.

Which property of the estimator should you set to assign the environment?

compute_target = env

environment_definition = env

source_directory = env

Answer is environment_definition = env

To specify an environment for an estimator, use the environment_definition property. The compute_target specifies the compute (for example cluster or local) on which the environment will be created and the script run, and the source_directory specifies the local folder containing the entry script.

You are building an intelligent solution using machine learning models.

The environment must support the following requirements:

- Data scientists must build notebooks in a cloud environment

- Data scientists must use automatic feature engineering and model building in machine learning pipelines.

- Notebooks must be deployed to retrain using Spark instances with dynamic worker allocation.

- Notebooks must be exportable to be version controlled locally.

You need to create the environment.

Which four actions should you perform in sequence?

Install the Azure Machine Learning SDK for Python on the cluster

When the cluster is ready, export the Zeppelin notebooks to a local environment

Create and execute the Zeppelin notebooks on the cluster

Install Microsoft Machine Learning for Apache Spark

Create an Azure HDInsight cluster to include the Apache Spark MLlib library

Create an Azure Databricks cluster

Step 1: Create an Azure HDInsight cluster to include the Apache Spark MLlib library

Step 2: Install Microsoft Machine Learning for Apache Spark

You install AzureML on your Azure HDInsight cluster. Microsoft Machine Learning for Apache Spark (MMLSpark) provides a number of deep learning and data science tools for Apache Spark, including seamless integration of Spark Machine Learning pipelines with Microsoft Cognitive Toolkit (CNTK) and OpenCV, enabling you to quickly create powerful, highly scalable predictive and analytical models for large image and text datasets.

Step 3: Create and execute the Zeppelin notebooks on the cluster

Step 4: When the cluster is ready, export Zeppelin notebooks to a local environment. Notebooks must be exportable to be version controlled locally.

References:

https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-zeppelin-notebook

https://azuremlbuild.blob.core.windows.net/pysparkapi/intro.html

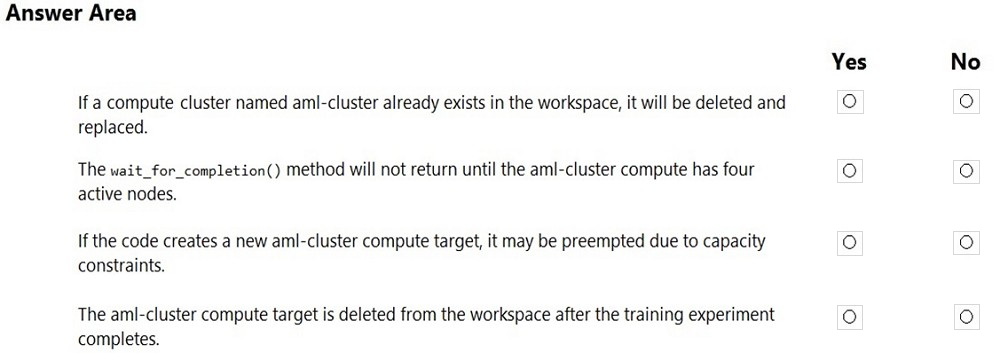

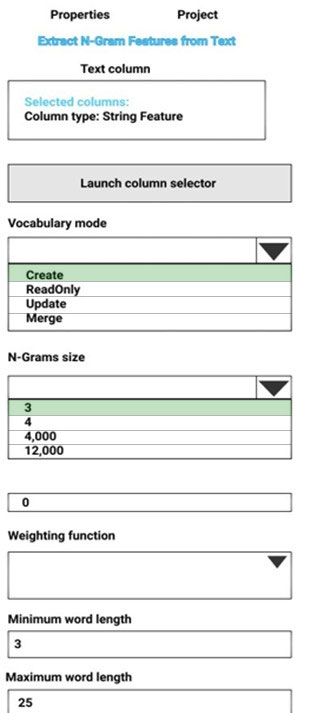

You are performing sentiment analysis using a CSV file that includes 12,000 customer reviews written in a short sentence format. You add the CSV file to Azure Machine Learning Studio and configure it as the starting point dataset of an experiment. You add the Extract N-Gram Features from Text module to the experiment to extract key phrases from the customer review column in the dataset.

You must create a new n-gram dictionary from the customer review text and set the maximum n-gram size to trigrams.

What should you select? To answer, select the appropriate options in the answer area.

Please check the answer section

Vocabulary mode: Create

For Vocabulary mode, select Create to indicate that you are creating a new list of n-gram features.

N-Grams size: 3

For N-Grams size, type a number that indicates the maximum size of the n-grams to extract and store. For example, if you type 3, unigrams, bigrams, and trigrams will be created.
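To make the effect of a maximum size of 3 concrete, here is a small plain-Python sketch that counts every unigram, bigram, and trigram in one review. It only illustrates the idea: the function name `extract_ngrams`, the whitespace tokenization, and the sample sentence are assumptions, and the Studio module performs additional processing that is not modeled here.

```python
from collections import Counter


def extract_ngrams(text: str, max_n: int = 3) -> Counter:
    """Count every n-gram of size 1..max_n in a whitespace-tokenized sentence."""
    tokens = text.lower().split()
    counts = Counter()
    for n in range(1, max_n + 1):
        # Slide a window of n tokens across the sentence.
        for start in range(len(tokens) - n + 1):
            counts[" ".join(tokens[start:start + n])] += 1
    return counts


vocabulary = extract_ngrams("the hotel staff was friendly")
print(sorted(vocabulary))
```

For this five-word sentence the vocabulary holds 5 unigrams, 4 bigrams, and 3 trigrams, and no 4-grams.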

Weighting function: Leave blank

The Weighting function option is required only if you merge or update vocabularies. It specifies how terms in the two vocabularies and their scores should be weighted against each other.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/extract-n-gram-features-from-text

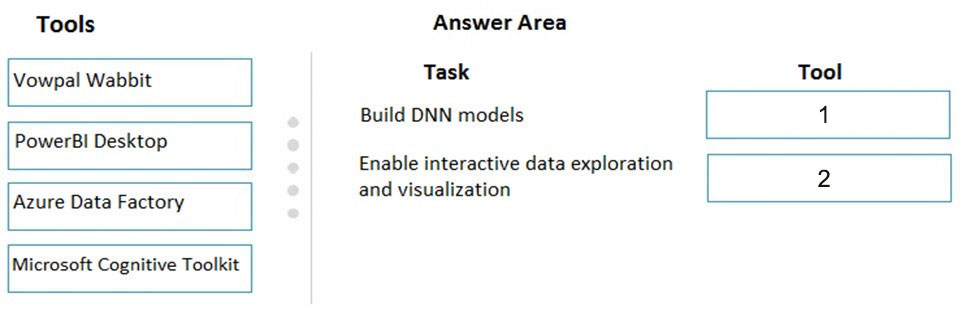

You configure a Deep Learning Virtual Machine for Windows.

You need to recommend tools and frameworks to perform the following:

- Build deep neural network (DNN) models

- Perform interactive data exploration and visualization

Which two tools and frameworks should you recommend?

Vowpal Wabbit - PowerBI desktop

PowerBI desktop - Azure Data Factory

Azure Data Factory - Microsoft Cognitive Toolkit

PowerBI desktop - Microsoft Cognitive Toolkit

Box 1: Vowpal Wabbit

Use the Train Vowpal Wabbit Version 8 module in Azure Machine Learning Studio (classic) to create a machine learning model by using Vowpal Wabbit.

Box 2: PowerBI Desktop

Power BI Desktop is a powerful visual data exploration and interactive reporting tool.

BI is a name given to a modern approach to business decision making in which users are empowered to find, explore, and share insights from data across the enterprise.

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/train-vowpal-wabbit-version-8-model

https://docs.microsoft.com/en-us/azure/architecture/data-guide/scenarios/interactive-data-exploration

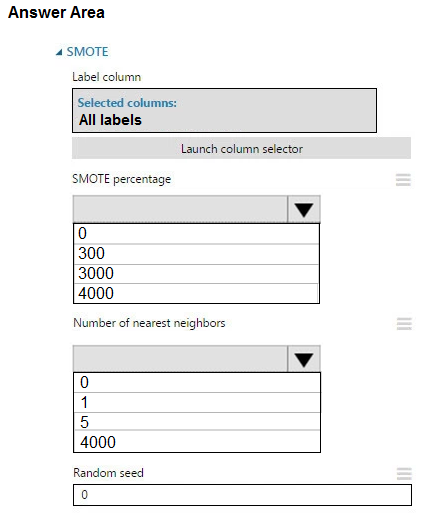

You create an experiment in Azure Machine Learning Studio. You add a training dataset that contains 10,000 rows. The first 9,000 rows represent class 0 (90 percent).

The remaining 1,000 rows represent class 1 (10 percent).

The training set is imbalanced between the two classes. You must increase the number of training examples for class 1 to 4,000 by using 5 data rows. You add the Synthetic Minority Oversampling Technique (SMOTE) module to the experiment.

You need to configure the module.

Which values should you use?

Please check the answer section

Box 1: 300

Typing 300 (%) makes the module add synthetic minority cases equal to 300 percent of the original 1,000 cases, that is 3,000 new rows, so class 1 grows from 1,000 to 4,000 rows.
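The arithmetic behind the SMOTE percentage can be checked with a short sketch. Both function names are hypothetical. The only rule assumed is that a percentage of p adds p percent of the original minority rows as synthetic rows.

```python
def minority_count_after_smote(original_minority: int, smote_percentage: int) -> int:
    """Rows in the minority class after adding smote_percentage % synthetic rows."""
    synthetic = original_minority * smote_percentage // 100
    return original_minority + synthetic


def required_smote_percentage(original_minority: int, target_minority: int) -> int:
    """Percentage needed to grow the minority class to target_minority rows."""
    return (target_minority - original_minority) * 100 // original_minority


percentage = required_smote_percentage(1000, 4000)
total_minority = minority_count_after_smote(1000, percentage)
print(percentage, total_minority)
```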

Box 2: 5

We should use 5 data rows.

Use the Number of nearest neighbors option to determine the size of the feature space that the SMOTE algorithm uses when building new cases. A nearest neighbor is a row of data (a case) that is very similar to some target case. The distance between any two cases is measured by combining the weighted vectors of all features.

By increasing the number of nearest neighbors, you get features from more cases.

By keeping the number of nearest neighbors low, you use features that are more like those in the original sample.
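The role of the nearest-neighbor setting can be sketched in a few lines. This is a simplified illustration of the SMOTE idea, not the Studio module's implementation: it assumes plain Euclidean distance, two numeric features, and made-up sample points, and the function names are hypothetical.

```python
import math
import random


def nearest_neighbors(target, cases, k):
    """Return the k cases closest to target (Euclidean distance, target excluded)."""
    others = [case for case in cases if case is not target]
    return sorted(others, key=lambda case: math.dist(target, case))[:k]


def synthesize(target, cases, k, rng):
    """Create one synthetic case on the line between target and a random neighbor."""
    neighbor = rng.choice(nearest_neighbors(target, cases, k))
    gap = rng.random()  # position along the segment, in [0, 1)
    return tuple(t + gap * (n - t) for t, n in zip(target, neighbor))


rng = random.Random(0)
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3), (0.9, 0.7), (5.0, 5.0)]
new_case = synthesize(minority[0], minority, k=5, rng=rng)
close_four = nearest_neighbors(minority[0], minority, k=4)
print(new_case)
```

With k=4 the distant point (5.0, 5.0) is never used, so new cases stay close to the original sample. With k=5 it becomes eligible, which draws on features from more cases.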

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/smote

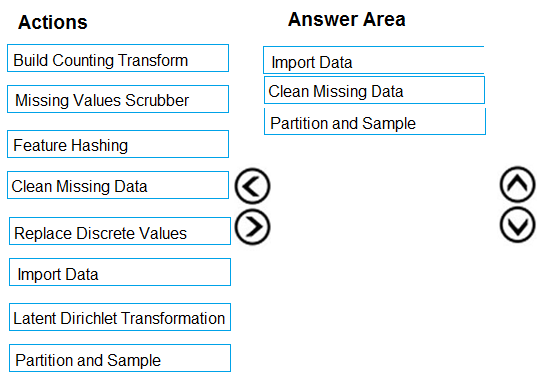

You are creating an experiment by using Azure Machine Learning Studio.

You must divide the data into four subsets for evaluation. There is a high degree of missing values in the data. You must prepare the data for analysis.

You need to select appropriate methods for producing the experiment.

Which three modules should you run in sequence?

Build Counting Transform

Missing Values Scrubber

Feature Hashing

Clean Missing Data

Replace Discrete Values

Import Data

Latent Dirichlet Transformation

Partition and Sample

Use the Clean Missing Data module in Azure Machine Learning Studio to remove, replace, or infer missing values.
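The two preparation tasks named in the question, handling missing values and dividing the data into four subsets, can be sketched in plain Python. This is not how the Studio modules are implemented. Mean substitution, the shuffled round-robin split, the function names, and the sample rows are all assumptions chosen for illustration.

```python
import random
from statistics import mean


def clean_missing(rows):
    """Replace None in each column with the mean of that column's known values."""
    columns = list(zip(*rows))
    fills = [mean([v for v in column if v is not None]) for column in columns]
    return [
        [fills[i] if value is None else value for i, value in enumerate(row)]
        for row in rows
    ]


def partition(rows, folds=4, seed=0):
    """Shuffle the rows and deal them into `folds` subsets of near-equal size."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i::folds] for i in range(folds)]


data = [[1.0, None], [2.0, 4.0], [None, 6.0], [3.0, 8.0],
        [4.0, None], [5.0, 10.0], [6.0, 12.0], [None, 2.0]]
cleaned = clean_missing(data)
subsets = partition(cleaned, folds=4)
print([len(subset) for subset in subsets])
```

Cleaning comes before partitioning here so that every subset is free of missing values.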

Incorrect Answers:

- Latent Dirichlet Transformation: Use the Latent Dirichlet Allocation module in Azure Machine Learning Studio to group otherwise unclassified text into a number of categories. Latent Dirichlet Allocation (LDA) is often used in natural language processing (NLP) to find texts that are similar. Another common term is topic modeling.

- Build Counting Transform: Use the Build Counting Transform module in Azure Machine Learning Studio to analyze training data. From this data, the module builds a count table as well as a set of count-based features that can be used in a predictive model.

- Missing Values Scrubber: The Missing Values Scrubber module is deprecated.

- Feature hashing: Feature hashing is used for linguistics, and works by converting unique tokens into integers.

- Replace discrete values: the Replace Discrete Values module in Azure Machine Learning Studio is used to generate a probability score that can be used to represent a discrete value. This score can be useful for understanding the information value of the discrete values.
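The feature hashing entry in the list above describes converting tokens into integers. A minimal sketch of that idea follows. MD5 from the standard library stands in for whatever hash function the Studio module uses, and the function names, bucket count, and sample text are assumptions.

```python
import hashlib


def hash_token(token: str, num_buckets: int = 1024) -> int:
    """Map a token to a stable integer bucket in the range [0, num_buckets)."""
    digest = hashlib.md5(token.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_buckets


def hash_features(text: str, num_buckets: int = 1024) -> dict:
    """Return {bucket index: count} for the whitespace tokens in text."""
    features = {}
    for token in text.lower().split():
        index = hash_token(token, num_buckets)
        features[index] = features.get(index, 0) + 1
    return features


vector = hash_features("great room great view")
print(vector)
```

Different tokens can collide in the same bucket. That is the usual trade-off for getting a fixed-size feature vector without storing a vocabulary.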

References:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/clean-missing-data