DP-100: Designing and Implementing a Data Science Solution on Azure

You are planning to make use of Azure Machine Learning designer to train models.

You need to choose a suitable compute type.

Recommendation: You choose Inference cluster.

Will the requirements be satisfied?

Yes

No

Answer is No

The correct choice is a compute cluster. An inference cluster is used to deploy models, not to train them.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-attach-compute-studio

You are planning to make use of Azure Machine Learning designer to train models.

You need to choose a suitable compute type.

Recommendation: You choose Compute cluster.

Will the requirements be satisfied?

Yes

No

Answer is Yes

Create a cloud-based compute instance to use for your development environment.

Create a cloud-based compute cluster to use for training your model.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-attach-compute-studio

You are using the Azure Machine Learning designer to construct an experiment.

After dividing a dataset into training and testing sets, you configure the algorithm to be Two-Class Boosted Decision Tree.

You are preparing to ascertain the Area Under the Curve (AUC).

Which of the following is a sequential combination of the modules required to achieve your goal?

Train, Score, Evaluate.

Score, Evaluate, Train.

Evaluate, Export Data, Train.

Train, Score, Export Data.

Answer is Train, Score, Evaluate.
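The order matters because each step consumes the output of the one before it: Evaluate needs scored rows, and Score needs a trained model. The following is a minimal pure-Python sketch of that flow on toy data. The function names, the data, and the simple class-mean model are all illustrative assumptions; the model is a stand-in for Two-Class Boosted Decision Tree, not an implementation of it or of the designer modules.

```python
def train(rows):
    """Train: learn the mean feature value of each class from labelled rows."""
    pos = [x for x, y in rows if y == 1]
    neg = [x for x, y in rows if y == 0]
    return {"pos_mean": sum(pos) / len(pos), "neg_mean": sum(neg) / len(neg)}


def score(model, rows):
    """Score: attach a score to each held-out row (higher means more positive)."""
    return [
        (abs(x - model["neg_mean"]) - abs(x - model["pos_mean"]), y)
        for x, y in rows
    ]


def evaluate_auc(scored):
    """Evaluate: AUC as the share of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair.
    """
    pos = [s for s, y in scored if y == 1]
    neg = [s for s, y in scored if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy (feature, label) rows, already divided into training and testing sets.
train_rows = [(0.1, 0), (0.3, 0), (0.4, 0), (0.7, 1), (0.8, 1), (0.9, 1)]
test_rows = [(0.2, 0), (0.6, 0), (0.5, 1), (0.95, 1)]

model = train(train_rows)          # 1. Train on the training set
scored = score(model, test_rows)   # 2. Score the testing set
auc = evaluate_auc(scored)         # 3. Evaluate the scored rows
print(f"AUC = {auc:.2f}")
```

Any other ordering fails for a structural reason: `evaluate_auc` has nothing to rank until `score` has run, and `score` has no model until `train` has run.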

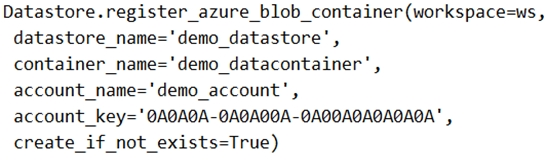

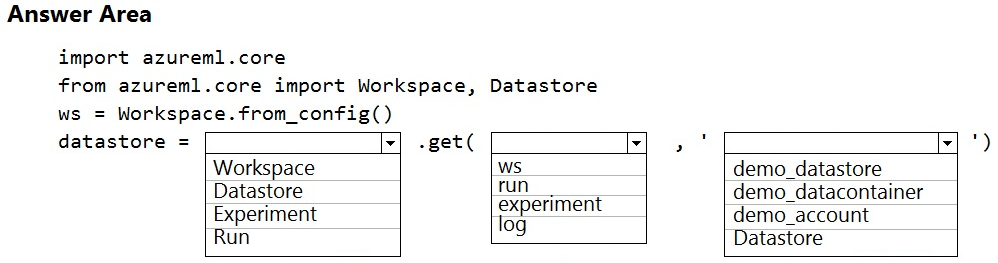

A coworker registers a datastore in a Machine Learning services workspace by using the following code:

You need to write code to access the datastore from a notebook.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

Check the answer section

Box 1: Datastore

To get a specific datastore registered in the current workspace, use the get() static method on the Datastore class:

    # Get a named datastore from the current workspace
    datastore = Datastore.get(ws, datastore_name='your datastore name')

Box 2: ws

Box 3: demo_datastore

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-access-data

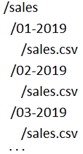

A set of CSV files contains sales records. All the CSV files have the same data schema.

Each CSV file contains the sales record for a particular month and has the filename sales.csv. Each file is stored in a folder that indicates the month and year when the data was recorded. The folders are in an Azure blob container for which a datastore has been defined in an Azure Machine Learning workspace. The folders are organized in a parent folder named sales to create the following hierarchical structure:

At the end of each month, a new folder with that month's sales file is added to the sales folder.

You plan to use the sales data to train a machine learning model based on the following requirements:

- You must define a dataset that loads all of the sales data to date into a structure that can be easily converted to a dataframe.

- You must be able to create experiments that use only data that was created before a specific previous month, ignoring any data that was added after that month.

- You must register the minimum number of datasets possible.

You need to register the sales data as a dataset in Azure Machine Learning service workspace.

What should you do?

Create a tabular dataset that references the datastore and explicitly specifies each 'sales/mm-yyyy/sales.csv' file every month. Register the dataset with the name sales_dataset each month, replacing the existing dataset and specifying a tag named month indicating the month and year it was registered. Use this dataset for all experiments.

Create a tabular dataset that references the datastore and specifies the path 'sales/*/sales.csv', register the dataset with the name sales_dataset and a tag named month indicating the month and year it was registered, and use this dataset for all experiments.

Create a new tabular dataset that references the datastore and explicitly specifies each 'sales/mm-yyyy/sales.csv' file every month. Register the dataset with the name sales_dataset_MM-YYYY each month with appropriate MM and YYYY values for the month and year. Use the appropriate month-specific dataset for experiments.

Create a tabular dataset that references the datastore and explicitly specifies each 'sales/mm-yyyy/sales.csv' file. Register the dataset with the name sales_dataset each month as a new version and with a tag named month indicating the month and year it was registered. Use this dataset for all experiments, identifying the version to be used based on the month tag as necessary.

Answer is Create a tabular dataset that references the datastore and explicitly specifies each 'sales/mm-yyyy/sales.csv' file. Register the dataset with the name sales_dataset each month as a new version and with a tag named month indicating the month and year it was registered. Use this dataset for all experiments, identifying the version to be used based on the month tag as necessary.

A: *replaces* the existing dataset -> can't directly filter data before the specific month

B: captures all the sales data from different folders in *one dataset* -> can't directly filter data before the specific month

C: requires registering multiple datasets

D: satisfies all the requirements
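Option D can be sketched roughly as follows, assuming the azureml-core (SDK v1) package. `Dataset.Tabular.from_delimited_files` and `register(..., create_new_version=True)` are SDK calls; the helper names `months_through`, `monthly_paths`, and `register_sales_version`, and the example months, are hypothetical and only serve the illustration. The SDK import is deferred into the registering function so that the path helpers run without the SDK installed.

```python
def months_through(start, end):
    """List (month, year) pairs from start to end, inclusive."""
    (m, y), (end_m, end_y) = start, end
    out = []
    while (y, m) <= (end_y, end_m):
        out.append((m, y))
        m, y = (1, y + 1) if m == 12 else (m + 1, y)
    return out


def monthly_paths(months):
    """Build one explicit 'sales/mm-yyyy/sales.csv' path per (month, year) pair."""
    return [f"sales/{m:02d}-{y:04d}/sales.csv" for m, y in months]


def register_sales_version(ws, datastore, months):
    """Register a new version of sales_dataset covering exactly the given months.

    Assumes azureml-core (SDK v1); ws is a Workspace and datastore a Datastore.
    """
    from azureml.core import Dataset  # deferred: only needed when registering

    paths = [(datastore, p) for p in monthly_paths(months)]
    dataset = Dataset.Tabular.from_delimited_files(path=paths)
    last_m, last_y = months[-1]
    return dataset.register(
        workspace=ws,
        name="sales_dataset",
        tags={"month": f"{last_m:02d}-{last_y:04d}"},  # month this version ends at
        create_new_version=True,  # same name, new version each month
    )


# Illustrative months only; the real folder list comes from the blob container.
paths = monthly_paths(months_through((11, 2019), (2, 2020)))
print(paths)
```

An experiment that must ignore later data can then request the matching version, for example with `Dataset.get_by_name(ws, "sales_dataset", version=...)`, using the month tag to find the right version number.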

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-version-track-datasets#versioning-best-practice

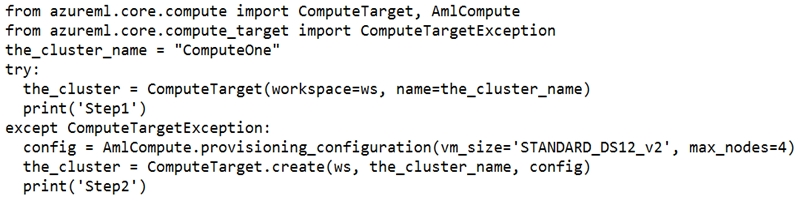

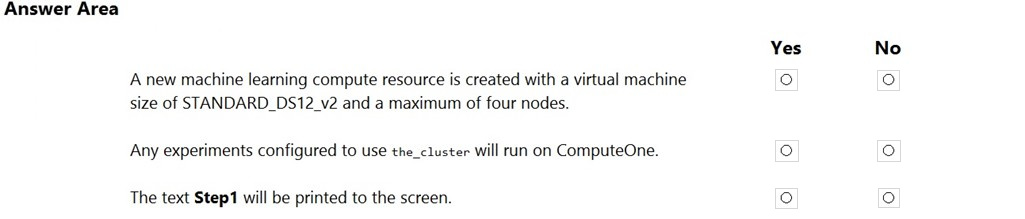

You create an Azure Machine Learning compute target named ComputeOne by using the STANDARD_D1 virtual machine image.

ComputeOne is currently idle and has zero active nodes.

You define a Python variable named ws that references the Azure Machine Learning workspace. You run the following Python code:

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Check the answer section

Answer is No - Yes - Yes

The code creates a compute target only if one named ComputeOne does not already exist. Because ComputeOne already exists in this case, the except block is not executed.
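The control flow behind this answer can be illustrated without the SDK. The sketch below uses a plain dictionary as a stand-in for the workspace, and `ComputeNotFound`, `get_compute`, and `get_or_create` are hypothetical names, not Azure Machine Learning APIs. The requested VM size is likewise an assumption, since the original code is not reproduced here.

```python
class ComputeNotFound(Exception):
    """Stand-in for the error raised when a compute target does not exist."""


def get_compute(registry, name):
    """Look up an existing compute target, or raise if there is none."""
    if name not in registry:
        raise ComputeNotFound(name)
    return registry[name]


def get_or_create(registry, name, vm_size):
    """Return (target, created). Creation happens only in the except branch."""
    try:
        return get_compute(registry, name), False
    except ComputeNotFound:
        registry[name] = {"vm_size": vm_size, "nodes": 0}
        return registry[name], True


# ComputeOne already exists, idle, with zero active nodes.
workspace = {"ComputeOne": {"vm_size": "STANDARD_D1", "nodes": 0}}

target, created = get_or_create(workspace, "ComputeOne", "STANDARD_D2_V2")
print(created, target["vm_size"])
```

Because the lookup succeeds, the except branch never runs, and the existing target keeps its original configuration regardless of the size requested.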

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/tutorial-1st-experiment-sdk-setup-local

You have been tasked with moving data into Azure Blob Storage for the purpose of supporting Azure Machine Learning.

Which of the following can be used to complete your task?

AzCopy

Bulk Copy Program (BCP)

SSIS

Bulk Insert SQL Query

Azure Storage Explorer

Answers are:

AzCopy, SSIS, Azure Storage Explorer

You can move data to and from Azure Blob storage using different technologies:

- Azure Storage Explorer

- AzCopy

- Python

- SSIS

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/move-azure-blob

Complete the sentence by selecting the correct option in the answer area.

CSV

DOCX

ARFF

TXT

Answer is ARFF

Weka, a popular machine learning and data mining software, supports the ARFF (Attribute-Relation File Format) as its standard data format. The ARFF format is a plain text file format that allows you to define the attributes and data instances in a structured manner.

Use the Convert to ARFF module in Azure Machine Learning Studio to convert datasets and results in Azure Machine Learning to the attribute-relation file format used by the Weka toolset.

The ARFF data specification for Weka supports multiple machine learning tasks, including data preprocessing, classification, and feature selection. In this format, data is organized by entities and their attributes, and is contained in a single text file.
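To make the format concrete, here is a minimal sketch that writes a small table as ARFF text: a `@RELATION` line, one `@ATTRIBUTE` line per column, then a `@DATA` section with comma-separated rows. The function `to_arff` and the sample data are hypothetical; this is not the Convert to ARFF module, and it does not handle quoting, missing values, or date attributes.

```python
def to_arff(relation, attributes, rows):
    """Render rows as ARFF text.

    attributes is a list of (name, kind) pairs, where kind is a type keyword
    such as 'NUMERIC' or a list of allowed values for a nominal attribute.
    Names and values are assumed to contain no spaces or commas.
    """
    lines = [f"@RELATION {relation}", ""]
    for name, kind in attributes:
        if isinstance(kind, (list, tuple)):
            kind = "{" + ",".join(kind) + "}"  # nominal: enumerate the values
        lines.append(f"@ATTRIBUTE {name} {kind}")
    lines += ["", "@DATA"]
    for row in rows:
        lines.append(",".join(str(value) for value in row))
    return "\n".join(lines) + "\n"


arff_text = to_arff(
    "sales",
    [("amount", "NUMERIC"), ("region", ["north", "south"])],
    [(120.5, "north"), (80, "south")],
)
print(arff_text)
```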

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/convert-to-arff

You have been tasked with designing a deep learning model to recognize language. The solution must support the most recent version of Python.

You have to include a suitable deep learning framework in the Data Science Virtual Machine (DSVM).

Which of the following actions should you take?

You should consider including Rattle.

You should consider including TensorFlow.

You should consider including Theano.

You should consider including Chainer.

Answer is You should consider including TensorFlow.

TensorFlow is an open-source software library for data flow and differentiable programming across a range of tasks. It was developed by the Google Brain team and is used for building and training machine learning models, particularly neural networks. TensorFlow provides a flexible and efficient platform for implementing machine learning algorithms, and supports a variety of programming languages including Python, C++, and Java. The library provides a comprehensive set of tools and functionality, including visualization and debugging tools, and it has a strong community of developers and users contributing to its ongoing development. TensorFlow is widely used in both academia and industry for various applications such as image classification, natural language processing, and reinforcement learning.

Reference:

https://www.infoworld.com/article/3278008/what-is-tensorflow-the-machine-learning-library-explained.html

You have been tasked with evaluating your model on a partial data sample via k-fold cross-validation.

You have already configured a k parameter as the number of splits. You now have to set the k parameter for the cross-validation to the value that is typically used.

Recommendation: You configure the use of the value k=10.

Will the requirements be satisfied?

Yes

No

Answer is Yes

k=10 is a common choice for the number of folds in k-fold cross-validation, and it can satisfy the requirement to evaluate the model on a partial data sample. However, the appropriate value for k may depend on the size of the dataset and the desired level of accuracy. In some cases, a value other than 10 might be more appropriate.
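As a rough illustration of what k controls, the sketch below splits sample indices into k contiguous folds, with each fold serving once as the held-out set. The function `k_fold_indices` is a hypothetical helper, and the sample count of 25 is arbitrary. It does not shuffle or stratify, which a real evaluation would usually do.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train_idx, test_idx) for each of k folds.

    Folds are contiguous and their sizes differ by at most one sample.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(25, k=10))
for train_idx, test_idx in folds:
    print(len(train_idx), len(test_idx))
```

With k=10, each model is trained on roughly 90% of the data and evaluated on the remaining 10%. A smaller dataset may call for a different k, since each held-out fold becomes very small.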