DP-600: Implementing Analytics Solutions Using Microsoft Fabric

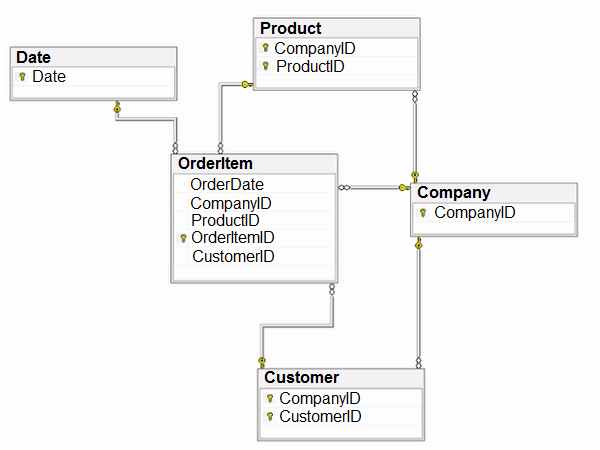

You have the source data model shown in the following exhibit.

The primary keys of the tables are indicated by a key symbol beside the columns involved in each key.

You need to create a dimensional data model that will enable the analysis of order items by date, product, and customer.

What should you include in the solution?

Check the answer section

Answers are:

Both the CompanyID and the ProductID columns

Denormalized into the Customer and Product entities

The Company entity should be denormalized into both the Product and the Customer tables. CompanyID is also part of the Customer table, so it is not enough to denormalize Company into the Product table alone. The relationship between OrderItem and Product must reference the whole primary key, not just part of it. If the relationship used only ProductID, and the same ProductID appeared under two different CompanyID values, both rows would be treated as the same product.
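The effect of referencing only part of a composite key can be sketched in plain Python. The rows below are hypothetical, and the sketch assumes that OrderItem carries both CompanyID and ProductID; the exhibit is not reproduced here, so treat the column layout as an assumption.

```python
# Hypothetical rows: the same ProductID exists under two different companies.
products = [
    {"CompanyID": 1, "ProductID": 100, "ProductName": "Widget"},
    {"CompanyID": 2, "ProductID": 100, "ProductName": "Gasket"},
]
# Assumption: OrderItem carries both key columns.
order_items = [
    {"OrderID": 5001, "CompanyID": 1, "ProductID": 100, "Quantity": 3},
]


def join_on(keys, facts, dims):
    """Return (fact, dim) pairs whose values match on every column in keys."""
    return [
        (f, d)
        for f in facts
        for d in dims
        if all(f[k] == d[k] for k in keys)
    ]


partial = join_on(["ProductID"], order_items, products)
full = join_on(["CompanyID", "ProductID"], order_items, products)

print(len(partial))  # 2: the order item matches both products
print(len(full))     # 1: only the product of company 1 matches
```

Joining on ProductID alone matches the order item to two different products, which is the ambiguity the explanation describes.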

You have a Fabric tenant that contains a semantic model.

You need to prevent report creators from populating visuals by using implicit measures.

What are two tools that you can use to achieve the goal?

A. Microsoft Power BI Desktop

B. Tabular Editor

C. Microsoft SQL Server Management Studio (SSMS)

D. DAX Studio

Answers are:

A. Microsoft Power BI Desktop

B. Tabular Editor

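Both Power BI Desktop (in Model explorer) and Tabular Editor expose the model-level Discourage implicit measures property (DiscourageImplicitMeasures). Turning it on prevents report creators from populating visuals with implicit measures.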

Reference:

https://learn.microsoft.com/en-us/power-bi/transform-model/model-explorer#discourage-implicit-measures

https://docs.tabulareditor.com/api/TabularEditor.TOMWrapper.Model.html#TabularEditor_TOMWrapper_Model_DiscourageImplicitMeasures

You have a Fabric tenant that contains a lakehouse.

You plan to query sales data files by using the SQL endpoint. The files will be in an Amazon Simple Storage Service (Amazon S3) storage bucket.

You need to recommend which file format to use and where to create a shortcut.

Which two actions should you include in the recommendation?

A. Create a shortcut in the Files section.

B. Use the Parquet format.

C. Use the CSV format.

D. Create a shortcut in the Tables section.

E. Use the delta format.

Answers are:

D. Create a shortcut in the Tables section.

E. Use the delta format.

To query the data through the SQL endpoint, you need to create the shortcut in the Tables section. The data also needs to be in the delta format to be recognised as a table. If you add a Parquet file to the Tables section, it will not be recognised as a table object and you won't be able to query it.

In the Tables folder, shortcuts can be created only at the top level; they aren't supported in subdirectories of the Tables folder. If the target of the shortcut contains data in the Delta/Parquet format, the lakehouse automatically synchronizes the metadata and recognizes the folder as a table.
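The difference between a plain Parquet folder and a Delta folder can be illustrated locally. A Delta table is a folder of Parquet data files plus a `_delta_log` directory of JSON commit files. The helper below is a hypothetical local heuristic for that layout, not the mechanism Fabric itself uses, and the folder names are made up.

```python
import tempfile
from pathlib import Path


def looks_like_delta_table(folder: Path) -> bool:
    """True if the folder has a _delta_log directory with at least one JSON commit file."""
    log_dir = folder / "_delta_log"
    return log_dir.is_dir() and any(log_dir.glob("*.json"))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)

    # Plain Parquet folder: data files only, no transaction log.
    parquet_only = root / "sales_parquet"
    parquet_only.mkdir()
    (parquet_only / "part-0000.parquet").touch()

    # Delta folder: Parquet data files plus a _delta_log directory.
    delta = root / "sales_delta"
    (delta / "_delta_log").mkdir(parents=True)
    (delta / "part-0000.parquet").touch()
    (delta / "_delta_log" / "00000000000000000000.json").touch()

    parquet_result = looks_like_delta_table(parquet_only)
    delta_result = looks_like_delta_table(delta)

print(parquet_result, delta_result)  # False True
```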

Reference:

https://learn.microsoft.com/en-us/fabric/onelake/onelake-shortcuts#lakehouse

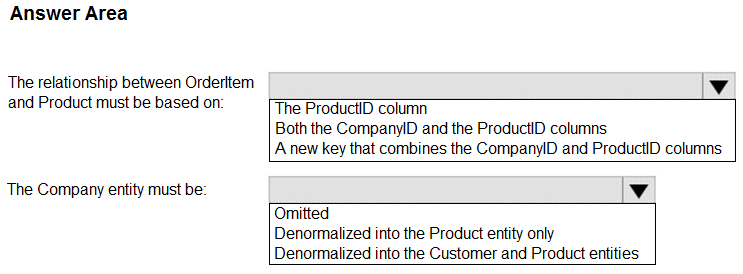

You have a Fabric workspace named Workspace1 that contains a dataflow named Dataflow1. Dataflow1 contains a query that returns the data shown in the following exhibit.

You need to transform the data columns into attribute-value pairs, where columns become rows.

You select the VendorID column.

Which transformation should you select from the context menu of the VendorID column?

Group by

Unpivot columns

Unpivot other columns

Split column

Remove other columns

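Answer is Unpivot other columns

With VendorID selected, Unpivot other columns keeps VendorID unchanged and turns every other column into attribute-value rows. Unpivot columns would instead unpivot the selected VendorID column itself.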
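As a rough illustration of what Unpivot other columns does, here is a plain-Python sketch. Only VendorID comes from the question; the other column names and all values are hypothetical, since the exhibit is not reproduced here, and the function is an illustration rather than Power Query's implementation.

```python
def unpivot_other_columns(rows, keep):
    """Keep the `keep` columns; turn every other column into Attribute/Value rows."""
    result = []
    for row in rows:
        fixed = {col: row[col] for col in keep}
        for col, value in row.items():
            if col not in keep:
                result.append({**fixed, "Attribute": col, "Value": value})
    return result


# Hypothetical data: only the VendorID column name comes from the question.
rows = [
    {"VendorID": 1, "Jan": 10, "Feb": 20},
    {"VendorID": 2, "Jan": 5, "Feb": 7},
]

unpivoted = unpivot_other_columns(rows, keep=["VendorID"])
for r in unpivoted:
    print(r)
```

Each input row produces one output row per non-selected column, so two rows with two value columns become four attribute-value rows.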

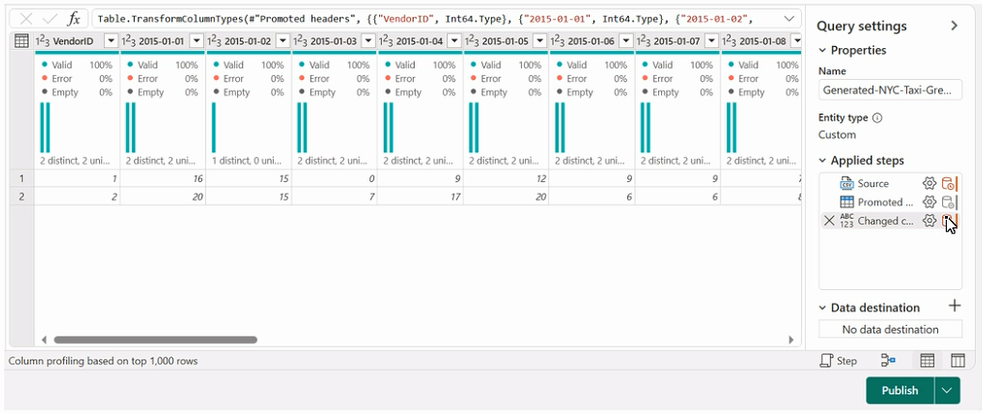

You have a Fabric workspace named Workspace1 and an Azure Data Lake Storage Gen2 account named storage1. Workspace1 contains a lakehouse named Lakehouse1.

You need to create a shortcut to storage1 in Lakehouse1.

Which connection and endpoint should you specify?

Check the answer section

Answer is https - dfs

The tooltip for the URL field in the connection settings says the following about the endpoint, and its example uses https:

"The URL of the ADLSG2 endpoint to connect to. To avoid invalid credential errors, be aware to use the '.dfs' rather than '.blob' endpoint, ensure you are assigned a blob-specific role, and have the networking access set appropriately."
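A small sketch of the URL shape the tooltip asks for, using the storage1 account from the question. The helper names are hypothetical, and the host suffix assumes the Azure public cloud.

```python
from urllib.parse import urlparse


def adls_dfs_url(account_name: str) -> str:
    """Build the https DFS endpoint URL for a storage account (public cloud suffix assumed)."""
    return f"https://{account_name}.dfs.core.windows.net"


def is_dfs_endpoint(url: str) -> bool:
    """True when the URL uses https and a .dfs.core.windows.net host."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    return parsed.scheme == "https" and host.endswith(".dfs.core.windows.net")


url = adls_dfs_url("storage1")
print(url)                                                        # https://storage1.dfs.core.windows.net
print(is_dfs_endpoint(url))                                       # True
print(is_dfs_endpoint("https://storage1.blob.core.windows.net"))  # False
```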

Reference:

https://learn.microsoft.com/en-us/fabric/onelake/create-adls-shortcut

You have a custom Direct Lake semantic model named Model1 that has one billion rows of data.

You use Tabular Editor to connect to Model1 by using the XMLA endpoint.

You need to ensure that when users interact with reports based on Model1, their queries always use Direct Lake mode.

What should you do?

From Model, configure the Default Mode option.

From Partitions, configure the Mode option.

From Model, configure the Storage Location option.

From Model, configure the Direct Lake Behavior option.

Answer is From Model, configure the Direct Lake Behavior option.

Direct Lake models include the DirectLakeBehavior property which can be configured by using Tabular Object Model (TOM) or Tabular Model Scripting Language (TMSL).

Select the semantic model.

In the Properties pane, choose the Direct Lake behavior for your custom Direct Lake semantic model:

- Automatic: This is the default behavior. It allows Direct Lake with fallback to DirectQuery mode if data can’t be efficiently loaded into memory.

- Direct Lake only: This option ensures no fallback to DirectQuery mode.
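The two behaviors described above can be modeled as a small decision function. This is a toy model, not the engine's logic: the enum, the function name, and the `can_use_direct_lake` flag are hypothetical, and raising an error when fallback is disabled is an assumption about what the user sees.

```python
from enum import Enum


class DirectLakeBehavior(Enum):
    AUTOMATIC = "Automatic"
    DIRECT_LAKE_ONLY = "DirectLakeOnly"


def resolve_query_mode(behavior: DirectLakeBehavior, can_use_direct_lake: bool) -> str:
    """Return the storage mode a query would run in under the given behavior."""
    if can_use_direct_lake:
        return "DirectLake"
    if behavior is DirectLakeBehavior.AUTOMATIC:
        return "DirectQuery"  # fallback is allowed
    # Assumption: with fallback disabled, the query fails instead of switching modes.
    raise RuntimeError("Direct Lake only: fallback to DirectQuery is disabled")


print(resolve_query_mode(DirectLakeBehavior.AUTOMATIC, False))        # DirectQuery
print(resolve_query_mode(DirectLakeBehavior.DIRECT_LAKE_ONLY, True))  # DirectLake
```

Under the Direct Lake only setting the function never returns DirectQuery, which is the guarantee the question asks for.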

Reference:

https://learn.microsoft.com/en-us/power-bi/enterprise/directlake-overview#fallback-behavior

https://powerbi.microsoft.com/en-au/blog/leveraging-pure-direct-lake-mode-for-maximum-query-performance/

You create a semantic model by using Microsoft Power BI Desktop. The model contains one security role named SalesRegionManager and the following tables:

• Sales

• SalesRegion

• SalesAddress

You need to modify the model to ensure that users assigned the SalesRegionManager role cannot see a column named Address in SalesAddress.

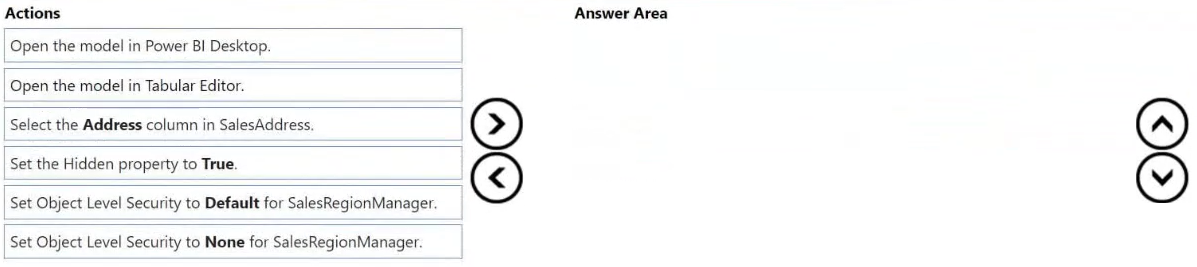

Which three actions should you perform in sequence?

Check the answer section

Answer is:

1. Open the model in Tabular Editor.

2. Select the Address column in SalesAddress.

3. Set Object Level Security to None for the SalesRegionManager role.

Setting OLS to None:

When you set OLS to None for a table or column:

- For viewers without the appropriate access levels, it’s as if the secured tables or columns don’t exist.

- The table or column will be hidden from that role.

- Users won’t be able to see or interact with the secured data.

• In Tabular Editor, navigate to Tables and select the specific column names.

• Under Object-Level Security, set the security level to "None" for the role that needs restrictions.

Reference:

https://www.insightsarena.com/post/mastering-object-level-security-in-power-bi-fixing-broken-visuals#:~:text=Configure%20Object-Level%20Security%3A%201%20In%20Tabular%20Editor%2C%20navigate,need%20to%20be%20restricted%20for%20a%20particular%20role.

You have a Microsoft Power BI semantic model that contains measures. The measures use multiple CALCULATE functions and a FILTER function.

You are evaluating the performance of the measures.

In which use case will replacing the FILTER function with the KEEPFILTERS function reduce execution time?

when the FILTER function uses a nested CALCULATE function

when the FILTER function references a measure

when the FILTER function references columns from multiple tables

when the FILTER function references a column from a single table that uses Import mode

Answer is when the FILTER function references a column from a single table that uses Import mode

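Replacing FILTER with KEEPFILTERS is most likely to reduce execution time when the filter references a column from a single table that uses Import mode. In that case the condition can be written as a Boolean filter expression wrapped in KEEPFILTERS, which is applied to the in-memory column directly instead of iterating a table row by row as FILTER does. Boolean filter expressions cannot reference a measure, reference columns from multiple tables, or use a nested CALCULATE function, so in the other three cases FILTER cannot simply be replaced.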

Reference:

https://learn.microsoft.com/en-us/dax/best-practices/dax-avoid-avoid-filter-as-filter-argument

You have a semantic model named Model1. Model1 contains five tables that all use Import mode. Model1 contains a dynamic row-level security (RLS) role named HR. The HR role filters employee data so that HR managers only see the data of the department to which they are assigned.

You publish Model1 to a Fabric tenant and configure RLS role membership. You share the model and related reports to users.

An HR manager reports that the data they see in a report is incomplete.

What should you do to validate the data seen by the HR Manager?

A. Select Test as role to view the data as the HR role.

B. Filter the data in the report to match the intended logic of the filter for the HR department.

C. Select Test as role to view the report as the HR manager.

D. Ask the HR manager to open the report in Microsoft Power BI Desktop.

Answer is Select Test as role to view the report as the HR manager.

Option C is the best approach as it directly validates what the specific HR manager sees under the dynamic RLS conditions, ensuring the completeness and accuracy of the data.

Option A is useful, but it doesn't specify viewing the report as the specific HR manager, which is crucial to identify user-specific issues.
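Why the specific user matters under dynamic RLS can be sketched as follows. The user names, departments, and rows are hypothetical, and the function only imitates a filter driven by the signed-in user's identity; it is not how the service evaluates roles.

```python
# Hypothetical assignment of HR managers to departments.
department_of = {
    "hr1@contoso.com": "Sales",
    "hr2@contoso.com": "Finance",
}
# Hypothetical employee rows.
employees = [
    {"Name": "Ana", "Department": "Sales"},
    {"Name": "Ben", "Department": "Finance"},
    {"Name": "Cy", "Department": "Finance"},
]


def rows_visible_to(user: str) -> list:
    """Apply a dynamic filter: keep only rows in the department assigned to the user."""
    department = department_of.get(user)
    return [e for e in employees if e["Department"] == department]


print([e["Name"] for e in rows_visible_to("hr1@contoso.com")])  # ['Ana']
print([e["Name"] for e in rows_visible_to("hr2@contoso.com")])  # ['Ben', 'Cy']
print(rows_visible_to("tester@contoso.com"))                    # []
```

The same role returns different rows for each identity, so testing the role under your own identity does not reproduce what the HR manager sees.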

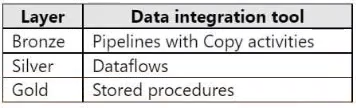

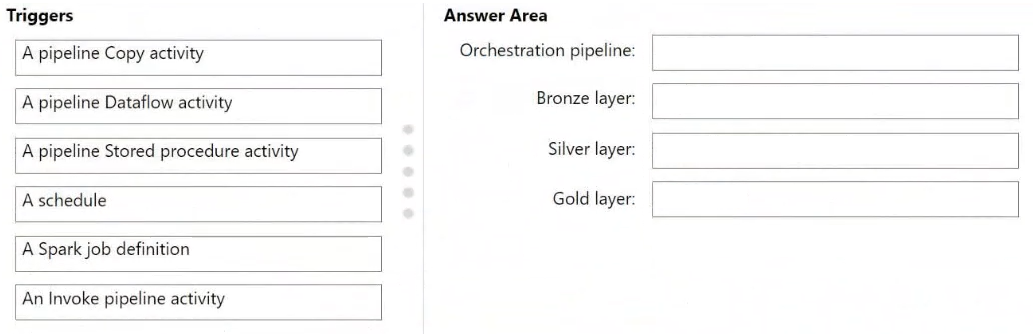

You are implementing a medallion architecture in a single Fabric workspace.

You have a lakehouse that contains the Bronze and Silver layers and a warehouse that contains the Gold layer.

You create the items required to populate the layers as shown in the following table.

You need to ensure that the layers are populated daily in sequential order such that Silver is populated only after Bronze is complete, and Gold is populated only after Silver is complete. The solution must minimize development effort and complexity.

What should you use to execute each set of items?

Check the answer area

Answer is:

Orchestration: A schedule

Bronze Layer: An Invoke pipeline activity (the pipeline already exists, so it only needs to be invoked)

Silver Layer: A pipeline Dataflow activity

Gold Layer: A pipeline Stored Procedure activity
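The sequencing requirement, where each layer runs only after the previous one succeeds, can be sketched in plain Python. The step functions are hypothetical stand-ins for the pipeline activities; this is not the Fabric pipeline API.

```python
def run_in_sequence(steps):
    """Run steps in order; stop at the first failure so later layers never run early."""
    completed = []
    for name, action in steps:
        if not action():
            return completed, name  # name of the step that failed
        completed.append(name)
    return completed, None


# Hypothetical stand-ins for the activities; each returns True on success.
steps = [
    ("Bronze", lambda: True),
    ("Silver", lambda: True),
    ("Gold", lambda: True),
]

completed, failed = run_in_sequence(steps)
print(completed, failed)  # ['Bronze', 'Silver', 'Gold'] None
```

In a pipeline, the same ordering comes from chaining the activities on success and triggering the whole pipeline from one daily schedule.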