DP-600: Implementing Analytics Solutions Using Microsoft Fabric

You have a Fabric tenant.

You plan to create a Fabric notebook that will use Spark DataFrames to generate Microsoft Power BI visuals.

You run the following code.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.


Answer is No-Yes-Yes

You have a Fabric tenant that contains a workspace named Workspace1. Workspace1 is assigned to a Fabric capacity.

You need to recommend a solution to provide users with the ability to create and publish custom Direct Lake semantic models by using external tools. The solution must follow the principle of least privilege.

Which three actions in the Fabric Admin portal should you include in the recommendation?

From the Tenant settings, set Allow XMLA Endpoints and Analyze in Excel with on-premises datasets to Enabled.

From the Tenant settings, set Allow Azure Active Directory guest users to access Microsoft Fabric to Enabled.

From the Tenant settings, select Users can edit data model in the Power BI service.

From the Capacity settings, set XMLA Endpoint to Read Write.

From the Tenant settings, set Users can create Fabric items to Enabled.

From the Tenant settings, enable Publish to Web.

Answers are:

A. From the Tenant settings, set Allow XMLA Endpoints and Analyze in Excel with on-premises datasets to Enabled.

D. From the Capacity settings, set XMLA Endpoint to Read Write.

E. From the Tenant settings, set Users can create Fabric items to Enabled.

D - The XMLA endpoint must be set to Read Write in the capacity settings. This allows external tools not only to read custom Direct Lake semantic models but also to publish and manage them.

A - XMLA endpoints must also be enabled in the tenant settings so that external tools can connect to the data models in the tenant's workspaces. Analyze in Excel is bundled into the same setting and is irrelevant here.

E - Lets users create items such as semantic models and publish them as and when required, without granting broader rights. This follows the principle of least privilege.

Reference:

https://learn.microsoft.com/en-us/power-bi/enterprise/service-premium-connect-tools#security

You plan to deploy Microsoft Power BI items by using Fabric deployment pipelines. You have a deployment pipeline that contains three stages named Development, Test, and Production. A workspace is assigned to each stage.

You need to provide Power BI developers with access to the pipeline. The solution must meet the following requirements:

- Ensure that the developers can deploy items to the workspaces for Development and Test.

- Prevent the developers from deploying items to the workspace for Production.

- Follow the principle of least privilege.

Which three levels of access should you assign to the developers?

Build permission to the production semantic models

Admin access to the deployment pipeline

Viewer access to the Development and Test workspaces

Viewer access to the Production workspace

Contributor access to the Development and Test workspaces

Contributor access to the Production workspace

Answers are:

B. Admin access to the deployment pipeline

D. Viewer access to the Production workspace

E. Contributor access to the Development and Test workspaces

If developers have Contributor access to a workspace, they don't also need Viewer access to it, so C is not required.

B - Pipeline Admin access lets the developers manage the deployment process across the stages (in this case Development and Test). Admin is the only pipeline role, so it is a basic requirement for deploying.

D - Viewer access to the Production workspace lets the developers see the Production environment without being able to change it. Deploying to a stage requires at least Contributor access to that stage's workspace, so Viewer access prevents deployments to Production.

E - Contributor access lets the developers create, edit, and update content in the Development and Test workspaces.
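The combination B + D + E can be checked with a small Python model. It is a sketch under one stated assumption: deploying from one stage to the next requires the pipeline Admin role plus at least Contributor on both the source and target workspaces. The role ranking and the `can_deploy` function are hypothetical illustrations, not a Fabric API.

```python
# Hypothetical model of the deployment pipeline permission check (not a Fabric API).
# Assumption: deploying from one stage to the next requires the pipeline Admin
# role plus at least Contributor on both the source and target workspaces.
ROLE_RANK = {"Viewer": 1, "Contributor": 2, "Member": 3, "Admin": 4}


def can_deploy(is_pipeline_admin: bool, source_role: str, target_role: str) -> bool:
    """Return True if a user with these roles could deploy source -> target."""
    if not is_pipeline_admin:
        return False
    needed = ROLE_RANK["Contributor"]
    return ROLE_RANK[source_role] >= needed and ROLE_RANK[target_role] >= needed


# Workspace access assigned to the developers in the answer (B, D and E).
developer = {"Development": "Contributor", "Test": "Contributor", "Production": "Viewer"}

print(can_deploy(True, developer["Development"], developer["Test"]))  # True
print(can_deploy(True, developer["Test"], developer["Production"]))   # False
```

Because the check needs Contributor on the target, Viewer on Production is enough to block deployments there while still letting developers see that stage.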

You have a Fabric tenant that contains a warehouse. The warehouse uses row-level security (RLS).

You create a Direct Lake semantic model that uses the Delta tables and RLS of the warehouse.

When users interact with a report built from the model, which mode will be used by the DAX queries?

DirectQuery

Dual

Direct Lake

Import

Answer is DirectQuery

Row-level security applies only to queries on a warehouse or SQL analytics endpoint in Fabric. Power BI queries on a warehouse in Direct Lake mode therefore fall back to DirectQuery mode so that row-level security is enforced.
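A simplified Python model of that fallback decision follows. It assumes the semantic model's DirectLakeBehavior property takes one of the values "Automatic" (the default, fallback allowed), "DirectLakeOnly" (fallback disabled), or "DirectQueryOnly". It also assumes that warehouse RLS is the only fallback trigger worth modeling here. The `query_mode` function is hypothetical and only illustrates the rule.

```python
# Hypothetical model of query-mode selection for a Direct Lake semantic model.
# Assumptions: DirectLakeBehavior is "Automatic" (default), "DirectLakeOnly"
# or "DirectQueryOnly"; warehouse RLS is the only fallback trigger modeled.
def query_mode(direct_lake_behavior: str, warehouse_rls: bool) -> str:
    if direct_lake_behavior == "DirectQueryOnly":
        return "DirectQuery"
    if not warehouse_rls:
        return "Direct Lake"
    # RLS is enforced only by the warehouse / SQL analytics endpoint, so the
    # DAX query has to be sent there as SQL instead of reading Delta files directly.
    if direct_lake_behavior == "Automatic":
        return "DirectQuery"
    raise RuntimeError("Fallback to DirectQuery required but disabled by DirectLakeOnly")


print(query_mode("Automatic", warehouse_rls=True))   # DirectQuery
print(query_mode("Automatic", warehouse_rls=False))  # Direct Lake
```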

Reference:

https://learn.microsoft.com/en-us/fabric/data-warehouse/row-level-security

You have a Fabric tenant that contains a semantic model. The model uses Direct Lake mode. You suspect that some DAX queries load unnecessary columns into memory. You need to identify the frequently used columns that are loaded into memory. What are two ways to achieve the goal?

Use the Analyze in Excel feature.

Use the Vertipaq Analyzer tool.

Query the $System.DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS dynamic management view (DMV).

Query the DISCOVER_MEMORYGRANT dynamic management view (DMV).

Answers are:

B. Use the Vertipaq Analyzer tool.

C. Query the $System.DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS dynamic management view (DMV).

A is incorrect: Analyze in Excel is for data exploration and visualization, not for inspecting which columns are loaded into memory.

D is incorrect: DISCOVER_MEMORYGRANT provides information about memory grants for queries, not about which columns are resident in memory.
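Option C can be made concrete with a Python sketch. It assumes you run the DMV in a client such as DAX Studio and export the rows. It also assumes each row carries DIMENSION_NAME, COLUMN_ID, ISRESIDENT and TEMPERATURE fields; verify these names against the DMV's actual output. The `hot_columns` helper and the sample rows are hypothetical.

```python
# Sketch: find the "hottest" resident columns in rows returned by
# $SYSTEM.DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS.
# Assumption: each row has DIMENSION_NAME, COLUMN_ID, ISRESIDENT and TEMPERATURE.
DMV_QUERY = """
SELECT DIMENSION_NAME, COLUMN_ID, ISRESIDENT, TEMPERATURE, LAST_ACCESSED
FROM $SYSTEM.DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS
ORDER BY [TEMPERATURE] DESC
"""


def hot_columns(rows, top_n=10):
    """Return (table, column, temperature) for resident columns, hottest first."""
    hottest = {}
    for row in rows:
        if not row["ISRESIDENT"]:
            continue  # segment is not loaded in memory
        key = (row["DIMENSION_NAME"], row["COLUMN_ID"])
        # The DMV returns one row per segment; keep the max temperature per column.
        hottest[key] = max(hottest.get(key, 0.0), row["TEMPERATURE"])
    ranked = sorted(hottest.items(), key=lambda item: item[1], reverse=True)
    return [(table, column, temp) for (table, column), temp in ranked[:top_n]]


# Hypothetical DMV output, for illustration only.
sample = [
    {"DIMENSION_NAME": "FactSales", "COLUMN_ID": "Amount", "ISRESIDENT": True, "TEMPERATURE": 40.0},
    {"DIMENSION_NAME": "FactSales", "COLUMN_ID": "Amount", "ISRESIDENT": True, "TEMPERATURE": 55.0},
    {"DIMENSION_NAME": "FactSales", "COLUMN_ID": "Comment", "ISRESIDENT": False, "TEMPERATURE": 0.0},
    {"DIMENSION_NAME": "DimDate", "COLUMN_ID": "Year", "ISRESIDENT": True, "TEMPERATURE": 12.5},
]
print(hot_columns(sample))
# [('FactSales', 'Amount', 55.0), ('DimDate', 'Year', 12.5)]
```

Columns that are resident but rarely hot are candidates for the "unnecessary columns" the question describes.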

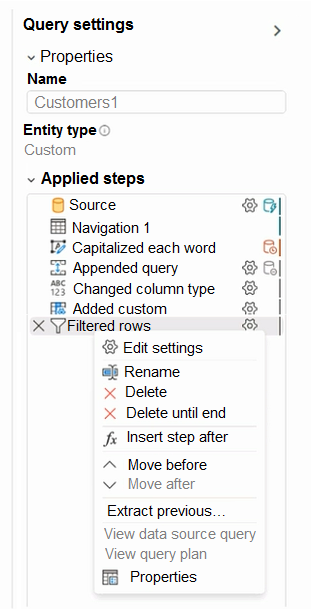

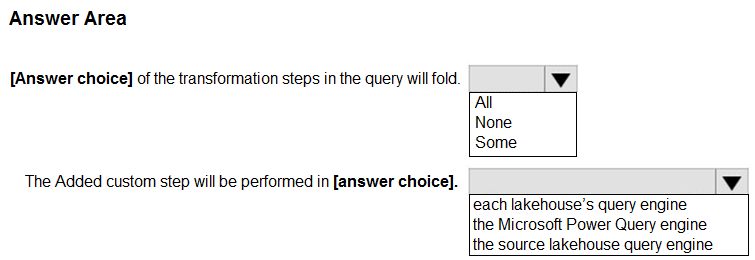

You have a Fabric tenant that contains two lakehouses. You are building a dataflow that will combine data from the lakehouses. The applied steps from one of the queries in the dataflow are shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.


If a step folds, its work is pushed to the data source. The step folding indicator icons show whether folding occurs for each step.
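The following Python model shows how the indicators typically propagate along the applied steps. It simplifies the real indicators (which also include states such as "Might fold" and "Opaque") to two states. It assumes a step can fold only if it is foldable and every earlier step also folded. The step names are hypothetical, not those in the exhibit.

```python
# Hypothetical two-state model of step folding indicators: once a step breaks
# folding, later steps run in the mashup engine instead of the data source.
def folding_indicators(steps):
    """steps: list of (name, foldable) pairs -> list of (name, indicator)."""
    result = []
    still_folding = True
    for name, foldable in steps:
        still_folding = still_folding and foldable
        result.append((name, "Folding" if still_folding else "Not folding"))
    return result


query = [("Source", True), ("Navigation", True), ("Filtered rows", True),
         ("Added index column", False), ("Removed columns", True)]
for name, indicator in folding_indicators(query):
    print(f"{name}: {indicator}")
```

Here "Removed columns" is foldable on its own but is reported as not folding, because an earlier step already broke folding.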

Reference:

https://learn.microsoft.com/en-us/power-query/step-folding-indicators#step-diagnostics-indicators

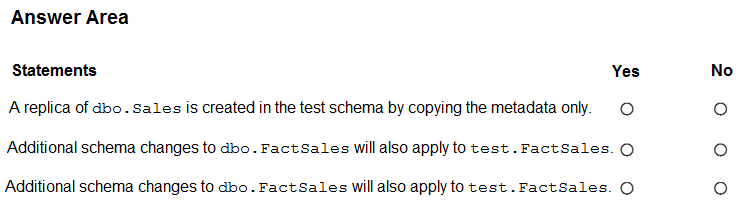

You have a Fabric tenant that contains a warehouse named Warehouse1. Warehouse1 contains a fact table named FactSales that has one billion rows.

You run the following T-SQL statement.

CREATE TABLE test.FactSales AS CLONE OF Dbo.FactSales;

For each of the following statements, select Yes if the statement is true. Otherwise, select No.


Answer is Y-N-N

The second and third statements are equivalent: both amount to "Additional schema changes to dbo.FactSales will also apply to test.FactSales". That is false, because after creation the clone is independent of its source.

What this statement does:

- A new table called test.FactSales is created.

- The structure (columns, data types, constraints) of test.FactSales will be identical to that of Dbo.FactSales at the time of creation.

- The data in test.FactSales will be a snapshot of the data in Dbo.FactSales at the moment of table creation.
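The snapshot behavior can be illustrated with a toy Python model of a zero-copy clone. It assumes the clone copies the source's metadata (schema plus the list of data files) at creation time and shares the underlying files. The `Table` class and `clone` function are hypothetical and do not reflect the warehouse's internal implementation.

```python
# Hypothetical model of zero-copy table cloning: the clone copies the source's
# metadata at creation time; afterwards each table evolves independently.
import copy
from dataclasses import dataclass, field


@dataclass
class Table:
    name: str
    schema: dict                                # column name -> data type
    files: list = field(default_factory=list)   # references to Parquet files


def clone(source: Table, new_name: str) -> Table:
    # Copy metadata only; the file references point at the same data files.
    return Table(new_name, copy.deepcopy(source.schema), list(source.files))


src = Table("dbo.FactSales", {"SaleId": "bigint", "Amount": "decimal(18,2)"}, ["part-0001.parquet"])
cln = clone(src, "test.FactSales")

src.schema["Discount"] = "decimal(18,2)"  # later schema change on the source
src.files.append("part-0002.parquet")     # later data load on the source

print("Discount" in cln.schema)  # False: schema change not applied to the clone
print(cln.files)                 # ['part-0001.parquet']: data as of creation
```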

Reference:

https://learn.microsoft.com/en-us/fabric/data-warehouse/clone-table

You have source data in a folder on a local computer.

You need to create a solution that will use Fabric to populate a data store. The solution must meet the following requirements:

- Support the use of dataflows to load and append data to the data store.

- Ensure that Delta tables are V-Order optimized and compacted automatically.

Which type of data store should you use?

a lakehouse

an Azure SQL database

a warehouse

a KQL database

Answer is a lakehouse

To meet the requirements of supporting dataflows to load and append data to the data store while ensuring that Delta tables are V-Order optimized and compacted automatically, you should use a lakehouse in Fabric as your solution.

Reference:

https://learn.microsoft.com/en-us/fabric/data-engineering/delta-optimization-and-v-order?tabs=sparksql

You have a Fabric tenant that contains a warehouse.

Several times a day, the performance of all warehouse queries degrades. You suspect that Fabric is throttling the compute used by the warehouse.

What should you use to identify whether throttling is occurring?

the Capacity settings

the Monitoring hub

dynamic management views (DMVs)

the Microsoft Fabric Capacity Metrics app

Answer is the Microsoft Fabric Capacity Metrics app

The Microsoft Fabric Capacity Metrics app (the metrics app) is a monitoring app in the Power BI service. It tracks and analyzes capacity resource utilization, including throttling and rejected operations.
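The throttling stages in the linked throttling documentation depend on how many minutes of future capacity the smoothed usage has already consumed. The following Python sketch encodes those stages as documented there. The `throttling_stage` function is hypothetical and only illustrates the policy; the metrics app is still where you would observe it.

```python
# Sketch of the Fabric throttling stages, keyed on minutes of future capacity
# already consumed by smoothed usage (thresholds per the throttling docs).
def throttling_stage(future_minutes_used: float) -> str:
    """Map consumed future capacity (minutes) to the documented throttling stage."""
    if future_minutes_used <= 10:
        return "Overage protection"     # no throttling yet
    if future_minutes_used <= 60:
        return "Interactive delay"      # interactive jobs are delayed
    if future_minutes_used <= 24 * 60:
        return "Interactive rejection"  # interactive jobs are rejected
    return "Background rejection"       # all new requests are rejected


for minutes in (5, 30, 120, 2000):
    print(minutes, throttling_stage(minutes))
```

Warehouse queries degrading "several times a day" would fit usage that crosses into the interactive delay stage during peaks.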

Reference:

https://learn.microsoft.com/en-us/fabric/enterprise/throttling#track-rejected-operations

https://learn.microsoft.com/en-us/fabric/enterprise/metrics-app

You have a Fabric tenant that contains a warehouse.

A user discovers that a report that usually takes two minutes to render has been running for 45 minutes and has still not rendered.

You need to identify what is preventing the report query from completing.

Which dynamic management view (DMV) should you use?

sys.dm_exec_requests

sys.dm_exec_sessions

sys.dm_exec_connections

sys.dm_pdw_exec_requests

Answer is sys.dm_exec_requests

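You can use the sys.dm_exec_requests dynamic management view (DMV) to identify what is preventing the report query from completing. It lists the requests currently executing in the warehouse, with details such as their status, start time, and total elapsed time.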
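A Python sketch of how the DMV output might be triaged follows. It assumes that rows from sys.dm_exec_requests include session_id, status, blocking_session_id and total_elapsed_time (in milliseconds), as in SQL Server's version of the view. Confirm these columns are available in your warehouse. The query text, the `blocked_requests` helper and the sample rows are illustrative assumptions.

```python
# Sketch: triage rows from sys.dm_exec_requests for long-running, blocked requests.
# Assumption: rows include session_id, status, blocking_session_id and
# total_elapsed_time (milliseconds), as in SQL Server's sys.dm_exec_requests.
REQUESTS_QUERY = """
SELECT session_id, status, blocking_session_id, total_elapsed_time
FROM sys.dm_exec_requests
ORDER BY total_elapsed_time DESC;
"""


def blocked_requests(rows, min_elapsed_ms=2 * 60 * 1000):
    """Return (session_id, blocking_session_id) for slow requests blocked by another session."""
    return [
        (r["session_id"], r["blocking_session_id"])
        for r in rows
        if r["total_elapsed_time"] > min_elapsed_ms and r.get("blocking_session_id")
    ]


# Hypothetical rows: the report query (session 61) has run for 45 minutes.
rows = [
    {"session_id": 61, "status": "suspended", "blocking_session_id": 58, "total_elapsed_time": 45 * 60 * 1000},
    {"session_id": 58, "status": "running", "blocking_session_id": 0, "total_elapsed_time": 50 * 60 * 1000},
]
print(blocked_requests(rows))  # [(61, 58)]
```

The default threshold of two minutes mirrors the report's usual render time from the question; a blocking_session_id of 0 means the request is not blocked.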

Reference:

https://learn.microsoft.com/en-us/fabric/data-warehouse/monitor-using-dmv#identify-and-kill-a-long-running-query