Professional Data Engineer on Google Cloud Platform

Which Cloud Dataflow / Beam feature should you use to aggregate data in an unbounded data source every hour based on the time when the data entered the pipeline?

An hourly watermark

An event time trigger

The with Allowed Lateness method

A processing time trigger

Answer is A processing time trigger

When collecting and grouping data into windows, Beam uses triggers to determine when to emit the aggregated results of each window.

Processing time triggers. These triggers operate on the processing time the time when the data element is processed at any given stage in the pipeline.

Event time triggers. These triggers operate on the event time, as indicated by the timestamp on each data element. Beams default trigger is event time-based.

Reference:

https://beam.apache.org/documentation/programming-guide/#triggers

You are planning to use Google's Dataflow SDK to analyze customer data such as displayed below. Your project requirement is to extract only the customer name from the data source and then write to an output PCollection.

Tom,555 X street -

Tim,553 Y street -

Sam, 111 Z street -

Which operation is best suited for the above data processing requirement?

ParDo

Sink API

Source API

Data extraction

Answer is ParDo

In Google Cloud dataflow SDK, you can use the ParDo to extract only a customer name of each element in your PCollection.

Reference:

https://cloud.google.com/dataflow/model/par-do

Does Dataflow process batch data pipelines or streaming data pipelines?

Only Batch Data Pipelines

Both Batch and Streaming Data Pipelines

Only Streaming Data Pipelines

None of the above

Answer is Both Batch and Streaming Data Pipelines

Dataflow is a unified processing model, and can execute both streaming and batch data pipelines

Reference:

https://cloud.google.com/dataflow/

The _________ for Cloud Bigtable makes it possible to use Cloud Bigtable in a Cloud Dataflow pipeline.

Cloud Dataflow connector

DataFlow SDK

BiqQuery API

BigQuery Data Transfer Service

Answer is Cloud Dataflow connector

The Cloud Dataflow connector for Cloud Bigtable makes it possible to use Cloud Bigtable in a Cloud Dataflow pipeline. You can use the connector for both batch and streaming operations.

Reference:

https://cloud.google.com/bigtable/docs/dataflow-hbase

https://cloud.google.com/bigtable/docs/hbase-dataflow-java

The Dataflow SDKs have been recently transitioned into which Apache service?

Apache Spark

Apache Hadoop

Apache Kafka

Apache Beam

Answer is Apache Beam

Dataflow SDKs are being transitioned to Apache Beam, as per the latest Google directive

Reference:

https://cloud.google.com/dataflow/docs/

Which Java SDK class can you use to run your Dataflow programs locally?

LocalRunner

DirectPipelineRunner

MachineRunner

LocalPipelineRunner

Answer is DirectPipelineRunner

DirectPipelineRunner allows you to execute operations in the pipeline directly, without any optimization. Useful for small local execution and tests

Reference:

https://cloud.google.com/dataflow/java-sdk/JavaDoc/com/google/cloud/dataflow/sdk/runners/DirectPipelineRunner

https://beam.apache.org/documentation/runners/direct/

https://beam.apache.org/documentation/runners/direct/

Which of the following is NOT one of the three main types of triggers that Dataflow supports?

Trigger based on element size in bytes

Trigger that is a combination of other triggers

Trigger based on element count

Trigger based on time

Answer is Trigger based on element size in bytes

There are three major kinds of triggers that Dataflow supports: 1. Time-based triggers 2. Data-driven triggers. You can set a trigger to emit results from a window when that window has received a certain number of data elements. 3. Composite triggers. These triggers combine multiple time-based or data-driven triggers in some logical way

Reference:

https://cloud.google.com/dataflow/model/triggers

What Dataflow concept determines when a Window's contents should be output based on certain criteria being met?

Sessions

OutputCriteria

Windows

Triggers

Answer is Triggers

Triggers control when the elements for a specific key and window are output. As elements arrive, they are put into one or more windows by a Window transform and its associated WindowFn, and then passed to the associated Trigger to determine if the Windows contents should be output.

Reference:

https://cloud.google.com/dataflow/java-sdk/JavaDoc/com/google/cloud/dataflow/sdk/transforms/windowing/Trigger

When running a pipeline that has a BigQuery source, on your local machine, you continue to get permission denied errors. What could be the reason for that?

Your gcloud does not have access to the BigQuery resources

BigQuery cannot be accessed from local machines

You are missing gcloud on your machine

Pipelines cannot be run locally

Answer is Your gcloud does not have access to the BigQuery resources

When reading from a Dataflow source or writing to a Dataflow sink using DirectPipelineRunner, the Cloud Platform account that you configured with the gcloud executable will need access to the corresponding source/sink

Reference:

https://cloud.google.com/dataflow/java-sdk/JavaDoc/com/google/cloud/dataflow/sdk/runners/DirectPipelineRunner

You are deploying a new storage system for your mobile application, which is a media streaming service. You decide the best fit is Google Cloud Datastore. You have entities with multiple properties, some of which can take on multiple values. For example, in the entity 'Movie' the property 'actors' and the property 'tags' have multiple values but the property 'date released' does not. A typical query would ask for all movies with actor=

How should you avoid a combinatorial explosion in the number of indexes?

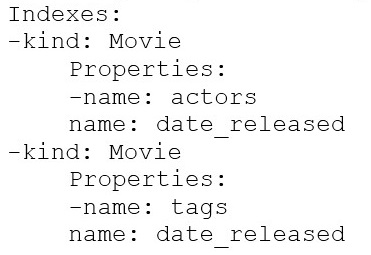

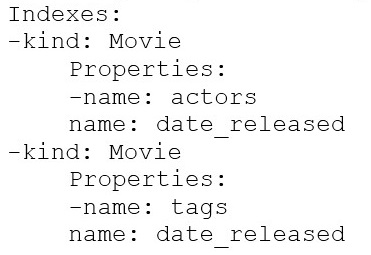

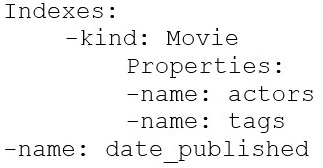

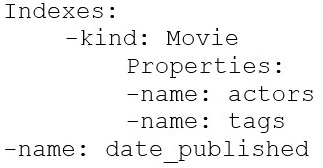

Manually configure the index in your index config as follows:

Manually configure the index in your index config as follows:

Set the following in your entity options: exclude_from_indexes = 'actors, tags'

Set the following in your entity options: exclude_from_indexes = 'date_published'

Answer is Manually configure the index in your index config

You can circumvent the exploding index by manually configuring an index in your index configuration file.

Reference:

https://cloud.google.com/datastore/docs/concepts/indexes#index_limits