SAA-C03: AWS Certified Solutions Architect - Associate

A company recently launched Linux-based application instances on Amazon EC2 in a private subnet and launched a Linux-based bastion host on an Amazon EC2 instance in a public subnet of a VPC. A solutions architect needs to connect from the on-premises network, through the company's internet connection, to the bastion host, and to the application servers. The solutions architect must make sure that the security groups of all the EC2 instances will allow that access.

Which combination of steps should the solutions architect take to meet these requirements? (Choose two.)

A. Replace the current security group of the bastion host with one that only allows inbound access from the application instances.

B. Replace the current security group of the bastion host with one that only allows inbound access from the internal IP range for the company.

C. Replace the current security group of the bastion host with one that only allows inbound access from the external IP range for the company.

D. Replace the current security group of the application instances with one that allows inbound SSH access from only the private IP address of the bastion host.

E. Replace the current security group of the application instances with one that allows inbound SSH access from only the public IP address of the bastion host.

Answers are:

C. Replace the current security group of the bastion host with one that only allows inbound access from the external IP range for the company.

D. Replace the current security group of the application instances with one that allows inbound SSH access from only the private IP address of the bastion host.

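on-premises -----> bastion host: this hop crosses the internet, so the bastion host's security group must allow the company's external (public) IP range.

bastion host -----> application instances: both sit in the same VPC, so this hop uses the bastion host's private IP address, and the application security group only needs to allow SSH from that address.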
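The two rules can be sketched as data and checked with a small helper. This is a minimal illustration, not AWS's own rule evaluation: the CIDR 203.0.113.0/24 (standing in for the company's external range), the bastion private IP 10.0.1.10, and the helper name `allows_ssh` are all assumptions made for the example. The rule dictionaries follow the `IpPermissions` shape that the EC2 API uses for ingress rules.

```python
import ipaddress

# Hypothetical values, chosen only for illustration.
COMPANY_EXTERNAL_CIDR = "203.0.113.0/24"  # public range the company connects from
BASTION_PRIVATE_IP = "10.0.1.10"          # bastion host's address inside the VPC

# Ingress rules in the IpPermissions shape used by the EC2 API.
bastion_ingress = [{
    "IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
    "IpRanges": [{"CidrIp": COMPANY_EXTERNAL_CIDR}],
}]
app_ingress = [{
    "IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
    "IpRanges": [{"CidrIp": f"{BASTION_PRIVATE_IP}/32"}],
}]


def allows_ssh(rules, source_ip):
    """Return True if any rule admits TCP port 22 from source_ip."""
    src = ipaddress.ip_address(source_ip)
    for rule in rules:
        if rule["IpProtocol"] != "tcp":
            continue
        if not rule["FromPort"] <= 22 <= rule["ToPort"]:
            continue
        for ip_range in rule["IpRanges"]:
            if src in ipaddress.ip_network(ip_range["CidrIp"]):
                return True
    return False
```

With these rules, an address in the company's external range reaches the bastion host, and only the bastion host's private address reaches the application instances.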

Reference:

https://aws.amazon.com/solutions/implementations/linux-bastion/

A solutions architect is designing a two-tier web application. The application consists of a public-facing web tier hosted on Amazon EC2 in public subnets. The database tier consists of Microsoft SQL Server running on Amazon EC2 in a private subnet. Security is a high priority for the company.

How should security groups be configured in this situation? (Choose two.)

A. Configure the security group for the web tier to allow inbound traffic on port 443 from 0.0.0.0/0.

B. Configure the security group for the web tier to allow outbound traffic on port 443 from 0.0.0.0/0.

C. Configure the security group for the database tier to allow inbound traffic on port 1433 from the security group for the web tier.

D. Configure the security group for the database tier to allow outbound traffic on ports 443 and 1433 to the security group for the web tier.

E. Configure the security group for the database tier to allow inbound traffic on ports 443 and 1433 from the security group for the web tier.

Answers are:

A. Configure the security group for the web tier to allow inbound traffic on port 443 from 0.0.0.0/0.

C. Configure the security group for the database tier to allow inbound traffic on port 1433 from the security group for the web tier.

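Security groups deny all inbound traffic by default and are stateful, so:

A: Allows inbound HTTPS traffic (port 443) from the internet to the web servers.

B: Not required. Because security groups are stateful, responses to allowed inbound traffic are permitted automatically.

C: 1433 is the SQL Server default port, so allow inbound access on it from the web tier only.

D: Not required. Return traffic to the web tier is allowed automatically, and the database tier does not need to initiate connections to the web tier.

E: Opens port 443 unnecessarily on the database tier, so it is less secure.

A and C together are the most secure configuration.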
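The A and C rules can be written out as data to make the difference between a CIDR source and a security group source visible. This is a sketch under assumptions: the security group IDs are hypothetical, and `open_ports` is a helper invented for the example rather than an AWS API. The rule shapes (`IpRanges`, `UserIdGroupPairs`) follow the EC2 `IpPermissions` structure.

```python
# Hypothetical security group IDs, for illustration only.
WEB_SG = "sg-0123456789abcdef0"
DB_SG = "sg-0fedcba9876543210"

ingress = {
    # Web tier: HTTPS from anywhere.
    WEB_SG: [{"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
              "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}],
    # Database tier: SQL Server port, only from members of the web tier group.
    DB_SG: [{"IpProtocol": "tcp", "FromPort": 1433, "ToPort": 1433,
             "UserIdGroupPairs": [{"GroupId": WEB_SG}]}],
}


def open_ports(group_id, source_group=None):
    """Ports reachable in group_id: from anywhere when source_group is None,
    otherwise from members of source_group."""
    ports = set()
    for rule in ingress[group_id]:
        from_anywhere = any(r["CidrIp"] == "0.0.0.0/0"
                            for r in rule.get("IpRanges", []))
        from_group = any(p["GroupId"] == source_group
                         for p in rule.get("UserIdGroupPairs", []))
        if from_anywhere or (source_group is not None and from_group):
            ports.update(range(rule["FromPort"], rule["ToPort"] + 1))
    return ports
```

No outbound rules appear in the sketch because, as noted above, return traffic for an allowed inbound connection is permitted automatically.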

A company recently signed a contract with an AWS Managed Service Provider (MSP) Partner for help with an application migration initiative. A solutions architect needs to share an Amazon Machine Image (AMI) from an existing AWS account with the MSP Partner's AWS account. The AMI is backed by Amazon Elastic Block Store (Amazon EBS) and uses an AWS Key Management Service (AWS KMS) customer managed key to encrypt EBS volume snapshots.

What is the MOST secure way for the solutions architect to share the AMI with the MSP Partner's AWS account?

A. Make the encrypted AMI and snapshots publicly available. Modify the key policy to allow the MSP Partner's AWS account to use the key.

B. Modify the launchPermission property of the AMI. Share the AMI with the MSP Partner's AWS account only. Modify the key policy to allow the MSP Partner's AWS account to use the key.

C. Modify the launchPermission property of the AMI. Share the AMI with the MSP Partner's AWS account only. Modify the key policy to trust a new KMS key that is owned by the MSP Partner for encryption.

D. Export the AMI from the source account to an Amazon S3 bucket in the MSP Partner's AWS account. Encrypt the S3 bucket with a new KMS key that is owned by the MSP Partner. Copy and launch the AMI in the MSP Partner's AWS account.

Answer is Modify the launchPermission property of the AMI. Share the AMI with the MSP Partner's AWS account only. Modify the key policy to allow the MSP Partner's AWS account to use the key.

The most secure way for the solutions architect to share the AMI with the MSP Partner's AWS account would be to modify the launchPermission property of the AMI and share it with the MSP Partner's AWS account only. The key policy should also be modified to allow the MSP Partner's AWS account to use the key. This ensures that the AMI is only shared with the MSP Partner and is encrypted with a key that they are authorized to use.

Option A, making the AMI and snapshots publicly available, is not a secure option because it would expose the AMI to every AWS account. In addition, an AMI backed by encrypted snapshots cannot be made public.

Option C, modifying the key policy to trust a new KMS key owned by the MSP Partner, does not work. A key policy grants permissions to principals such as accounts, users, and roles, not to other KMS keys, so the MSP Partner's account would still be unable to use the key that encrypts the snapshots.

Option D, exporting the AMI to an S3 bucket in the MSP Partner's AWS account and encrypting the S3 bucket with a new KMS key owned by the MSP Partner, is not the most secure option because it creates a second copy of the AMI outside the source account's control and adds export and re-encryption steps that sharing the AMI directly avoids.
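The two changes in the correct answer can be sketched as the payloads they involve. This is an illustration under assumptions: the account ID is a placeholder, the statement ID is invented, and the list of KMS actions is one commonly used set for sharing encrypted snapshots rather than something stated in the question. The `launch_permission` structure matches the shape accepted by the EC2 `ModifyImageAttribute` API.

```python
import json

# Hypothetical account ID for the MSP Partner, for illustration only.
MSP_ACCOUNT_ID = "444455556666"

# Launch permission payload: share with exactly one account, not with "all".
launch_permission = {"Add": [{"UserId": MSP_ACCOUNT_ID}]}

# Statement to add to the customer managed key's policy so the partner
# account can use the key that encrypts the AMI's snapshots.
key_policy_statement = {
    "Sid": "AllowMspAccountToUseKey",  # assumed name
    "Effect": "Allow",
    "Principal": {"AWS": f"arn:aws:iam::{MSP_ACCOUNT_ID}:root"},
    # Assumed action set; adjust to what the partner actually needs.
    "Action": ["kms:Decrypt", "kms:DescribeKey",
               "kms:ReEncrypt*", "kms:CreateGrant"],
    "Resource": "*",
}

print(json.dumps(key_policy_statement, indent=2))
```

Naming a single account in both places is what keeps the share limited to the MSP Partner.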

Reference:

https://docs.aws.amazon.com/kms/latest/developerguide/key-policy-modifying-external-accounts.html

A company has several web servers that need to frequently access a common Amazon RDS MySQL Multi-AZ DB instance. The company wants a secure method for the web servers to connect to the database while meeting a security requirement to rotate user credentials frequently.

Which solution meets these requirements?

A. Store the database user credentials in AWS Secrets Manager. Grant the necessary IAM permissions to allow the web servers to access AWS Secrets Manager.

B. Store the database user credentials in AWS Systems Manager OpsCenter. Grant the necessary IAM permissions to allow the web servers to access OpsCenter.

C. Store the database user credentials in a secure Amazon S3 bucket. Grant the necessary IAM permissions to allow the web servers to retrieve credentials and access the database.

D. Store the database user credentials in files encrypted with AWS Key Management Service (AWS KMS) on the web server file system. The web server should be able to decrypt the files and access the database.

Answer is Store the database user credentials in AWS Secrets Manager. Grant the necessary IAM permissions to allow the web servers to access AWS Secrets Manager.

Option A meets both requirements in the question: a secure method for the web servers to connect to the database, and frequent rotation of user credentials. AWS Secrets Manager is designed specifically to store and manage secrets such as database credentials, and it can rotate them automatically on a schedule, with built-in rotation support for Amazon RDS databases. The web servers retrieve the current credentials at run time through IAM-authorized API calls, so nothing needs to be hard-coded.

Option B, storing the database user credentials in AWS Systems Manager OpsCenter, is not a good fit for this use case because OpsCenter is a tool for managing and monitoring systems, and it is not designed for storing and managing secrets.

Option C, storing the database user credentials in a secure Amazon S3 bucket, is not a good fit. While it is possible to store secrets in S3, S3 is an object storage service, not a secrets management service, and it does not provide the automated rotation that AWS Secrets Manager does.

Option D, storing the database user credentials in files encrypted with AWS Key Management Service (AWS KMS) on the web server file system, is not a secure option because it relies on the security of the web server file system, which may not be as secure as a dedicated secrets management service like AWS Secrets Manager. Additionally, this option does not meet the requirement to rotate user credentials frequently because it does not provide an automated way to rotate the credentials.
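A web server's side of option A might look like the following sketch. It assumes the secret is stored as a JSON string with `username` and `password` keys and that the secret name `prod/web/mysql` exists; both are hypothetical. The helper expects an object that behaves like `boto3.client("secretsmanager")`, and a stand-in client is used here so the example runs without AWS access.

```python
import json


def get_db_credentials(secrets_client, secret_id):
    """Fetch and parse a JSON secret through the GetSecretValue call."""
    response = secrets_client.get_secret_value(SecretId=secret_id)
    secret = json.loads(response["SecretString"])
    return secret["username"], secret["password"]


class FakeSecretsClient:
    """Stand-in for the real client; returns a fixed example secret."""

    def get_secret_value(self, SecretId):
        payload = {"username": "app_user", "password": "example-only"}
        return {"SecretString": json.dumps(payload)}


# Hypothetical secret name.
user, password = get_db_credentials(FakeSecretsClient(), "prod/web/mysql")
```

Fetching the secret when a connection is opened, rather than caching it indefinitely, is one way to make sure rotated credentials are picked up.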

A company uses Amazon S3 to store its confidential audit documents. The S3 bucket uses bucket policies to restrict access to audit team IAM user credentials according to the principle of least privilege. Company managers are worried about accidental deletion of documents in the S3 bucket and want a more secure solution.

What should a solutions architect do to secure the audit documents?

A. Enable the versioning and MFA Delete features on the S3 bucket.

B. Enable multi-factor authentication (MFA) on the IAM user credentials for each audit team IAM user account.

C. Add an S3 Lifecycle policy to the audit team's IAM user accounts to deny the s3:DeleteObject action during audit dates.

D. Use AWS Key Management Service (AWS KMS) to encrypt the S3 bucket and restrict audit team IAM user accounts from accessing the KMS key.

Answer is Enable the versioning and MFA Delete features on the S3 bucket.

This secures the audit documents by adding a layer of protection against accidental deletion. With versioning enabled, deleted or overwritten objects are preserved as previous versions, so the company can recover them if needed. With MFA Delete enabled, permanently deleting an object version or changing the bucket's versioning state requires a valid MFA code, which provides an additional layer of security.

Option B, enabling MFA on the IAM user credentials for each audit team IAM user account, protects sign-in but would not prevent an authenticated user from accidentally deleting documents.

Option C is not valid: S3 Lifecycle policies are configured on buckets to transition or expire objects, so they cannot be attached to IAM user accounts or deny the s3:DeleteObject action. Even an equivalent deny rule would not provide protection outside of the specified audit dates.

Option D, using AWS KMS to encrypt the S3 bucket and restricting audit team IAM user accounts from accessing the KMS key, would stop the audit team from reading the documents but would not provide protection against accidental deletion.
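As a rough sketch, the request that turns on both features has the following shape. The bucket name, MFA device ARN, and code are placeholders, and `versioning_request` is a helper invented for the example. The keys match the parameters of the S3 `PutBucketVersioning` call, which must be made by the bucket owner's root user when MFA Delete is being enabled.

```python
def versioning_request(bucket, mfa_serial, mfa_code):
    """Build keyword arguments for S3 PutBucketVersioning with MFA Delete."""
    return {
        "Bucket": bucket,
        # Device serial number (or ARN) and current code, separated by a space.
        "MFA": f"{mfa_serial} {mfa_code}",
        "VersioningConfiguration": {
            "Status": "Enabled",
            "MFADelete": "Enabled",
        },
    }


# Hypothetical bucket and MFA device.
params = versioning_request(
    "example-audit-docs",
    "arn:aws:iam::111122223333:mfa/root-account-mfa-device",
    "123456",
)
# With boto3 this would be passed as: s3_client.put_bucket_versioning(**params)
```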

A company has applications that run on Amazon EC2 instances in a VPC. One of the applications needs to call the Amazon S3 API to store and read objects. According to the company's security regulations, no traffic from the applications is allowed to travel across the internet.

Which solution will meet these requirements?

A. Configure an S3 gateway endpoint.

B. Create an S3 bucket in a private subnet.

C. Create an S3 bucket in the same AWS Region as the EC2 instances.

D. Configure a NAT gateway in the same subnet as the EC2 instances.

Answer is Configure an S3 gateway endpoint.

A gateway endpoint is a VPC endpoint that you can use to connect to Amazon S3 from within your VPC. Traffic between your VPC and Amazon S3 never leaves the Amazon network, so it doesn't traverse the internet. This means you can access Amazon S3 without the need to use a NAT gateway or a VPN connection.

Option B (creating an S3 bucket in a private subnet) is not a valid solution because S3 buckets do not have subnets.

Option C (creating an S3 bucket in the same AWS Region as the EC2 instances) is not a requirement for meeting the given security regulations.

Option D (configuring a NAT gateway in the same subnet as the EC2 instances) is not a valid solution because it would allow traffic to leave the VPC and travel across the Internet.
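A gateway endpoint request might be assembled as in the sketch below. The VPC ID, route table ID, and Region are placeholders, and `s3_gateway_endpoint_request` is a name made up for the example. The keys follow the parameters of the EC2 `CreateVpcEndpoint` call.

```python
def s3_gateway_endpoint_request(vpc_id, region, route_table_ids):
    """Build keyword arguments for the EC2 CreateVpcEndpoint API."""
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        # A gateway endpoint works by adding routes to these route tables.
        "RouteTableIds": list(route_table_ids),
    }


# Hypothetical resource IDs.
params = s3_gateway_endpoint_request(
    "vpc-0123456789abcdef0", "us-east-1", ["rtb-0123456789abcdef0"]
)
# With boto3 this would be passed as: ec2_client.create_vpc_endpoint(**params)
```

The route tables listed should be the ones associated with the subnets that host the application instances, since that is where the S3 route is needed.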

Reference:

https://docs.aws.amazon.com/vpc/latest/privatelink/gateway-endpoints.html

A company is storing sensitive user information in an Amazon S3 bucket. The company wants to provide secure access to this bucket from the application tier running on Amazon EC2 instances inside a VPC.

Which combination of steps should a solutions architect take to accomplish this? (Choose two.)

A. Configure a VPC gateway endpoint for Amazon S3 within the VPC.

B. Create a bucket policy to make the objects in the S3 bucket public.

C. Create a bucket policy that limits access to only the application tier running in the VPC.

D. Create an IAM user with an S3 access policy and copy the IAM credentials to the EC2 instance.

E. Create a NAT instance and have the EC2 instances use the NAT instance to access the S3 bucket.

Answers are:

A. Configure a VPC gateway endpoint for Amazon S3 within the VPC.

C. Create a bucket policy that limits access to only the application tier running in the VPC.

A. Correct. A gateway endpoint eliminates the need for the traffic to go over the internet, providing an added layer of security.

B. Incorrect. Making the objects public would expose the sensitive user information to anyone; access must be restricted to authorized entities only.

C. Correct. This helps maintain the confidentiality of the sensitive user information by limiting access to the application tier.

D. Incorrect. Copying long-lived IAM user credentials onto the EC2 instance is unnecessary and introduces additional security risk; an IAM role attached to the instance is the safer way to grant access.

E. Incorrect. Using a NAT instance to access the S3 bucket adds unnecessary complexity and overhead.

In summary, the recommended steps to provide secure access to the S3 from the application tier running on EC2 inside a VPC are to configure a VPC gateway endpoint for S3 within the VPC (option A) and create a bucket policy that limits access to only the application tier running in the VPC (option C).
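One common way to express option C is a bucket policy that denies any request not arriving through the VPC endpoint. The sketch below assumes that approach; the bucket name and endpoint ID are placeholders and `vpce_only_bucket_policy` is a helper invented for the example. The `aws:SourceVpce` condition key is the one AWS documents for this purpose.

```python
import json


def vpce_only_bucket_policy(bucket, vpc_endpoint_id):
    """Deny all S3 actions on the bucket unless the request comes
    through the given VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyAccessOutsideVpcEndpoint",  # assumed name
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [
                f"arn:aws:s3:::{bucket}",
                f"arn:aws:s3:::{bucket}/*",
            ],
            "Condition": {
                "StringNotEquals": {"aws:SourceVpce": vpc_endpoint_id}
            },
        }],
    }


# Hypothetical bucket name and endpoint ID.
policy = vpce_only_bucket_policy("example-user-data", "vpce-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```

A blanket deny like this also blocks access from the console and from outside the VPC, so it is worth testing carefully before applying it to a real bucket.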

Reference:

https://aws.amazon.com/premiumsupport/knowledge-center/s3-private-connection-noauthentication/

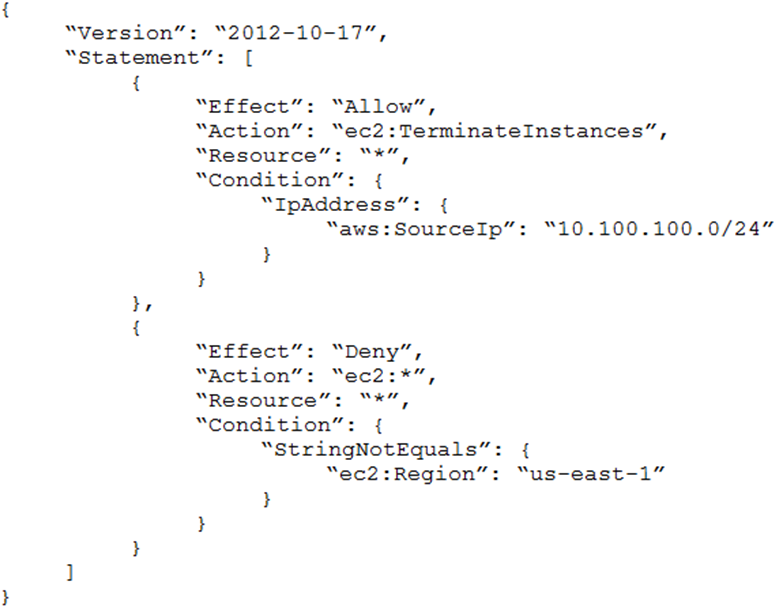

An Amazon EC2 administrator created the following policy associated with an IAM group containing several users:

What is the effect of this policy?

A. Users can terminate an EC2 instance in any AWS Region except us-east-1.

B. Users can terminate an EC2 instance with the IP address 10.100.100.1 in the us-east-1 Region.

C. Users can terminate an EC2 instance in the us-east-1 Region when the user's source IP is 10.100.100.254.

D. Users cannot terminate an EC2 instance in the us-east-1 Region when the user's source IP is 10.100.100.254.

Answer is Users can terminate an EC2 instance in the us-east-1 Region when the user's source IP is 10.100.100.254.

For example, in a subnet with CIDR block 10.0.0.0/24, the following five IP addresses are reserved:

10.0.0.0: Network address.

10.0.0.1: Reserved by AWS for the VPC router.

10.0.0.2: Reserved by AWS. The IP address of the DNS server is the base of the VPC network range plus two.

10.0.0.3: Reserved by AWS for future use.

10.0.0.255: Network broadcast address.
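The reserved addresses in the list above can be computed for any subnet with Python's standard `ipaddress` module. This is a small sketch that assumes 10.0.0.0/24 as the example subnet; the function name and dictionary keys are invented for illustration.

```python
import ipaddress


def reserved_addresses(cidr):
    """Return the five addresses AWS reserves in a subnet, keyed by purpose."""
    net = ipaddress.ip_network(cidr)
    base = net.network_address
    return {
        "network": base,
        "vpc_router": base + 1,
        "dns": base + 2,
        "future_use": base + 3,
        "broadcast": net.broadcast_address,
    }


reserved = reserved_addresses("10.0.0.0/24")
# Addresses left for instances once the five reserved ones are removed.
usable = ipaddress.ip_network("10.0.0.0/24").num_addresses - len(reserved)
```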

A company's containerized application runs on an Amazon EC2 instance. The application needs to download security certificates before it can communicate with other business applications. The company wants a highly secure solution to encrypt and decrypt the certificates in near real time. The solution also needs to store data in highly available storage after the data is encrypted.

Which solution will meet these requirements with the LEAST operational overhead?

A. Create AWS Secrets Manager secrets for encrypted certificates. Manually update the certificates as needed. Control access to the data by using fine-grained IAM access.

B. Create an AWS Lambda function that uses the Python cryptography library to receive and perform encryption operations. Store the function in an Amazon S3 bucket.

C. Create an AWS Key Management Service (AWS KMS) customer managed key. Allow the EC2 role to use the KMS key for encryption operations. Store the encrypted data on Amazon S3.

D. Create an AWS Key Management Service (AWS KMS) customer managed key. Allow the EC2 role to use the KMS key for encryption operations. Store the encrypted data on Amazon Elastic Block Store (Amazon EBS) volumes.

Answer is Create an AWS Key Management Service (AWS KMS) customer managed key. Allow the EC2 role to use the KMS key for encryption operations. Store the encrypted data on Amazon S3.

AWS KMS: Provides a managed service for secure key storage and encryption/decryption operations. This eliminates the need to manage encryption/decryption logic within the application itself.

Customer Managed Key: The company maintains control over the key, ensuring security.

EC2 Role Permissions: Granting permissions to the EC2 role allows the application to use KMS for encryption/decryption without managing individual credentials.

Amazon S3: Offers highly available and scalable storage for the encrypted certificates. S3 is generally cheaper than EBS for data that is not frequently accessed.

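A - Does not say where the encrypted data is stored (which is a requirement) and involves manual updates, which is not the least operational overhead.

B - Custom encryption code in a Lambda function has to be written and maintained, and storing the function in an S3 bucket does not provide storage for the encrypted data.

C - Yes. S3 meets the highly available storage requirement and is easy to access from a containerized application.

D - EBS volumes are attached to the container host and are replicated only within a single Availability Zone, so they are less available than S3 and less convenient for data created in containers.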
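The flow in option C can be sketched as a single function: encrypt with the KMS key, then write the ciphertext to S3. The key alias, bucket, and object key below are placeholders, and the two stand-in client classes exist only so the sketch runs without AWS access (the XOR in `FakeKms` is not real encryption). The calls mirror the KMS `Encrypt` and S3 `PutObject` operations; KMS `Encrypt` accepts at most 4096 bytes of plaintext, so larger certificates would need envelope encryption instead.

```python
def encrypt_and_store(kms_client, s3_client, key_id, bucket, object_key, plaintext):
    """Encrypt plaintext with a KMS key, then store the ciphertext in S3."""
    if len(plaintext) > 4096:
        raise ValueError("KMS Encrypt takes at most 4096 bytes; "
                         "use envelope encryption for larger data")
    response = kms_client.encrypt(KeyId=key_id, Plaintext=plaintext)
    ciphertext = response["CiphertextBlob"]
    s3_client.put_object(Bucket=bucket, Key=object_key, Body=ciphertext)
    return ciphertext


class FakeKms:
    """Stand-in for boto3.client("kms"); NOT real encryption."""

    def encrypt(self, KeyId, Plaintext):
        return {"CiphertextBlob": bytes(b ^ 0xFF for b in Plaintext)}


class FakeS3:
    """Stand-in for boto3.client("s3") that keeps objects in memory."""

    def __init__(self):
        self.objects = {}

    def put_object(self, Bucket, Key, Body):
        self.objects[(Bucket, Key)] = Body


s3 = FakeS3()
certificate = b"-----BEGIN CERTIFICATE-----"
blob = encrypt_and_store(FakeKms(), s3, "alias/example-certs",
                         "example-cert-bucket", "certs/app.pem.enc", certificate)
```

On a real instance the clients would pick up credentials from the EC2 role, which is why no access keys appear anywhere in the function.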

A solutions architect is designing a VPC with public and private subnets. The VPC and subnets use IPv4 CIDR blocks. There is one public subnet and one private subnet in each of three Availability Zones (AZs) for high availability. An internet gateway is used to provide internet access for the public subnets. The private subnets require access to the internet to allow Amazon EC2 instances to download software updates.

What should the solutions architect do to enable Internet access for the private subnets?

A. Create three NAT gateways, one for each public subnet in each AZ. Create a private route table for each AZ that forwards non-VPC traffic to the NAT gateway in its AZ.

B. Create three NAT instances, one for each private subnet in each AZ. Create a private route table for each AZ that forwards non-VPC traffic to the NAT instance in its AZ.

C. Create a second internet gateway on one of the private subnets. Update the route table for the private subnets that forward non-VPC traffic to the private internet gateway.

D. Create an egress-only internet gateway on one of the public subnets. Update the route table for the private subnets that forward non-VPC traffic to the egress-only Internet gateway.

Answer is Create three NAT gateways, one for each public subnet in each AZ. Create a private route table for each AZ that forwards non-VPC traffic to the NAT gateway in its AZ.

To enable Internet access for the private subnets, the solutions architect should create three NAT gateways, one for each public subnet in each Availability Zone (AZ). NAT gateways allow private instances to initiate outbound traffic to the Internet but do not allow inbound traffic from the Internet to reach the private instances.

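The solutions architect should then create a private route table for each AZ that forwards non-VPC traffic to the NAT gateway in its AZ. This will allow instances in the private subnets to access the Internet through the NAT gateways in the public subnets. The other options do not work: NAT instances must be placed in public subnets, not private ones, and add operational overhead; a VPC can have only one internet gateway, and it attaches to the VPC rather than to a subnet; and an egress-only internet gateway handles IPv6 traffic only, whereas this VPC uses IPv4 CIDR blocks.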
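The per-AZ routing can be sketched as one default route per private route table, each pointing at the NAT gateway in the same AZ. The AZ names and NAT gateway IDs below are placeholders, and `private_default_routes` is a helper invented for the example. The route keys match the parameters of the EC2 `CreateRoute` call.

```python
# Hypothetical AZ names and NAT gateway IDs, for illustration only.
nat_gateway_by_az = {
    "us-east-1a": "nat-0aaaaaaaaaaaaaaa1",
    "us-east-1b": "nat-0bbbbbbbbbbbbbbb2",
    "us-east-1c": "nat-0ccccccccccccccc3",
}


def private_default_routes(nat_by_az):
    """One default route per AZ, each targeting the NAT gateway in that AZ."""
    return {
        az: {"DestinationCidrBlock": "0.0.0.0/0", "NatGatewayId": nat_id}
        for az, nat_id in nat_by_az.items()
    }


routes = private_default_routes(nat_gateway_by_az)
```

Keeping each private subnet on the NAT gateway in its own AZ means the loss of one AZ does not cut off internet access for the other two.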

Reference:

https://docs.aws.amazon.com/vpc/latest/userguide/vpc-example-private-subnets-nat.html