MLS-C01: AWS Certified Machine Learning - Specialty

A Machine Learning Specialist is designing a system for improving sales for a company.

The objective is to use the large amount of information the company has on users' behavior and product preferences to predict which products users would like based on the users' similarity to other users.

What should the Specialist do to meet this objective?

Build a content-based filtering recommendation engine with Apache Spark ML on Amazon EMR.

Build a collaborative filtering recommendation engine with Apache Spark ML on Amazon EMR.

Build a model-based filtering recommendation engine with Apache Spark ML on Amazon EMR.

Build a combinative filtering recommendation engine with Apache Spark ML on Amazon EMR.

Answer is Build a collaborative filtering recommendation engine with Apache Spark ML on Amazon EMR.

Collaborative filtering is a technique used to recommend products to users based on their similarity to other users. It is a widely used method for building recommendation engines. Apache Spark ML is a distributed machine learning library that provides scalable implementations of collaborative filtering algorithms. Amazon EMR is a managed cluster platform that provides easy access to Apache Spark and other distributed computing frameworks.
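
Spark ML's built-in collaborative filtering (the ALS estimator) learns latent factors rather than comparing users directly. To make "similarity to other users" concrete, here is a minimal user-based sketch in plain Python instead. It scores each user pair by the Pearson correlation of their ratings on co-rated products and predicts unrated products from positively correlated users. The users, products, and ratings are hypothetical.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two users' rating dicts, over co-rated items."""
    common = set(a) & set(b)
    if len(common) < 2:
        return 0.0
    mean_a = sum(a[i] for i in common) / len(common)
    mean_b = sum(b[i] for i in common) / len(common)
    cov = sum((a[i] - mean_a) * (b[i] - mean_b) for i in common)
    var_a = sum((a[i] - mean_a) ** 2 for i in common)
    var_b = sum((b[i] - mean_b) ** 2 for i in common)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def recommend(ratings, user, top_n=3):
    """Predict ratings for unseen items as a similarity-weighted average of similar users."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = pearson(ratings[user], other_ratings)
        if sim <= 0:
            continue  # only users with similar tastes contribute
        for item, rating in other_ratings.items():
            if item in ratings[user]:
                continue  # already rated by the target user
            scores[item] = scores.get(item, 0.0) + sim * rating
            weights[item] = weights.get(item, 0.0) + sim
    predicted = {item: scores[item] / weights[item] for item in scores}
    return sorted(predicted.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


# Hypothetical ratings: alice agrees with bob and disagrees with carol.
SAMPLE_RATINGS = {
    "alice": {"laptop": 5, "mouse": 4},
    "bob": {"laptop": 5, "mouse": 4, "monitor": 5},
    "carol": {"laptop": 1, "mouse": 2, "keyboard": 5},
}

if __name__ == "__main__":
    print(recommend(SAMPLE_RATINGS, "alice"))  # [('monitor', 5.0)]
```

Alice is recommended bob's monitor but not carol's keyboard, because only bob's ratings correlate positively with hers. At EMR scale, the same ratings data would instead feed Spark ML's ALS.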

Reference:

https://aws.amazon.com/blogs/big-data/building-a-recommendation-engine-with-spark-ml-on-amazon-emr-using-zeppelin/

A Mobile Network Operator is building an analytics platform to analyze and optimize a company's operations using Amazon Athena and Amazon S3.

The source systems send data in .CSV format in real time. The Data Engineering team wants to transform the data to the Apache Parquet format before storing it on Amazon S3.

Which solution takes the LEAST effort to implement?

Ingest .CSV data using Apache Kafka Streams on Amazon EC2 instances and use Kafka Connect S3 to serialize data as Parquet.

Ingest .CSV data from Amazon Kinesis Data Streams and use AWS Glue to convert data into Parquet.

Ingest .CSV data using Apache Spark Structured Streaming in an Amazon EMR cluster and use Apache Spark to convert data into Parquet.

Ingest .CSV data from Amazon Kinesis Data Streams and use Amazon Kinesis Data Firehose to convert data into Parquet.

Answer is Ingest .CSV data from Amazon Kinesis Data Streams and use AWS Glue to convert data into Parquet.

Amazon Kinesis Data Firehose can convert the format of your input data from JSON to Apache Parquet or Apache ORC before storing the data in Amazon S3. Parquet and ORC are columnar data formats that save space and enable faster queries compared to row-oriented formats like JSON. If you want to convert an input format other than JSON, such as comma-separated values (CSV) or structured text, you can use AWS Lambda to transform it to JSON first. For more information, see Amazon Kinesis Data Firehose Data Transformation. With .CSV input, Firehose therefore needs an extra Lambda transformation step, which adds implementation work.

Reference:

https://docs.aws.amazon.com/firehose/latest/dev/record-format-conversion.html

https://github.com/ecloudvalley/Building-a-Data-Lake-with-AWS-Glue-and-Amazon-S3

https://aws.amazon.com/blogs/aws/new-serverless-streaming-etl-with-aws-glue/
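
The Lambda transformation that the Firehose explanation above calls for can be sketched as follows. It assumes Firehose's data-transformation record contract: each incoming record carries a `recordId` and base64-encoded `data`, and each returned record echoes the `recordId` with a `result` of `Ok` or `ProcessingFailed`. The column names in `FIELDS` are hypothetical.

```python
import base64
import csv
import io
import json

# Hypothetical column names for the incoming .CSV records.
FIELDS = ["cell_id", "timestamp", "dropped_calls"]


def lambda_handler(event, context):
    """Firehose transformation handler: one CSV line in, one JSON object out."""
    output = []
    for record in event["records"]:
        line = base64.b64decode(record["data"]).decode("utf-8").strip()
        try:
            values = next(csv.reader(io.StringIO(line)))  # StopIteration on an empty line
            if len(values) != len(FIELDS):
                raise ValueError("unexpected column count")
            payload = json.dumps(dict(zip(FIELDS, values))) + "\n"
            output.append({
                "recordId": record["recordId"],
                "result": "Ok",
                "data": base64.b64encode(payload.encode("utf-8")).decode("utf-8"),
            })
        except (StopIteration, ValueError):
            # Mark the record as failed instead of failing the whole batch.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
    return {"records": output}
```

Firehose's Parquet conversion also reads the target schema from a table in the AWS Glue Data Catalog, so that table has to be defined as well.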

A Machine Learning Specialist is using an Amazon SageMaker notebook instance in a private subnet of a corporate VPC.

The ML Specialist has important data stored on the Amazon SageMaker notebook instance's Amazon EBS volume, and needs to take a snapshot of that EBS volume. However, the ML Specialist cannot find the Amazon SageMaker notebook instance's EBS volume or Amazon EC2 instance within the VPC.

Why is the ML Specialist not seeing the instance visible in the VPC?

Amazon SageMaker notebook instances are based on the EC2 instances within the customer account, but they run outside of VPCs.

Amazon SageMaker notebook instances are based on the Amazon ECS service within customer accounts.

Amazon SageMaker notebook instances are based on EC2 instances running within AWS service accounts.

Amazon SageMaker notebook instances are based on AWS ECS instances running within AWS service accounts.

Answer is Amazon SageMaker notebook instances are based on EC2 instances running within AWS service accounts.

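The SageMaker-managed VPC exists only in an AWS-managed service account. A notebook instance can use either that AWS-managed VPC or a customer-managed VPC, but in both cases the underlying EC2 instance and its EBS volume live in the AWS service account.

Option A is incorrect because the instances do not run in the customer account. When a notebook instance is attached to a customer VPC, SageMaker places only an elastic network interface (ENI) in the customer's subnet. The notebook's traffic is therefore governed by that VPC's network policies, while the instance itself stays out of view.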
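
To see what a notebook instance exposes to the customer account, one can call the SageMaker DescribeNotebookInstance API (`describe_notebook_instance` on a boto3 SageMaker client). The sketch below assumes a boto3-style client. A hypothetical stub with made-up values stands in so it runs offline. As far as we know, the real response reports the subnet, the ENI, and the volume size, but no EC2 instance ID or EBS volume ID, which fits the explanation above.

```python
def notebook_network_view(sagemaker_client, notebook_name):
    """Collect the customer-visible details SageMaker reports for a notebook instance."""
    desc = sagemaker_client.describe_notebook_instance(NotebookInstanceName=notebook_name)
    return {
        "instance_type": desc.get("InstanceType"),
        "subnet_id": desc.get("SubnetId"),
        # The ENI is the only piece of the notebook placed in the customer's subnet.
        "network_interface_id": desc.get("NetworkInterfaceId"),
        "volume_size_gb": desc.get("VolumeSizeInGB"),
    }


class StubSageMakerClient:
    """Hypothetical offline stand-in for boto3.client("sagemaker"); values are made up."""

    def describe_notebook_instance(self, NotebookInstanceName):
        return {
            "NotebookInstanceName": NotebookInstanceName,
            "InstanceType": "ml.t3.medium",
            "SubnetId": "subnet-0example",
            "NetworkInterfaceId": "eni-0example",
            "VolumeSizeInGB": 5,
        }


if __name__ == "__main__":
    print(notebook_network_view(StubSageMakerClient(), "analysis-notebook"))
```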

Reference:

https://docs.aws.amazon.com/sagemaker/latest/dg/studio-notebooks-and-internet-access.html

A manufacturing company has structured and unstructured data stored in an Amazon S3 bucket. A Machine Learning Specialist wants to use SQL to run queries on this data.

Which solution requires the LEAST effort to be able to query this data?

Use AWS Data Pipeline to transform the data and Amazon RDS to run queries.

Use AWS Glue to catalogue the data and Amazon Athena to run queries.

Use AWS Batch to run ETL on the data and Amazon Aurora to run the queries.

Use AWS Lambda to transform the data and Amazon Kinesis Data Analytics to run queries.

Answer is Use AWS Glue to catalogue the data and Amazon Athena to run queries.

AWS Glue is a fully managed ETL service that makes it easy to move data between data stores. It can automatically crawl, catalogue, and classify data stored in Amazon S3, and make it available for querying and analysis. With AWS Glue, you don't have to worry about the underlying infrastructure and can focus on your data.

Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. It integrates with AWS Glue, so you can use the catalogued data directly in Athena without any additional data movement or transformation.
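
Once a Glue crawler has catalogued the S3 data, querying it from code is a start-then-poll loop against Athena. The sketch below assumes a boto3-style Athena client (`start_query_execution`, `get_query_execution`). The database name, table name, and S3 output location are hypothetical, and a stub client replaces AWS so the example runs offline.

```python
import time


def run_athena_query(athena_client, sql, database, output_location, poll_seconds=1.0):
    """Submit a SQL query to Athena and block until it reaches a terminal state."""
    response = athena_client.start_query_execution(
        QueryString=sql,
        # The database is the one created in the Glue Data Catalog (for example, by a crawler).
        QueryExecutionContext={"Database": database},
        # Athena writes query results as files under this S3 prefix.
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = response["QueryExecutionId"]
    while True:
        execution = athena_client.get_query_execution(QueryExecutionId=query_id)
        state = execution["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            return query_id, state
        time.sleep(poll_seconds)


class StubAthenaClient:
    """Hypothetical offline stand-in for boto3.client("athena"): RUNNING once, then SUCCEEDED."""

    def __init__(self):
        self.polls = 0
        self.last_request = None

    def start_query_execution(self, **request):
        self.last_request = request
        return {"QueryExecutionId": "example-query-id"}

    def get_query_execution(self, QueryExecutionId):
        self.polls += 1
        state = "RUNNING" if self.polls == 1 else "SUCCEEDED"
        return {"QueryExecution": {"QueryExecutionId": QueryExecutionId, "Status": {"State": state}}}


if __name__ == "__main__":
    client = StubAthenaClient()
    print(run_athena_query(client, "SELECT * FROM sensor_readings LIMIT 10",
                           "manufacturing_catalog", "s3://example-bucket/athena-results/",
                           poll_seconds=0))
```

With a real client, the rows can then be fetched with `get_query_results` or read from the output S3 location.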

Reference:

https://aws.amazon.com/glue/

A Machine Learning Specialist is developing a custom video recommendation model for an application. The dataset used to train this model is very large with millions of data points and is hosted in an Amazon S3 bucket. The Specialist wants to avoid loading all of this data onto an Amazon SageMaker notebook instance because it would take hours to move and will exceed the attached 5 GB Amazon EBS volume on the notebook instance.

Which approach allows the Specialist to use all the data to train the model?

Load a smaller subset of the data into the SageMaker notebook and train locally. Confirm that the training code is executing and the model parameters seem reasonable. Initiate a SageMaker training job using the full dataset from the S3 bucket using Pipe input mode.

Launch an Amazon EC2 instance with an AWS Deep Learning AMI and attach the S3 bucket to the instance. Train on a small amount of the data to verify the training code and hyperparameters. Go back to Amazon SageMaker and train using the full dataset.

Use AWS Glue to train a model using a small subset of the data to confirm that the data will be compatible with Amazon SageMaker. Initiate a SageMaker training job using the full dataset from the S3 bucket using Pipe input mode.

Load a smaller subset of the data into the SageMaker notebook and train locally. Confirm that the training code is executing and the model parameters seem reasonable. Launch an Amazon EC2 instance with an AWS Deep Learning AMI and attach the S3 bucket to train the full dataset.

Answer is Load a smaller subset of the data into the SageMaker notebook and train locally. Confirm that the training code is executing and the model parameters seem reasonable. Initiate a SageMaker training job using the full dataset from the S3 bucket using Pipe input mode.

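Pipe input mode streams training data from Amazon S3 directly into the training container instead of first downloading the whole dataset to the training instance's storage. This is what makes it suitable for very large datasets. Option C also uses Pipe mode, but AWS Glue is an ETL service and cannot train a model. Options B and D add a self-managed EC2 instance that is not needed.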
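
Inside the training container, a Pipe-mode channel is read as a stream rather than as files on disk. Pipe mode itself is enabled when the job is launched (for example, `input_mode="Pipe"` on a SageMaker Python SDK estimator). The sketch below shows the reading side, assuming newline-delimited records. The FIFO path `/opt/ml/input/data/train_0` follows SageMaker's `<channel>_<epoch>` naming as we understand it, and the channel name `train` is hypothetical.

```python
import io


def stream_records(fileobj, chunk_size=1024 * 1024):
    """Yield newline-delimited records from a stream without reading it all into memory."""
    buffer = b""
    while True:
        chunk = fileobj.read(chunk_size)
        if not chunk:
            break
        buffer += chunk
        # Everything before the last newline is complete; keep the tail for the next chunk.
        *complete, buffer = buffer.split(b"\n")
        for line in complete:
            if line:
                yield line
    if buffer:
        yield buffer


def count_records(path="/opt/ml/input/data/train_0"):
    """Read one epoch of a Pipe-mode channel (assumed FIFO path) record by record."""
    with open(path, "rb") as fifo:
        return sum(1 for _ in stream_records(fifo))


if __name__ == "__main__":
    # An in-memory stream stands in for the FIFO so the sketch runs anywhere.
    demo = io.BytesIO(b"user1,video9\nuser2,video4\nuser3,video7")
    print(list(stream_records(demo, chunk_size=8)))
```

Memory use stays bounded by the chunk size plus one partial record, regardless of the dataset's size.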

Reference:

https://aws.amazon.com/blogs/machine-learning/using-pipe-input-mode-for-amazon-sagemaker-algorithms/

A Machine Learning Specialist has completed a proof of concept for a company using a small data sample, and now the Specialist is ready to implement an end-to-end solution in AWS using Amazon SageMaker. The historical training data is stored in Amazon RDS.

Which approach should the Specialist use for training a model using that data?

Write a direct connection to the SQL database within the notebook and pull data in.

Push the data from Microsoft SQL Server to Amazon S3 using an AWS Data Pipeline and provide the S3 location within the notebook.

Move the data to Amazon DynamoDB and set up a connection to DynamoDB within the notebook to pull data in.

Move the data to Amazon ElastiCache using AWS DMS and set up a connection within the notebook to pull data in for fast access.

Answer is Push the data from Microsoft SQL Server to Amazon S3 using an AWS Data Pipeline and provide the S3 location within the notebook.

SageMaker training jobs typically read their input from Amazon S3, and option B is the only option that stages the data there. The one oddity in option B is that it names Microsoft SQL Server rather than Amazon RDS. Since SQL Server is one of the engines Amazon RDS supports, this is consistent with the data being held in RDS.

The older Amazon Machine Learning (Amazon ML) service uses the same pattern. It allows you to create a datasource object from data stored in a MySQL database in Amazon Relational Database Service (Amazon RDS). When you perform this action, Amazon ML creates an AWS Data Pipeline object that executes the SQL query that you specify and places the output into an S3 bucket of your choice. Amazon ML then uses that data to create the datasource.
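
The step that Data Pipeline performs here (run a SQL query against the database, write the result to S3, hand the S3 location to SageMaker) can be illustrated by hand. This is not AWS Data Pipeline itself. `sqlite3` stands in for the RDS connection (in practice a DB-API driver for the RDS engine), an in-memory stub replaces the boto3 S3 client, and the table, bucket, and key names are hypothetical.

```python
import csv
import io
import sqlite3


def export_query_to_s3(connection, sql, s3_client, bucket, key):
    """Run a SQL query over a DB-API connection and upload the result set to S3 as CSV."""
    cursor = connection.cursor()
    cursor.execute(sql)
    header = [column[0] for column in cursor.description]
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(cursor.fetchall())
    s3_client.put_object(Bucket=bucket, Key=key, Body=buffer.getvalue().encode("utf-8"))
    # This URI is what the notebook would pass as the training data location.
    return f"s3://{bucket}/{key}"


class InMemoryS3:
    """Hypothetical stand-in for boto3.client("s3") that keeps objects in a dict."""

    def __init__(self):
        self.objects = {}

    def put_object(self, Bucket, Key, Body):
        self.objects[(Bucket, Key)] = Body
        return {}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])
    print(export_query_to_s3(conn, "SELECT customer_id, amount FROM orders",
                             InMemoryS3(), "training-bucket", "orders/orders.csv"))
```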

Reference:

https://www.slideshare.net/AmazonWebServices/train-models-on-amazon-sagemaker-using-data-not-from-amazon-s3-aim419-aws-reinvent-2018

A Machine Learning Specialist receives customer data for an online shopping website. The data includes demographics, past visits, and locality information. The Specialist must develop a machine learning approach to identify the customer shopping patterns, preferences, and trends to enhance the website for better service and smart recommendations.

Which solution should the Specialist recommend?

Latent Dirichlet Allocation (LDA) for the given collection of discrete data to identify patterns in the customer database.

A neural network with a minimum of three layers and random initial weights to identify patterns in the customer database.

Collaborative filtering based on user interactions and correlations to identify patterns in the customer database.

Random Cut Forest (RCF) over random subsamples to identify patterns in the customer database.

Answer is Collaborative filtering based on user interactions and correlations to identify patterns in the customer database.

In natural language processing, the latent Dirichlet allocation (LDA) is a generative statistical model that allows sets of observations to be explained by unobserved groups that explain why some parts of the data are similar.

Amazon SageMaker Random Cut Forest (RCF) is an unsupervised algorithm for detecting anomalous data points within a data set.

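A neural network is a general-purpose model (commonly used for tasks such as image recognition). This option describes only its architecture and initialization, not a technique for relating customers' preferences to one another.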

Reference:

https://aws.amazon.com/blogs/machine-learning/extending-amazon-sagemaker-factorization-machines-algorithm-to-predict-top-x-recommendations/

A large mobile network operating company is building a machine learning model to predict customers who are likely to unsubscribe from the service. The company plans to offer an incentive for these customers as the cost of churn is far greater than the cost of the incentive.

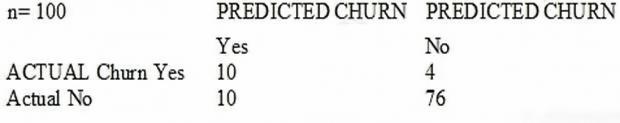

The model produces the following confusion matrix after evaluating on a test dataset of 100 customers:

Based on the model evaluation results, why is this a viable model for production?

The model is 86% accurate and the cost incurred by the company as a result of false negatives is less than the false positives.

The precision of the model is 86%, which is less than the accuracy of the model.

The model is 86% accurate and the cost incurred by the company as a result of false positives is less than the false negatives.

The precision of the model is 86%, which is greater than the accuracy of the model.

Answer is The model is 86% accurate and the cost incurred by the company as a result of false negatives is less than the false positives.

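The question says "the cost of churn is far greater than the cost of the incentive", so the company wants to catch as many of the customers who will actually churn as possible. A missed churner (a false negative) costs the company that customer, while a false positive only costs an incentive that was not strictly needed. False negatives should therefore be kept as low as possible, and the model should err on the side of false positives: we want false negatives < false positives.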
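
Accuracy, precision, recall, and the cost of each error type all follow from the four cells of a confusion matrix. The sketch below computes them from counts and per-customer costs. The function name and the cost inputs are hypothetical, and the counts from the figure are not reproduced here.

```python
def confusion_summary(tp, fp, fn, tn, cost_fn, cost_fp):
    """Metrics and total error costs for a binary churn classifier.

    tp/fp/fn/tn are confusion-matrix counts; cost_fn is the assumed cost of one
    missed churner and cost_fp the assumed cost of one unnecessary incentive.
    """
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,                       # share of all predictions that are right
        "precision": tp / (tp + fp) if tp + fp else 0.0,     # share of predicted churners who churn
        "recall": tp / (tp + fn) if tp + fn else 0.0,        # share of actual churners caught
        "cost_of_false_negatives": fn * cost_fn,
        "cost_of_false_positives": fp * cost_fp,
    }
```

Passing in the matrix's four counts, along with the company's estimates of what a lost customer and an incentive cost, shows both the accuracy figure and how the two error costs compare.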

A city wants to monitor its air quality to address the consequences of air pollution.

A Machine Learning Specialist needs to forecast the air quality in parts per million of contaminants for the next 2 days in the city.

As this is a prototype, only daily data from the last year is available.

Which model is MOST likely to provide the best results in Amazon SageMaker?

Use the Amazon SageMaker k-Nearest-Neighbors (kNN) algorithm on the single time series consisting of the full year of data with a predictor_type of regressor.

Use Amazon SageMaker Random Cut Forest (RCF) on the single time series consisting of the full year of data.

Use the Amazon SageMaker Linear Learner algorithm on the single time series consisting of the full year of data with a predictor_type of regressor.

Use the Amazon SageMaker Linear Learner algorithm on the single time series consisting of the full year of data with a predictor_type of classifier.

Answer is Use the Amazon SageMaker k-Nearest-Neighbors (kNN) algorithm on the single time series consisting of the full year of data with a predictor_type of regressor.

Random Cut Forest is an anomaly-detection algorithm rather than a forecaster. The Linear Learner with a predictor_type of classifier predicts discrete classes rather than a continuous concentration. Neither fits this task.

The k-Nearest-Neighbors (kNN) algorithm with a predictor_type of regressor is the best fit among the options. The goal is to forecast a continuous value (air quality in parts per million of contaminants). A kNN regressor can predict the next values by averaging what followed the most similar stretches of past data, without assuming a particular functional form.

The Amazon SageMaker Linear Learner algorithm with a predictor_type of regressor may still provide reasonable results. However, it assumes a linear relationship between the input features and the target variable (air quality), which may not hold for time series data with complex or irregular patterns. In such cases, a non-linear model like kNN may perform better and give more accurate predictions.
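
SageMaker's kNN algorithm works on feature vectors rather than on a raw series. One way to apply it here is to turn the daily readings into lagged windows, as in the tsfknn reference: each window of the previous few days is a feature vector, and the value that followed it is the regression target. The plain-Python sketch below shows that idea with a recursive multi-step forecast. It is not the SageMaker implementation, and the `lags` and `k` values are hypothetical.

```python
def knn_forecast(series, lags=3, k=3, horizon=2):
    """Recursive k-nearest-neighbors regression forecast for a univariate series.

    Each training example is a window of `lags` consecutive values (the features)
    paired with the value that immediately followed it (the target).
    """
    history = list(series)
    if len(history) <= lags:
        raise ValueError("series must be longer than the number of lags")
    forecasts = []
    for _ in range(horizon):
        examples = [
            (history[i:i + lags], history[i + lags])
            for i in range(len(history) - lags)
        ]
        query = history[-lags:]
        # Keep the k windows closest to the latest window (squared Euclidean distance).
        nearest = sorted(
            examples,
            key=lambda ex: sum((a - b) ** 2 for a, b in zip(ex[0], query)),
        )[:k]
        # The prediction is the mean of the values that followed those windows.
        prediction = sum(target for _, target in nearest) / len(nearest)
        forecasts.append(prediction)
        # Append the prediction so the next day can be forecast from it (recursive strategy).
        history.append(prediction)
    return forecasts
```

For the prototype, `series` would be the year of daily readings and `horizon=2` the next two days. `lags` and `k` would be tuned on held-out days.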

Reference:

https://cran.r-project.org/web/packages/tsfknn/vignettes/tsfknn.html

A Machine Learning Specialist is working with a large company to leverage machine learning within its products. The company wants to group its customers into categories based on which customers will and will not churn within the next 6 months. The company has labeled the data available to the Specialist.

Which machine learning model type should the Specialist use to accomplish this task?

Linear regression

Classification

Clustering

Reinforcement learning

Answer is Classification

The goal of classification is to determine which class or category a data point (in our case, a customer) belongs to. For classification problems, data scientists use historical data with predefined target variables, also known as labels (churner/non-churner), which are the answers that need to be predicted, to train an algorithm. With classification, businesses can answer questions such as the following:

- Will this customer churn or not?
- Will a customer renew their subscription?
- Will a user downgrade a pricing plan?
- Are there any signs of unusual customer behavior?

Classification is a type of supervised learning that predicts a discrete categorical value, such as yes or no, spam or not spam, or churn or not churn [1]. Classification models are trained using labeled data, which means that the input data has a known target attribute that indicates the correct class for each instance [2]. For example, a classification model that predicts customer churn would use data that has a label indicating whether the customer churned or not in the past.

Classification models can be used for various applications, such as sentiment analysis, image recognition, fraud detection, and customer segmentation [2]. Classification models can also handle both binary and multiclass problems, depending on the number of possible classes in the target attribute [3].
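
As a small illustration of training on labeled churn data, here is a binary logistic-regression classifier fitted with batch gradient descent in plain Python. Logistic regression is swapped in as one simple classifier. On SageMaker, built-in choices would include Linear Learner with a `binary_classifier` predictor_type or XGBoost. The two features and all the data are hypothetical.

```python
from math import exp


def churn_probability(weights, bias, x):
    """Logistic (sigmoid) of the linear score w.x + b."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + exp(-score))


def train_logistic_regression(rows, labels, lr=0.1, epochs=500):
    """Fit a binary classifier on labeled rows with batch gradient descent on log-loss."""
    n_features = len(rows[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(rows, labels):
            error = churn_probability(weights, bias, x) - y  # d(log-loss)/d(score)
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def predict_churn(weights, bias, x, threshold=0.5):
    """Return 1 (churner) or 0 (non-churner)."""
    return int(churn_probability(weights, bias, x) >= threshold)


# Hypothetical labeled data: [support_calls_scaled, tenure_scaled]; label 1 = churned.
SAMPLE_ROWS = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.1], [0.1, 0.9], [0.2, 0.8], [0.1, 0.7]]
SAMPLE_LABELS = [1, 1, 1, 0, 0, 0]

if __name__ == "__main__":
    w, b = train_logistic_regression(SAMPLE_ROWS, SAMPLE_LABELS)
    print([predict_churn(w, b, x) for x in SAMPLE_ROWS])
```

The labels are what make this supervised classification rather than clustering: the model learns the boundary between the two predefined classes instead of discovering groups on its own.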

Reference:

https://www.kdnuggets.com/2019/05/churn-prediction-machine-learning.html